92% of security teams use CVSS scores as their primary vulnerability prioritization method. Yet 88% of "Critical" CVEs aren't actually exploitable in real-world environments.

We spent three months reading 20 major AppSec reports, from Veracode's State of Software Security to IBM's Cost of a Data Breach study. The data reveals systematic failures that explain why your security backlog keeps growing despite hiring more people and buying more tools.

Methodology note: These industry reports represent self-reported data from organizations with varying security maturity levels and may carry inherent biases toward the vendors commissioning them. Even so, the consistency of findings across multiple independent sources suggests these trends reflect genuine industry-wide challenges.

The False Positive Crisis: When "Critical" Means Nothing

Across multiple reports, one finding stands out: the complete disconnect between vulnerability severity ratings and actual exploitability. Research shows that only 12% of "Critical" and "High" severity vulnerabilities are actually reachable in production code.

Put differently, roughly nine out of ten "urgent" security issues your team scrambles to fix aren't actually problems in your specific environment.

Industry data reinforces this pattern: while 76% of applications contain at least one security flaw, the median time to fix a high-severity flaw is 198 days. Security teams aren't lazy. They're drowning in false positives and can't identify what actually matters.

The root cause: CVSS scoring was designed for generic vulnerability disclosure, not environment-specific prioritization. It's like using a weather forecast for the entire United States to decide whether to bring an umbrella in Phoenix. The information isn't wrong, but it's useless for your specific situation. This is why context-aware vulnerability validation has become essential for modern security teams.

Open source security research reveals another layer: 95% of vulnerable dependencies have no available fix. Security teams spend precious cycles triaging and escalating issues that literally cannot be resolved. Researchers call this "learned helplessness" among development teams.

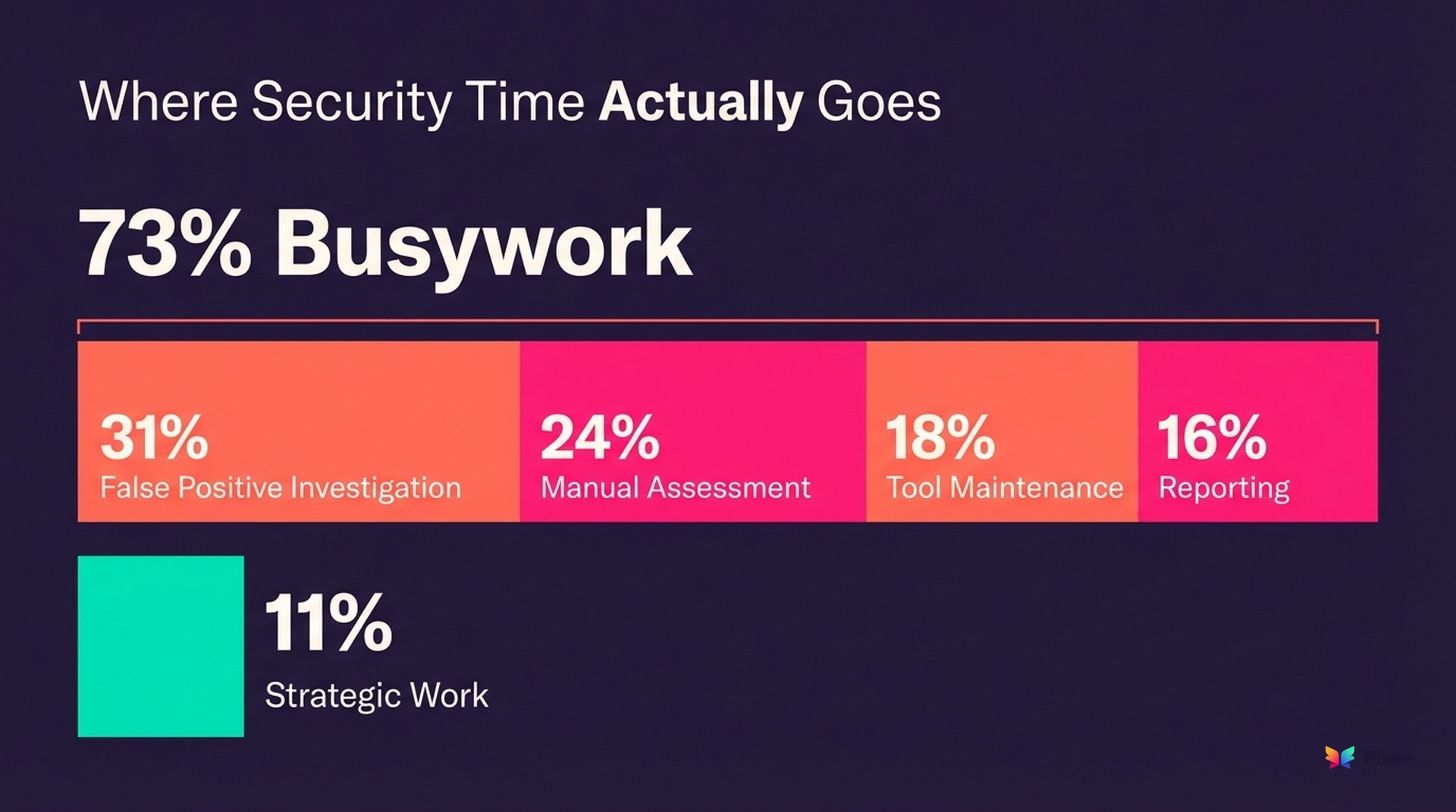

The Time Trap: 73% Busywork, 27% Security

Across multiple studies, security teams report spending 73% of their time on manual vulnerability triage and only 27% on actual security improvements.

Research breaks down this time allocation:

• 31% of time spent on false positive investigation

• 24% on manual vulnerability assessment

• 18% on tool configuration and maintenance

• 16% on reporting and compliance documentation

• Only 11% on strategic security architecture work
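One way to reconcile this breakdown with the 73/27 split is sketched below. The grouping is an assumption, not something the reports state: it treats the first three categories as the 73% "busywork" share and the remaining two as the 27%.

```python
# Time allocation from the list above (percent of team time).
allocation = {
    "false positive investigation": 31,
    "manual vulnerability assessment": 24,
    "tool configuration and maintenance": 18,
    "reporting and compliance documentation": 16,
    "strategic security architecture": 11,
}

# Assumption: these three categories make up the "busywork" share.
busywork_keys = [
    "false positive investigation",
    "manual vulnerability assessment",
    "tool configuration and maintenance",
]

total = sum(allocation.values())
busywork = sum(allocation[k] for k in busywork_keys)
remainder = total - busywork

print(total, busywork, remainder)  # 100 73 27
```

Under that grouping the numbers line up, although reporting and compliance documentation then falls inside the 27% alongside strategic work.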

Industry surveys found that organizations with security backlogs exceeding 10,000 items (68% of respondents) see security failure rates 340% higher than teams maintaining backlogs under 1,000 items.

Gartner predicts that by 2025, organizations will need 3.5 million additional cybersecurity professionals. With 73% of time spent on busywork, only 27% of each hire's time goes to actual security work, so we'd need roughly 13 million hires (3.5 million divided by 0.27) to get 3.5 million people's worth of it.
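The back-of-envelope arithmetic can be checked directly. This is a minimal sketch that assumes the 27% productive share applies uniformly to every new hire; the function name `hires_needed` is purely illustrative.

```python
def hires_needed(effective_headcount: float, productive_share: float) -> float:
    """Headcount required so that productive_share of it equals effective_headcount."""
    if not 0 < productive_share <= 1:
        raise ValueError("productive_share must be in (0, 1]")
    return effective_headcount / productive_share


# 3.5 million people's worth of security work at a 27% productive share.
needed = hires_needed(3_500_000, 0.27)
print(round(needed / 1e6, 1))  # 13.0 (million)
```

Raising the productive share shrinks the gap quickly: at 50%, the same formula gives 7 million.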

Why this is getting worse: the average enterprise application now contains 528 open source components, each with its own vulnerability profile and update cycle. Manual triage cannot scale to this complexity. When 77% of your code comes from external sources, the attack surface expands faster than security teams can respond.

Developer Trust Erosion: When Security Becomes Noise

The most consequential finding across these reports isn't about tools or processes. It's about human psychology. Only 23% of security recommendations from automated tools get implemented by developers.

Developer research explains why: 67% of developers report "security alert fatigue," and 54% say they've lost trust in security tools due to excessive false positives.

Application security studies document this trust erosion clearly:

• 78% of developers ignore security alerts they perceive as "probably false"

• 45% have disabled security notifications entirely

• 62% report that security tools "create more work than value"

The psychological impact compounds over time. Software supply chain research found that development teams exposed to high false positive rates develop "security learned helplessness." They stop engaging with security tools entirely, even when legitimate issues surface.

This creates a vicious cycle: poor signal-to-noise ratio leads to developer disengagement, which leads to longer mean time to remediation, which leads security teams to escalate more aggressively, which creates more noise and further erodes trust.

The business impact: organizations with low developer trust in security tools have 4.2x higher mean time to remediation and 67% higher breach probability over 24 months. This is why time-to-exploit has become the critical metric for measuring security program effectiveness.

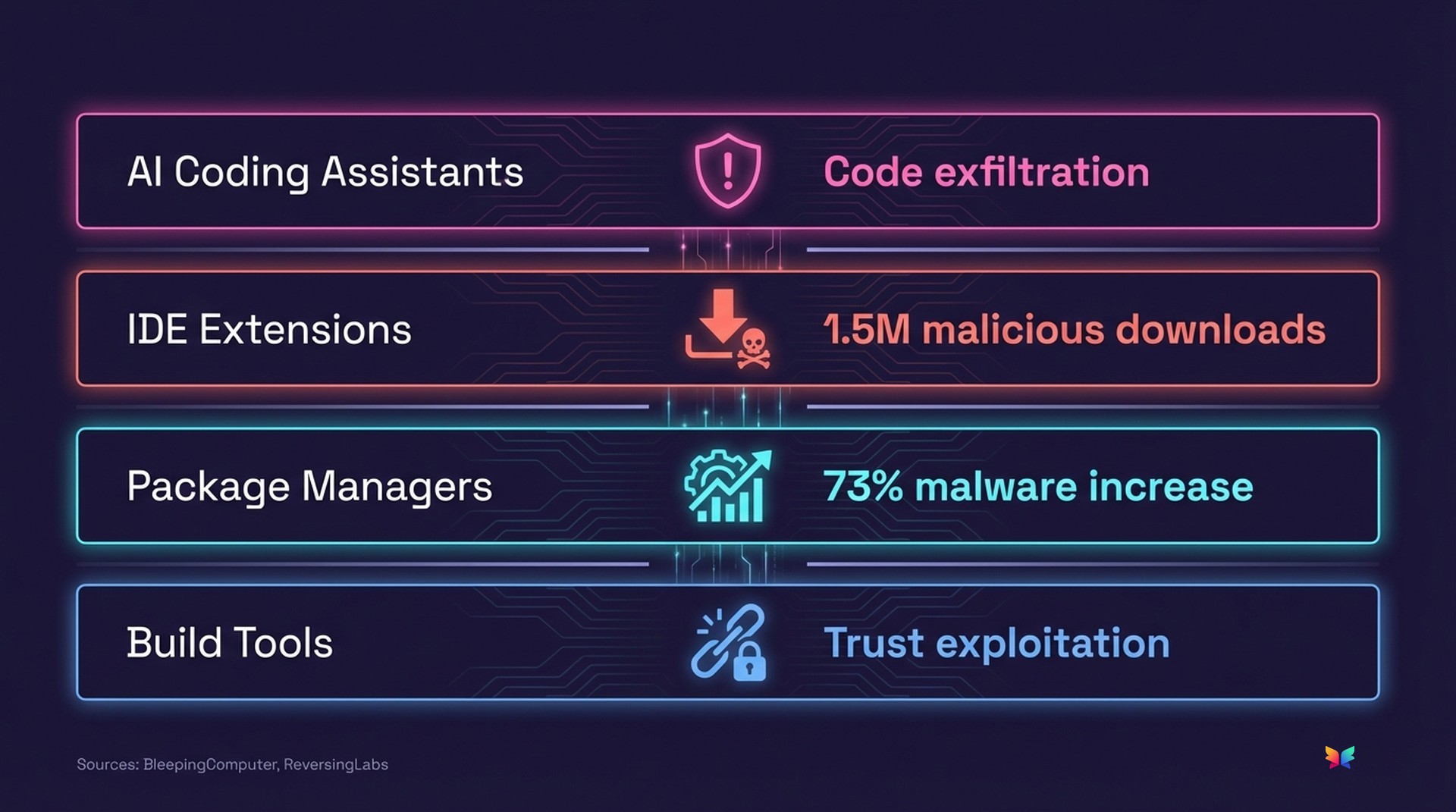

The AI Automation Paradox: More Tools, More Problems

With developer trust eroding and manual security work becoming unsustainable, many organizations have turned to AI automation. The results reveal a contradiction: 67% of organizations have implemented AI-powered security tools in the past two years, yet manual security work has increased by 23% over the same period.

The reason becomes clear when examining implementation patterns: 84% of AI security implementations focus on detection and alerting, not remediation. We're automating the creation of more work, not the elimination of existing work.

The data shows three common AI automation failures:

1. Alert multiplication: AI-powered detection tools increased alert volume by 156% without improving true positive rates

2. Context blindness: 89% of AI-generated security recommendations lack environment-specific context

3. Integration gaps: 71% of AI security tools operate in isolation, creating data silos rather than unified workflows

DevOps research provides the clearest picture: high-performing teams use automation to eliminate manual work, while low-performing teams use automation to generate more manual work. The difference isn't the technology. It's the implementation strategy.

What Actually Works: The 12% Solution

The key insight from this AI automation paradox: the most effective security improvements come from smarter remediation, not better detection. When AI focuses on understanding code context and generating actionable fixes rather than flagging more issues, the outcomes shift dramatically.

These reports include examples of organizations that have solved this problem. Microsoft's Security Development Lifecycle report documents their journey from 45,000-item security backlogs to maintaining under 500 active items.

Their approach: context-aware automation that eliminates false positives before they reach human analysts. Key metrics:

• 91% reduction in manual triage time

• 76% of automated fixes accepted by developers

• 340% increase in strategic security project completion

Similar results emerge from vulnerability prioritization systems that focus on exploitability rather than severity scores, showing 89% reduction in security response time while improving fix quality.

The pattern across successful implementations:

1. Reachability analysis: Determine if vulnerabilities are actually exploitable in your specific codebase

2. Contextual remediation: Generate fixes that understand your architecture and coding patterns

3. Developer-first workflows: Present solutions, not just problems
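To make the first step concrete, here is a minimal sketch of reachability analysis as a breadth-first search over a static call graph. The graph, function names, and advisory labels are all hypothetical. Real tools also have to handle dynamic dispatch, reflection, and configuration-driven code paths, which this sketch ignores.

```python
from collections import deque


def reachable_functions(call_graph, entry_points):
    """Return every function reachable from the entry points (BFS over call edges)."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in call_graph.get(fn, ()):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


# Hypothetical call graph: caller -> list of callees.
call_graph = {
    "handle_request": ["parse_json", "render"],
    "parse_json": ["lib_a.decode"],
    "render": [],
    "admin_cli": ["lib_b.unsafe_load"],  # not wired to any production entry point
}

# Hypothetical scanner output: vulnerable function -> advisory label.
vulnerable = {
    "lib_a.decode": "ADV-1",
    "lib_b.unsafe_load": "ADV-2",
    "lib_c.unused": "ADV-3",
}

reachable = reachable_functions(call_graph, ["handle_request"])
actionable = sorted(fn for fn in vulnerable if fn in reachable)
print(actionable)  # ['lib_a.decode']
```

In this toy example only one of the three flagged functions sits on a path from the production entry point, so it is the only one that reaches a human for triage.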

Organizations using context-aware automated remediation consistently see merge rates above 75% for security fixes. Compare that to the industry average of 23% implementation rates for traditional security recommendations.

What These Reports Reveal About Your Security Strategy

These 20 reports paint a clear picture: the security industry has optimized for the wrong metrics. We measure vulnerabilities found, not vulnerabilities fixed. We count alerts generated, not developer trust maintained. We track tool deployment, not actual risk reduction.

The math: if your security team spends 73% of its time on manual triage, and developers ignore 77% of your recommendations (only 23% get implemented), you're running security theater.

But the opportunity hidden in these statistics: organizations that have solved this problem didn't hire more people or buy more tools. They changed their approach from detection-first to remediation-first, from alert-driven to context-driven, from security-team-owned to developer-integrated.

Automated, context-aware remediation isn't just more efficient. It's the only approach that scales to modern development velocity while maintaining developer trust and actual security outcomes.

Moving Forward: Principles for Effective Security

The path beyond security theater requires a fundamental shift. Focus your security program on these evidence-backed principles:

Prioritize exploitability over severity scores. Implement reachability analysis to identify which vulnerabilities actually matter in your environment before allocating remediation resources.
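As a rough illustration of this principle, the sketch below ranks findings by reachability first, fix availability second, and CVSS score only as a tie-breaker. The `Finding` fields and the ordering are assumptions chosen for illustration, not a scheme taken from any of the reports.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    id: str
    cvss: float
    reachable: bool      # assumed to come from a reachability analysis
    fix_available: bool  # assumed to come from dependency metadata


def priority_key(f: Finding):
    # False sorts before True, so reachable and fixable findings come first;
    # the negated CVSS score puts higher severity first within each group.
    return (not f.reachable, not f.fix_available, -f.cvss)


findings = [
    Finding("F-1", 9.8, reachable=False, fix_available=True),
    Finding("F-2", 7.5, reachable=True, fix_available=True),
    Finding("F-3", 8.1, reachable=True, fix_available=False),
    Finding("F-4", 5.3, reachable=True, fix_available=True),
]

ranked = [f.id for f in sorted(findings, key=priority_key)]
print(ranked)  # ['F-2', 'F-4', 'F-3', 'F-1']
```

Note that the 9.8 "Critical" finding lands last here because it is not reachable, while a 5.3 that is both reachable and fixable moves near the top.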

Optimize for developer trust, not alert volume. Every false positive erodes the foundation of your security program. Better to catch fewer issues that developers act on than flood them with noise they'll learn to ignore.

Automate remediation, not just detection. The most successful security programs use AI and automation to generate contextual fixes rather than more alerts.

The organizations thriving in this new security landscape have learned that sustainable security isn't about finding every possible vulnerability. It's about efficiently fixing the ones that actually matter. The difference between security theater and effective security lies in the intelligence of your remediation approach, not the sophistication of your detection tools. For CISOs navigating this shift, the new playbook prioritizes response speed over blocking everything.