Beyond the Black Box: How Pixee Validates AI-Powered Vulnerability Triage

Every CISO faces the same paradox large backlogs of vulnerabilities and AI code generation escalating code velocity increasing the need and pressure to use AI on the security side to keep up.

The challenge with AI adoption here is that accepting "trust the black box" isn't an option when you're accountable for every missed vulnerability and every hour developers waste chasing ghosts.

Ultimately, the question isn't whether to use AI for vulnerability triage. It's whether the AI system making security decisions has been validated with the same rigor you'd demand from any production system handling risk.

The Non-Determinism Challenge

Large language models are non-deterministic by design. Temperature settings, sampling methods, and model updates mean the same vulnerability in the same code can produce different triage decisions each time the analysis runs. This isn't a bug. It's fundamental to how LLMs work.

In most software contexts, non-determinism is manageable. In security contexts, "it depends" creates accountability problems. When your SAST tool flags a SQL injection vulnerability, you need a consistent, auditable answer: true positive or false positive. Not "probably true positive, but run it again to be sure."

Traditional security tools are deterministic. Same input, same output, every time. Predictable. Testable. Auditable. When they make mistakes, you can trace the exact rule logic that failed and fix it.

AI-powered triage tools introduce a different risk profile. The model that evaluated 10,000 findings yesterday might evaluate them differently today after a provider updates the foundation model. The triage decision that marked a hardcoded credential as a false positive in test code might flag it differently when the same code pattern appears in a production context.

The stakes are straightforward. False negatives mean missed vulnerabilities reach production. False positives mean developers waste time investigating benign code, eroding trust in your security program. Both failures have costs you measure in incident response hours or developer productivity loss.

Pixee's Solution: Pre-Deployment Benchmark Validation

Pixee treats vulnerability triage as a production AI system making security-critical decisions that require validation before deployment.

Before any triage analyzer ships to customers, it runs against a benchmark suite of real vulnerabilities with verified ground truth labels. Every model we consider recommending to our customers, such as gpt-4o for fix generation or o3-mini for complex reasoning tasks, triggers re-evaluation against the same benchmark. No deployment without meeting production accuracy thresholds.

This isn't Pixee-specific innovation. It's standard practice in AI engineering. Companies deploying production AI systems for fraud detection, medical diagnosis, or autonomous driving all validate models against benchmark datasets before production deployment. The methodology is called MLOps—the discipline of treating machine learning models with the same rigor as any production system. This approach to AI-powered remediation ensures that models understand code context deeply before making security decisions.

What makes this uncommon in the security tools market is that many vendors treat AI as a feature to ship fast, not a system to validate thoroughly. "AI-powered" becomes a marketing label, not an engineering commitment. CISOs inherit the validation burden—running pilots, comparing outputs, hoping the accuracy holds when the vendor updates their model next month.

Pixee's benchmark approach inverts this. The vendor validates accuracy before shipping. Customers receive evidence of validation, not promises. When a model upgrade happens, re-validation happens first. Production accuracy is the vendor's responsibility, not the customer's detective work.

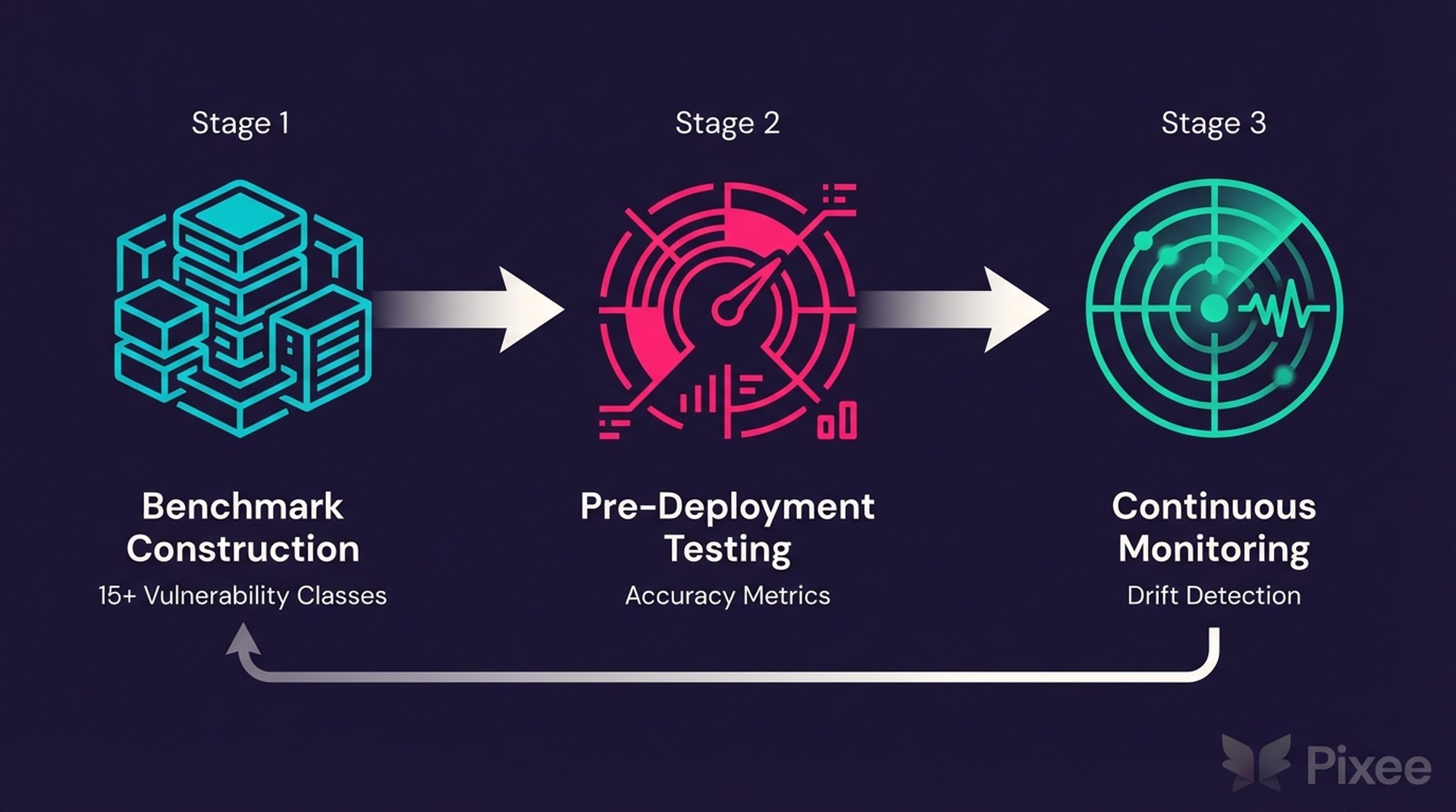

The Validation Process: Three-Stage Rigor

Stage 1: Benchmark Suite Construction

The foundation is a curated dataset of vulnerabilities with known ground truth. Not synthetic examples—real SAST findings from production codebases, each manually verified by security engineers.

The benchmark covers 15+ vulnerability classes: SQL injection, cross-site scripting, command injection, path traversal, hardcoded credentials, insecure cryptography, and others. Each vulnerability type includes true positives (real security issues) and false positives (benign code flagged incorrectly).

Edge cases matter most. Test code that looks vulnerable but isn't production-critical. Intentionally vulnerable applications built for security training. Code with security controls present—input validation, parameterized queries, allowlist checks—that mitigate the flagged issue. These are the scenarios where triage gets hard, where AI models make mistakes, and where benchmark validation catches problems before customers do.

The benchmark isn't static. As Pixee's triage system encounters new patterns—new SAST tools, new vulnerability types, new customer codebases—the benchmark grows. Monthly updates ensure the validation dataset reflects current production reality.

Stage 2: Pre-Deployment Testing

Before a triage analyzer ships, it runs the full benchmark suite. Pixee measures standard AI evaluation metrics: accuracy (overall correctness), precision (true positive rate), and recall (false negative rate). These metrics break down by vulnerability class because an analyzer that's 95% accurate on SQL injection but 60% accurate on deserialization issues isn't production-ready.

Failure modes matter as much as aggregate scores. When the analyzer makes mistakes, engineers analyze why. Was the code context insufficient? Did the model miss a security control? Did a specific SAST tool's output format confuse the analyzer? Root cause analysis drives fixes before deployment.

Model upgrades trigger mandatory re-validation. When OpenAI releases GPT-4o or Anthropic ships Claude Sonnet 4.5, Pixee doesn't assume backward compatibility. The entire benchmark suite runs again. Foundation model improvements don't always transfer to specialized tasks like vulnerability analysis. Sometimes accuracy improves. Sometimes it degrades. Benchmark testing catches regression before customers experience it.

Deployment happens only after accuracy meets production thresholds. What's the threshold? Pixee doesn't publish exact numbers (competitive intelligence), but the principle is clear: the analyzer must outperform manual triage by security engineers on the same benchmark dataset. If human experts achieve 85% accuracy, the AI must exceed that consistently.

Stage 3: Continuous Monitoring

Production deployment isn't the end of validation—it's the beginning of monitoring. Pixee tracks triage accuracy in production through multiple channels.

Customer feedback provides ground truth. When security engineers override Pixee's triage decisions—marking a false positive as a true positive or vice versa—that signal feeds back into accuracy measurement. High override rates on specific vulnerability types trigger investigation. Is the analyzer wrong? Is the SAST tool producing ambiguous findings? Is customer code using patterns the benchmark didn't cover?

Monthly benchmark re-runs detect model drift. Foundation model providers update models continuously. OpenAI's GPT-4o today might behave differently than GPT-4o six months from now. Scheduled re-validation catches accuracy degradation before it becomes a customer problem.

Rapid response matters. If production monitoring detects an accuracy issue—say, a spike in false negatives for a specific vulnerability type—Pixee's engineering team investigates immediately. Root cause analysis, benchmark expansion, analyzer refinement, re-validation, and deployment follow a defined incident response process.

Why This Matters: Production-Ready vs. Experimental AI

The security tools market is flooding with "AI-powered" products. Foundation model APIs are accessible. Integrating ChatGPT or Claude into a security tool takes weeks, not months. Vendors add "AI" to their feature lists, ship fast, and let customers discover accuracy problems in production.

This is the experimental AI approach: iterate publicly, fix issues reactively, accept that early customers experience lower quality. In some markets, this works. Early adopters tolerate instability for competitive advantage.

Security isn't one of those markets. CISOs are accountable for every vulnerability that reaches production and every false positive that wastes developer time. "We're iterating on our AI" doesn't satisfy a board after a breach. "Our model is learning from your code" doesn't justify developer teams ignoring your security findings.

Pre-deployment validation is the difference between "AI-powered" as a marketing claim and "production-ready AI" as an engineering commitment. It's treating AI as a safety-critical system that requires evidence of validation before deployment, not an experiment customers help debug.

Regulatory scrutiny is increasing. The EU AI Act classifies security tools as high-risk AI systems requiring conformity assessment. The SEC is asking CISOs to explain how they validate third-party AI tools. Vendor risk management questionnaires now include sections on AI validation methodology. "Trust us, the AI works" isn't an acceptable answer.

Pixee's multi-tier triage system—structured analyzers, ReACT agents, and adaptive generation—all follow this validation methodology. The structured analyzers (deterministic, rule-based) validate against benchmarks. The ReACT agents (dynamic investigation with tool calls) validate against benchmarks. The adaptive analyzers (generated on-the-fly for unknown rules) validate against benchmarks. Production-ready means every component meets the same standard.

The Outcome: Confidence Without Compromising Coverage

Pre-deployment validation delivers three outcomes CISOs care about: accuracy, transparency, and coverage.

Accuracy: Pixee eliminates 70-80% of false positives before findings reach developers. This isn't marketing—it's measured against benchmark datasets and validated in customer production environments. False positive reduction matters because it determines whether developers trust your security program or ignore it.

Transparency: Every triage decision includes justification, confidence score, and evidence. When Pixee marks a finding as a false positive, the audit trail shows which security controls were detected, which code patterns were analyzed, and how confident the model is. No black box. No "trust the AI." Auditable decisions that satisfy compliance requirements and internal review processes.

Coverage: Benchmark-validated triage works across 10+ SAST tools and any scanner producing SARIF output. Pixee doesn't require you to change detection tools to get validated remediation. CodeQL, SonarQube, Checkmarx, Veracode, Snyk—validated triage works on all of them because validation happens on the triage logic, not the scanner input format.

The speed matters too. Benchmark-validated triage runs in seconds, not the hours manual review requires. A security engineer reviewing 10,000 findings manually at 15 seconds per finding spends 42 hours. Pixee's triage system processes the same scan in minutes. Scale without accuracy compromise is the point.

If you're interested in learning more, we're happy to show you exactly how the product works.