From 'Block Everything' to 'Respond Fast': The CISO's New Playbook for AI Security

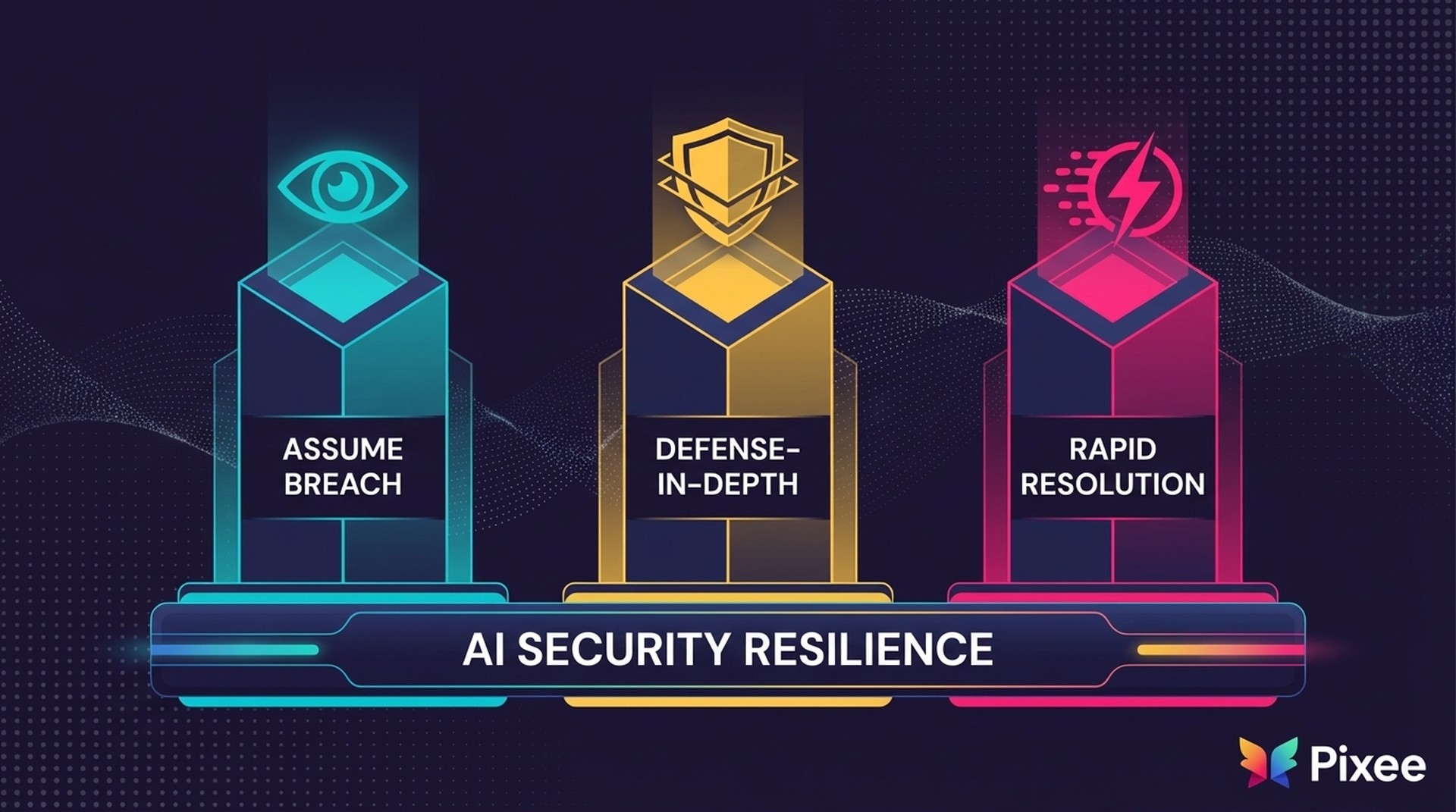

The UK NCSC's December 2025 guidance declares prompt injection "may never be totally mitigated." Security leaders who understand what this means are already shifting budgets from prevention tools to response capabilities. Three operational pillars—assume breach, defense-in-depth, rapid resolution—replace the prevention-only mindset. The competitive advantage goes to organizations that build resolution capability matching their detection capability.

The NCSC Reality Check

The UK's National Cyber Security Centre doesn't issue guidance lightly. When the NCSC declares that prompt injection attacks "may never be totally mitigated" in their official LLM security guidance, security leaders need to pay attention. The agency is telling you to stop chasing perfect prevention and start building response muscle.

The guidance, published in December 2025, represents a fundamental shift in how authoritative bodies think about AI security. Rather than promising that perfect prevention is achievable with the right tools or configurations, the NCSC acknowledges what security researchers have known for years: large language models lack the architectural separation between data and instructions that makes traditional injection attacks preventable.

This should sound familiar. SQL injection has persisted for over 20 years despite widespread awareness, extensive tooling, and mature mitigation strategies. The vulnerability continues to appear in OWASP's Top 10 not because developers don't know about it, but because the challenge of ensuring complete input sanitization across complex application stacks remains fundamentally difficult.

SQL injection persists, yet the industry has learned to live with it. Prompt injection may well follow the same trajectory.

Why the Prevention-Only Mindset Fails for AI

The NCSC's guidance reflects a technical reality. LLMs process instructions and data in the same context, without clear separation. You can't patch this out. It's how the models work.

| | Traditional AppSec | AI Agentic Security |
| --- | --- | --- |
| Architecture | Strict separation between data and instructions | Data is the instruction set |
| Primary Defense | Input sanitization, parameterized queries | Multi-agent monitoring, behavioral anomaly detection |
| Strategy | Block at the perimeter | Assume breach; contain the blast radius |
| Success Metric | Attacks prevented | Time to containment (MTTR) |

Consider what happened in December 2025. Google announced it was deploying a second AI agent specifically to monitor their Gemini-powered Chrome browsing agent after acknowledging the primary agent could be tricked through prompt injection. They call it a "user alignment critic." This is a completely separate model isolated from untrusted content that vets every action before execution.

It's pretty meta. One of the world's leading AI companies, with unlimited resources for security engineering, concluded that the most practical defense against prompt injection in their AI agent is another AI agent watching the first one. To us, this means Google isn't treating this as a temporary workaround. They're building detection and response into the architecture. They've accepted prevention has limits.
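The "second agent vets every action" pattern can be sketched in a few lines. This is not Google's implementation: the critic below is a simple rule-based stand-in for a separate model, and `ProposedAction`, `make_critic`, and `execute_with_critic` are hypothetical names chosen for illustration. The point it shows is the isolation: the critic is built from the user's task alone and never reads the untrusted page content.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ProposedAction:
    tool: str    # hypothetical tool name, e.g. "submit_form"
    target: str  # hostname the action would touch


def make_critic(allowed_hosts: frozenset[str],
                sensitive_tools: frozenset[str]) -> Callable[[ProposedAction], bool]:
    """Build a critic from the user's stated task only.

    The critic never receives page content, so text injected into a page
    has no channel through which to address it.
    """
    def approve(action: ProposedAction) -> bool:
        if action.tool not in sensitive_tools:
            return True  # low-risk actions pass without a host check
        return action.target in allowed_hosts
    return approve


def execute_with_critic(action: ProposedAction,
                        critic: Callable[[ProposedAction], bool],
                        run: Callable[[ProposedAction], str]) -> str:
    """Run the action only if the critic approves; otherwise report a block."""
    if not critic(action):
        return f"blocked: {action.tool} -> {action.target}"
    return run(action)
```

In a real deployment the `approve` function would be a separately hosted model or policy service; the control flow around it stays the same.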

The Three Pillars of the New Playbook

Data and instructions can't be separated in LLMs. That makes perfect prevention impossible. Response speed becomes the primary success metric. Everything that follows flows from the velocity gap between AI-driven threats and human-speed remediation.

Specifically, the NCSC guidance emphasizes "reducing the risk and the impact" of prompt injection attacks through defense-in-depth strategies. For CISOs, this translates to a three-pillar operational framework that shifts from prevention-only to resilience-first thinking.

Pillar 1: Assume Breach for AI Systems

Zero Trust principles have become standard for network security. The same assumption needs to apply to AI deployments. Assume your LLMs will be successfully attacked, and design accordingly.

In practice, this looks like continuous monitoring of AI agent behavior (not just perimeter defense), behavioral anomaly detection that identifies when an agent's actions deviate from expected patterns, and agent-to-agent attack scenarios in your threat model. That last point is particularly important—researchers recently discovered that one AI agent can inject malicious instructions into conversations with other agents, hiding them among benign requests and responses.
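As a rough illustration of behavioral anomaly detection, the sketch below learns which tools an agent normally calls and flags calls that are rare or unseen in that baseline. The class name `ToolBaseline`, the tool names, and the 1% threshold are assumptions made for the example; production systems would use richer features than call frequency.

```python
from collections import Counter


class ToolBaseline:
    """Frequency baseline of an agent's tool calls (illustrative only)."""

    def __init__(self, history: list[str], min_share: float = 0.01):
        if not history:
            raise ValueError("history must contain at least one tool call")
        counts = Counter(history)
        total = sum(counts.values())
        # Share of historical calls that went to each tool.
        self.share = {tool: n / total for tool, n in counts.items()}
        self.min_share = min_share

    def anomalous_calls(self, session: list[str]) -> list[str]:
        """Return calls whose tool is unseen or rarer than min_share."""
        return [t for t in session if self.share.get(t, 0.0) < self.min_share]
```

An agent whose history is all `search` and `read_doc` calls would have a sudden `export_contacts` call flagged, which is the kind of deviation worth routing to containment.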

The assume-breach mindset shifts security investment from "How do we keep attackers out?" to "How quickly can we detect and contain incidents when they occur?"

Pillar 2: Defense-in-Depth Layering

The NCSC's guidance explicitly recommends multiple defensive layers. While no single layer prevents all attacks, the combination significantly reduces both likelihood and impact.

Input validation reduces the attack surface by sanitizing and validating prompts before they reach the model. This won't catch everything, but it catches the obvious attempts.
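A minimal sketch of that first layer, assuming a small hand-written pattern list and a length cap (both hypothetical values, not a vetted ruleset). It normalizes Unicode and strips invisible format characters before matching, since those are a common way to slip a phrase past a naive filter.

```python
import re
import unicodedata

# Hypothetical patterns; a real list would be broader and regularly updated.
SUSPICIOUS_PATTERNS = [
    ("override_instructions",
     re.compile(r"ignore (all |any )?(previous|prior|above) instructions", re.I)),
    ("override_system_prompt",
     re.compile(r"disregard (the )?(system|developer) prompt", re.I)),
]
MAX_PROMPT_CHARS = 4000  # assumed limit for this example


def screen_prompt(text: str) -> tuple[bool, list[str]]:
    """Return (accepted, reasons). Catches obvious attempts only."""
    normalized = unicodedata.normalize("NFKC", text)
    # Drop control and format characters (e.g. zero-width spaces),
    # keeping ordinary newlines and tabs.
    normalized = "".join(
        ch for ch in normalized
        if unicodedata.category(ch) not in ("Cf", "Cc") or ch in "\n\t"
    )
    reasons = []
    if len(normalized) > MAX_PROMPT_CHARS:
        reasons.append("too_long")
    for label, pattern in SUSPICIOUS_PATTERNS:
        if pattern.search(normalized):
            reasons.append(label)
    return (not reasons, reasons)
```

Pattern matching like this is easy to evade with paraphrase, which is exactly why it is only one layer among several.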

Output filtering monitors model responses for signs of prompt injection success—unexpected data access, privilege escalation attempts, or instructions to perform unauthorized actions.
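One concrete form of output filtering is scanning responses for data that should never leave the system. The sketch below redacts payment card numbers, using the Luhn checksum to avoid redacting arbitrary digit runs such as order IDs. The function names and the `[REDACTED]` placeholder are choices made for this example.

```python
import re

# 13 to 19 digits, optionally separated by single spaces or hyphens.
CARD_CANDIDATE = re.compile(r"\b\d(?:[ -]?\d){12,18}\b")


def luhn_valid(digits: str) -> bool:
    """Luhn checksum: double every second digit from the right."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def filter_output(text: str) -> tuple[str, int]:
    """Redact Luhn-valid card numbers; return (clean_text, redaction_count)."""
    hits = 0

    def redact(match: re.Match) -> str:
        nonlocal hits
        digits = re.sub(r"\D", "", match.group())
        if luhn_valid(digits):
            hits += 1
            return "[REDACTED]"
        return match.group()

    return CARD_CANDIDATE.sub(redact, text), hits
```

A nonzero redaction count is also a useful detection signal: it suggests the model was steered toward data it should not have surfaced.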

Privilege scoping limits the blast radius by giving AI agents the minimum permissions needed for their function. The Microsoft Copilot Studio breach succeeded partly because agents had excessive access to business systems.
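In code, privilege scoping can be as simple as an explicit allowlist checked at the point where an agent invokes a tool. The names here (`AgentScope`, `call_tool`, the tool registry) are hypothetical and not tied to any specific agent framework.

```python
from dataclasses import dataclass
from typing import Any, Callable


class PermissionDenied(Exception):
    """Raised when an agent requests a tool outside its scope."""


@dataclass(frozen=True)
class AgentScope:
    name: str
    allowed_tools: frozenset[str]


def call_tool(scope: AgentScope,
              tool: str,
              registry: dict[str, Callable[..., Any]],
              **kwargs: Any) -> Any:
    """Invoke a tool only if it is on the agent's allowlist (deny by default)."""
    if tool not in scope.allowed_tools:
        raise PermissionDenied(f"{scope.name} may not call {tool}")
    return registry[tool](**kwargs)
```

With this shape, a support agent scoped to `lookup_order` cannot issue a refund even if an injected prompt convinces the model to try.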

Rate limiting and circuit breakers slow down and contain attacks by limiting how many actions an agent can take in a given timeframe and automatically stopping agents that exhibit suspicious behavior patterns.
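The two mechanisms combine naturally in one small guard object. The sketch below uses a sliding-window rate limit plus a breaker that trips after repeated suspicious actions; the class name and thresholds are assumptions, and the caller is expected to supply the `suspicious` flag from its own detection logic. Time is passed in explicitly so the behavior is easy to test.

```python
from collections import deque


class AgentCircuitBreaker:
    """Sliding-window rate limit plus a trip-once circuit breaker."""

    def __init__(self, max_actions: int, window_seconds: float, max_violations: int):
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self.max_violations = max_violations
        self._timestamps: deque[float] = deque()
        self._violations = 0
        self.open = False  # once open, the agent stays stopped until reset

    def allow(self, now: float, suspicious: bool = False) -> bool:
        if self.open:
            return False
        if suspicious:
            # Suspicious actions are always denied and count toward tripping.
            self._violations += 1
            if self._violations >= self.max_violations:
                self.open = True
            return False
        # Forget actions that have aged out of the window.
        while self._timestamps and now - self._timestamps[0] >= self.window_seconds:
            self._timestamps.popleft()
        if len(self._timestamps) >= self.max_actions:
            return False  # rate limit reached for this window
        self._timestamps.append(now)
        return True
```

Keeping the breaker open until a human or an automated playbook resets it is a deliberate choice: it trades availability for containment.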

Recent attacks demonstrate why multiple layers matter. Indirect prompt injection attacks now target common LLM data sources, poisoning the documents, web pages, and other context that models retrieve. A single defensive layer won't catch these sophisticated attacks; defense-in-depth provides redundancy when individual controls fail.

Pillar 3: Rapid Resolution Capability

Most security strategies fall short here. Defense-in-depth reduces risk, but attacks will succeed. The NCSC is clear about that. Organizations need automated resolution capability that operates at the tempo of modern threats, not the pace of manual review cycles.

Traditional AppSec workflows can't keep pace. According to industry benchmarks, organizations face 100,000+ vulnerability backlogs with a 252-day mean time to remediation. When AI agents execute thousands of operations per second and attackers exploit vulnerabilities hours after disclosure, waiting months for fixes creates unbounded incident response costs and SLA degradation.

Rapid resolution capability means automated incident response that contains breaches without waiting for human analysis, and security responses that keep pace with AI-driven threats rather than manual processes that take days or weeks. The key metric shifts from "percentage of attacks prevented" to MTTR. When prevention is imperfect, speed of response determines impact.
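Tracking MTTR requires only two timestamps per incident. The sketch below assumes MTTR is measured from detection to resolution; teams that measure from disclosure or from ticket creation would swap in a different start time. The function name is illustrative.

```python
from datetime import datetime, timedelta


def mean_time_to_resolve(incidents: list[tuple[datetime, datetime]]) -> timedelta:
    """Average of (resolved - detected) across incidents."""
    if not incidents:
        raise ValueError("at least one incident is required")
    total = sum(
        (resolved - detected for detected, resolved in incidents),
        timedelta(),  # start value so sum() works on timedeltas
    )
    return total / len(incidents)
```

Reporting this number per risk tier, rather than as one global average, keeps a few long-running low-severity tickets from hiding fast or slow handling of critical ones.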

A recent CrowdStrike survey found 76% of cybersecurity teams report struggling to keep pace with attacks that have increased in both volume and sophistication. The speed mismatch between AI-driven threats and manual security responses creates an untenable gap—one that only automated resolution can close.

The Manual Remediation Gap: Where Traditional AppSec Breaks Down

The NCSC's guidance makes clear that organizations must "reduce the impact" when prompt injection attacks succeed. But this is precisely where traditional AppSec workflows fail: they excel at detection, but remediation remains overwhelmingly manual.

Consider the typical enterprise security stack. According to industry research, the average organization deploys more than five security scanning tools—SAST, SCA, DAST, IAST, vulnerability scanners—creating comprehensive visibility into security risks across codebases. These tools generate thousands of findings daily. What they don't do is fix anything. This detection-resolution gap is why 81% of teams ship vulnerable code—not negligence, but capacity constraints.

The result: security debt accumulates faster than teams can address it, a productivity tax on both security and development teams that slows AI product launches. Now add AI systems to the equation. When new prompt injection attack vectors, such as those recently reported through MCP sampling, emerge on a monthly basis, manual remediation becomes a structural bottleneck.

The gap is particularly acute because AI security requires specialized knowledge. Fixing prompt injection vulnerabilities isn't as simple as updating a dependency or patching a known CVE. It requires understanding how the specific LLM processes context and instructions, where prompt construction occurs in the application code, which input validation strategies work for your model architecture, and how to implement output filtering without breaking functionality.

AppSec teams aren't failing here. They're working with legacy constraints. Most SOCs weren't built for LLM-specific telemetry. The playbooks, training, and tooling that handle traditional vulnerabilities don't transfer directly to AI security challenges. Adding AI-specific issues to a backlog already stretched thin guarantees that critical vulnerabilities persist longer than risk tolerance allows.

The developer trust problem compounds the issue. When security tools generate findings that developers can't easily act on, trust erodes. Developers learn that generic suggested fixes rarely account for codebase-specific conventions, business logic, or security policies. They review the suggestion, find it doesn't work in their context, and the vulnerability remains unaddressed.

The manual remediation gap in one sentence: comprehensive detection capability without corresponding resolution capability. For AI security, where the NCSC explicitly acknowledges prevention limits, closing this gap determines whether you achieve resilient operations or remain stuck with persistent vulnerability exposure.

If your detection operates at machine speed but your remediation operates at human speed, the resulting security debt becomes a structural bottleneck for AI product launches, and a compounding liability that grows with every deployment.

2026 AI Security KPIs to Track:

• Resolution Tempo Ratio: time-to-remediation divided by time-to-detection (target: below 10:1)

• Developer Friction Score: Hours spent per developer per month on security-related rework

• AI Coverage Gap: % of AI deployments with full defensive stack vs partial/none
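Two of these KPIs reduce to simple arithmetic, sketched below under stated assumptions. The ratio is read here as remediation time divided by detection time, which is one interpretation of the KPI; the set of controls that counts as a "full defensive stack" is taken from the four layers named in Pillar 2 and is an assumption of this example.

```python
# Assumed definition of a "full defensive stack" for this example.
REQUIRED_CONTROLS = frozenset(
    {"input_validation", "output_filtering", "privilege_scoping", "rate_limiting"}
)


def resolution_tempo_ratio(hours_to_detect: float, hours_to_remediate: float) -> float:
    """Remediation time relative to detection time (lower is better)."""
    if hours_to_detect <= 0:
        raise ValueError("hours_to_detect must be positive")
    return hours_to_remediate / hours_to_detect


def coverage_gap_percent(deployments: dict[str, set[str]]) -> float:
    """Percentage of AI deployments missing at least one required control."""
    if not deployments:
        raise ValueError("at least one deployment is required")
    uncovered = sum(
        1 for controls in deployments.values()
        if not REQUIRED_CONTROLS <= controls  # subset test
    )
    return 100.0 * uncovered / len(deployments)
```

For example, detection in 2 hours with remediation in 12 hours gives a ratio of 6.0, inside a 10:1 target.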

Closing the Gap: What Resolution Capability Looks Like

The manual remediation gap determines whether you achieve resilience or remain perpetually vulnerable. Organizations serious about AI security need to close it. Three characteristics matter:

Prioritization that matches reality. Not every vulnerability flagged by scanners represents actual risk. Effective resolution starts with determining which vulnerabilities are exploitable in your deployment context, distinguishing between theoretical attack vectors and code paths attackers can actually reach. This alone can eliminate the majority of noise that buries real issues.
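The filtering step described above can be sketched as follows. The `Finding` fields are hypothetical: `reachable` and `untrusted_input` stand in for whatever reachability analysis your tooling provides, and `severity` is assumed to be a CVSS-style score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    finding_id: str
    severity: float        # assumed CVSS-style score, 0.0 to 10.0
    reachable: bool        # is the vulnerable code path callable in this deployment?
    untrusted_input: bool  # can attacker-controlled input reach it?


def prioritize(findings: list[Finding]) -> list[Finding]:
    """Drop unreachable findings, then rank by exposure and severity."""
    actionable = [f for f in findings if f.reachable]
    return sorted(
        actionable,
        key=lambda f: (f.untrusted_input, f.severity),
        reverse=True,
    )
```

Note the effect on ordering: a medium-severity issue fed by untrusted input outranks a higher-severity one that attackers cannot reach with their own data, and an unreachable critical finding drops out entirely.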

Fixes that work in your environment. Generic suggested fixes fail because they don't understand codebase conventions, security policies, or business logic. Whether you're fixing prompt injection, input validation gaps, or privilege escalation paths, the fix needs to work in your code, not just in theory.

Speed that matches the threat. When attackers exploit new vulnerabilities within hours of disclosure, waiting weeks for manual fixes creates compounding exposure. Resolution capability means converting detection into action at a tempo that matches the threat landscape—and critically, at a pace that doesn't drag down your AI product roadmap.

The SOAR (Security Orchestration, Automation, and Response) market has grown for exactly this reason: security teams recognize that manual processes can't scale to match modern threat velocity. The same principle applies to vulnerability management—the question is whether your resolution capability can match your detection capability.

Practical Implementation: CISO Action Checklist

The strategic shift from prevention-only to resilience-first operations requires concrete action. Here's how to operationalize the new playbook in three phases:

Immediate Actions (Next 30 Days)

Visibility:

• [ ] Map all sanctioned AI tools (coding assistants, enterprise LLM deployments)

• [ ] Identify shadow AI (unapproved tools employees are using)

• [ ] Document privilege inheritance for each deployment (APIs, data access)

Quick Wins:

• [ ] Set risk acceptance criteria by AI use case (not all deployments need equal rigor)

• [ ] Document containment procedures (who does what, escalation paths)

• [ ] Assess your current time from vulnerability discovery to deployed fix

Shadow AI is particularly challenging—employees deploy AI tools without understanding security implications, creating risk surfaces you don't know exist. This AI governance gap is where 98% of organizations already operate without policies. Start here.

Strategic Initiatives (60-90 Days)

Governance:

• [ ] Establish a cross-functional AI Risk Council (Security, Legal, Privacy, Engineering)

• [ ] Define approval workflows for new AI deployments

• [ ] Create AI-specific risk classification criteria

• [ ] Set review cadence for AI security posture

Response Readiness:

• [ ] Establish MTTR targets for AI vulnerabilities by risk tier

• [ ] Run tabletop exercises for prompt injection scenarios

• [ ] Define communication protocols for AI security incidents

• [ ] Assess defensive controls across deployments (input validation, output filtering, rate limiting)

Ongoing Assessment

Resolution Tempo questions to revisit quarterly:

• What percentage of flagged vulnerabilities represent actual exploitable risk?

• Do developers trust and act on security findings, or deprioritize them?

• Can your current process handle a sudden spike in AI-related vulnerabilities?

• Is your remediation bottleneck costing developer productivity and delaying AI launches?

The organizations handling AI security most effectively have compressed the time from detection to resolution—through process improvements, tooling investments, or both. The metric that matters most isn't how many vulnerabilities you detect; it's how quickly you can resolve them.

Prevention + Resolution = Resilience

The NCSC's declaration that prompt injection may never be fully mitigated doesn't mean abandoning prevention. It means accepting prevention limits and building response capabilities to match.

The new CISO playbook for AI security recognizes this reality. You still implement input validation, output filtering, privilege scoping, and all the defensive layers that reduce attack likelihood. But you also accept that sophisticated attackers will eventually succeed—and you prepare accordingly with rapid detection, automated response, and remediation that operates at the tempo of modern threats.

Organizations that embrace this shift achieve true AI resilience: systems that resist attacks through defense-in-depth while responding effectively when defenses are breached. Prevention-only thinking promises perfect security while delivering growing vulnerability backlogs. The resilience approach accepts imperfect prevention and compensates with speed.

The parallel to SQL injection is instructive. The industry didn't solve SQL injection by making it impossible. We reduced its impact through better frameworks, prepared statements, parameterized queries, and—critically—rapid response when vulnerabilities are discovered. Organizations that adopted these practices achieved strong security outcomes despite the vulnerability's persistence.

For prompt injection, the path forward is similar. Layer your defenses, assume breach, and respond fast. The playbook is pragmatic security engineering applied to AI systems. Prevention reduces risk. Automated resolution manages the residual risk that prevention can't eliminate. Together, they create the resilience that AI-driven enterprises require.

What's your current time-to-resolution for security vulnerabilities, and how does your team plan to adapt as AI systems become more central to your operations?

Sources and Further Reading

Government Guidance

• Prompt Injection Can't Be Fully Mitigated, NCSC Says Reduce Impact Instead - Security Boulevard, Dec 12, 2025

• UK National Cyber Security Centre (NCSC) - LLM Security Guidance

Real-World Incidents

• Microsoft Copilot Studio Security Risk: How Simple Prompt Injection Leaked Credit Cards - Security Boulevard, Dec 11, 2025

• Gemini for Chrome Gets a Second AI Agent to Watch Over It - CSO Online, Dec 9, 2025

• Best of 2025: Indirect Prompt Injection Attacks Target Common LLM Data Sources - Security Boulevard, Dec 29, 2025

• New Prompt Injection Attack Vectors Through MCP Sampling - r/netsec, Dec 8, 2025

Industry Research

• CISOs Are Spending Big and Still Losing Ground - Help Net Security, Dec 8, 2025

• Survey: Cybersecurity Teams Struggling to Keep Pace in the Age of AI - Security Boulevard, Oct 2025

• From Agent2Agent Prompt Injection to Runtime Self-Defense - Security Boulevard, Dec 23, 2025

Related Content

• Top 5 Real-World AI Security Threats Revealed in 2025 - CSO Online, Dec 29, 2025