The Agentic AI Governance Gap: A Strategic Framework for 2026

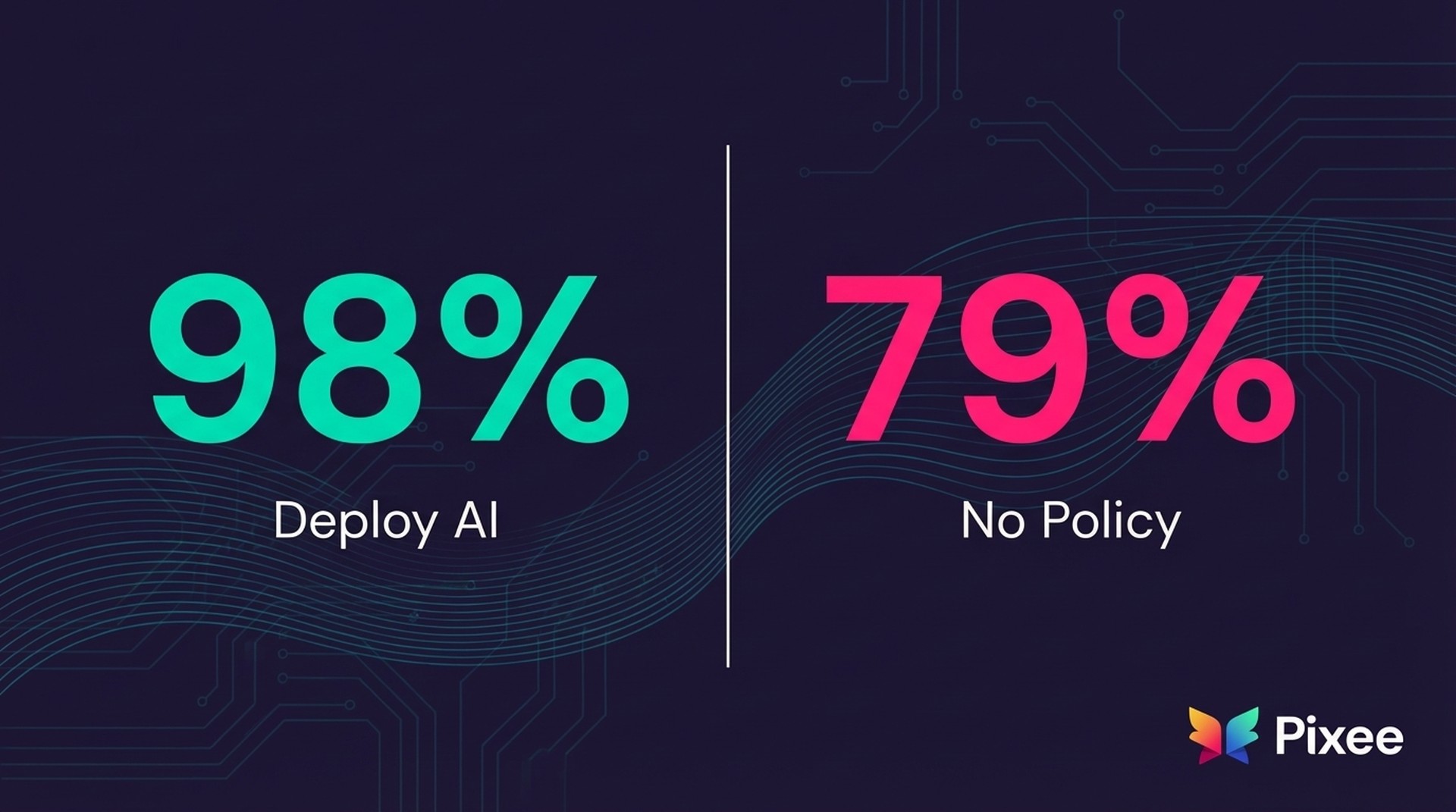

While 98% of enterprises are deploying agentic AI, 79% operate without formal security policies. This governance gap is creating a "Security Debt Trap" where AI-generated vulnerabilities accumulate 3x faster than human teams can remediate them.

I. The Strategic Risk Pivot

A December 2025 study from Enterprise Management Associates reveals the defining challenge for security leadership in 2026: 98% of organizations with 500+ employees are deploying agentic AI, yet 79% lack formal security policies for these autonomous tools.

Global 2000 organizations must move beyond "LLM chatbot" security and address Agentic Security—where software makes autonomous decisions, accesses production systems, and generates code at machine speed.

The financial stakes are clear: IBM research shows breaches involving ungoverned "shadow AI" carry a $670,000 cost premium over breaches involving sanctioned AI tools. This is a material liability that boards and regulators are beginning to scrutinize.

II. High-Impact AppSec Risks for G2000 Leadership

1. The Security Debt Trap

Recent analysis shows AI-generated code contains approximately 1.7x more defects than human-written code.

A typical organization managing 50 applications will accumulate roughly 7,000 new vulnerabilities annually.
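As a rough sanity check on scale, the figure above can be broken down per application and per month. This is a minimal sketch using only the numbers quoted in the text; the even spread across applications and months is an assumption made for illustration, not something the underlying research states.

```python
# Back-of-the-envelope breakdown of the figures quoted above.
# Assumption: vulnerabilities are spread evenly across applications and months.
APPLICATIONS = 50
NEW_VULNS_PER_YEAR = 7_000

per_app_per_year = NEW_VULNS_PER_YEAR / APPLICATIONS
per_month_total = NEW_VULNS_PER_YEAR / 12
per_app_per_month = per_app_per_year / 12

print(f"Per application per year: {per_app_per_year:.0f}")
print(f"Across the portfolio per month: {per_month_total:.0f}")
print(f"Per application per month: {per_app_per_month:.1f}")
```

Under that assumption, the portfolio-wide number works out to 140 new vulnerabilities per application per year, or close to 600 per month across all 50 applications.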

IBM research confirms that 97% of organizations experiencing AI-related breaches lacked proper access controls. The governance gap directly correlates with breach risk.

2. The Identity Crisis (Non-Human Actors)

Traditional IAM cannot govern agents that operate at machine speed. BleepingComputer's "The Real-World Attacks Behind OWASP Agentic AI Top 10" documents a rise in Agency Abuse, in which attackers manipulate an agent's logic so that it grants itself permissions or exfiltrates data, bypassing traditional perimeter controls.

Documented exploits include:

• OpenAI Codex CLI: Remote code execution (CVSS 9.8) through malicious repository content

• Google Antigravity: Privilege escalation via manipulated prompts causing autonomous permission grants

• Claude Code: Prompt injection attacks causing systematic credential exfiltration

As CSO Online reports: "Without proper authentication, hijacked agents can cause widespread damage by tricking other agents."

The OWASP Top 10 for Agentic Applications highlights "Excessive Agency" and "Tool Misuse" as critical risks. Security researchers predict these will evolve into "AI agentic warfare" by 2026—autonomous agents conducting multi-step exploits faster than human defenders can respond.
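One common way to limit Excessive Agency is to keep permission decisions outside the agent itself. The sketch below is illustrative only: it assumes a deny-by-default allowlist assigned by a human administrator, and the names `AgentIdentity`, `authorize`, and the tool names are hypothetical rather than taken from OWASP or any specific product.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentIdentity:
    """A non-human identity with a fixed, externally assigned tool allowlist."""
    name: str
    allowed_tools: frozenset[str] = field(default_factory=frozenset)


class ToolDenied(Exception):
    """Raised when an agent requests a tool outside its allowlist."""


def authorize(agent: AgentIdentity, tool: str) -> None:
    # Deny by default: anything not explicitly granted is refused.
    if tool not in agent.allowed_tools:
        raise ToolDenied(f"{agent.name} is not permitted to call {tool!r}")


# Hypothetical agent and tool names, for illustration only.
reviewer = AgentIdentity("code-review-agent", frozenset({"read_repo", "post_comment"}))

authorize(reviewer, "read_repo")  # allowed, no exception raised
try:
    authorize(reviewer, "grant_permission")  # the self-escalation case
except ToolDenied as exc:
    print(exc)
```

Because the identity is frozen and the allowlist is set at construction time, a manipulated agent cannot widen its own permissions through this path; any change has to come from whoever creates the identity.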

III. Minimum Viable Governance (MVG) Framework

This framework is aligned with the NIST AI RMF and emerging EU AI Act requirements.

The Cloud Security Alliance found that only about one quarter of organizations have comprehensive AI security governance in place. The following framework provides a practical starting point:

Control 1: Continuous Discovery (Visibility)

Inventory all AI coding assistants and map data access patterns. You cannot secure what you cannot see. Discover shadow AI through network monitoring, identify what code and credentials each tool can access, and implement continuous discovery as AI tools evolve.
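A discovery inventory can start as a simple structured record per tool. The following is a minimal sketch under stated assumptions: the `AITool` fields, the tool names, and the `SENSITIVE` access categories are all hypothetical placeholders, and a real inventory would be populated from network monitoring rather than hard-coded.

```python
from dataclasses import dataclass


@dataclass
class AITool:
    name: str
    sanctioned: bool      # approved by security, or discovered as shadow AI
    accesses: set[str]    # what the tool can reach, e.g. {"source_code"}


# Hypothetical inventory entries, for illustration only.
inventory = [
    AITool("assistant-a", sanctioned=True, accesses={"source_code"}),
    AITool("assistant-b", sanctioned=False, accesses={"source_code", "credentials"}),
    AITool("assistant-c", sanctioned=False, accesses={"source_code"}),
]

# Assumed set of access categories that warrant immediate attention.
SENSITIVE = {"credentials", "production_data"}


def shadow_ai(tools):
    """Tools in use without formal approval."""
    return [t for t in tools if not t.sanctioned]


def high_risk(tools):
    """Unsanctioned tools that can also reach sensitive assets."""
    return [t for t in tools if not t.sanctioned and t.accesses & SENSITIVE]


print([t.name for t in shadow_ai(inventory)])
print([t.name for t in high_risk(inventory)])
```

Separating "unsanctioned" from "unsanctioned with sensitive access" gives a first prioritization: the second list is where credential exposure is most likely.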

Control 2: IDE-Integrated Scanning (Velocity)

Move security analysis "left" into the IDE. Security must be invisible to the developer's workflow but visible to the CISO. Real-time vulnerability detection catches flaws before they reach the main branch—not days later in a scheduled security review.
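To make the idea of scanning at edit time concrete, here is a deliberately simple sketch of a check that could run on the text of an open file. It is a toy pattern matcher, not a substitute for a real static analysis engine; the rule names and the `scan` function are assumptions introduced for illustration, and the key in the example is a fabricated placeholder.

```python
import re

# Illustrative rules only; a production scanner would use a real analysis engine.
RULES = {
    "hardcoded-aws-access-key": re.compile(r"AKIA[0-9A-Z]{16}"),
    "use-of-eval": re.compile(r"\beval\("),
}


def scan(source: str) -> list[tuple[int, str]]:
    """Return (line_number, rule_id) for every rule match in the given text."""
    findings = []
    for line_number, line in enumerate(source.splitlines(), start=1):
        for rule_id, pattern in RULES.items():
            if pattern.search(line):
                findings.append((line_number, rule_id))
    return findings


# Fabricated placeholder in the shape of an access key ID, not a real credential.
fake_key = "AKIA" + "EXAMPLE0" * 2
snippet = f'key = "{fake_key}"\nresult = eval(user_input)\n'

for line_number, rule_id in scan(snippet):
    print(f"line {line_number}: {rule_id}")
```

The same findings list could feed both an inline editor warning for the developer and an aggregate report for security leadership, which is the dual visibility the control describes.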

Control 3: Automated Remediation Loop (Scaling)

Adopt automated fix generation to match the 17.5/month vulnerability arrival rate. Shift your team from writing patches to approving them. When fix proposals arrive pre-written and pre-tested, remediation timelines compress from weeks to hours.
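The shift from writing patches to approving them can be sketched as a small triage step in front of a human reviewer. This is an assumed workflow for illustration: the `FixProposal` fields, the `triage` and `approve` functions, and the identifiers are hypothetical and do not describe any particular remediation product.

```python
from dataclasses import dataclass


@dataclass
class FixProposal:
    vulnerability_id: str
    patch: str
    tests_passed: bool   # result of running the project's tests against the patch


def triage(proposals):
    """Split proposals into those ready for human approval and those needing rework."""
    ready = [p for p in proposals if p.tests_passed]
    rework = [p for p in proposals if not p.tests_passed]
    return ready, rework


def approve(proposal, reviewer):
    """Record a human approval; the reviewer approves rather than authors the patch."""
    return {"vulnerability_id": proposal.vulnerability_id, "approved_by": reviewer}


# Hypothetical proposals, for illustration only.
proposals = [
    FixProposal("VULN-1", "parameterize SQL query", tests_passed=True),
    FixProposal("VULN-2", "upgrade vulnerable dependency", tests_passed=False),
    FixProposal("VULN-3", "escape template output", tests_passed=True),
]

ready, rework = triage(proposals)
approvals = [approve(p, reviewer="security-engineer") for p in ready]
print(len(approvals), "approved;", len(rework), "returned for rework")
```

Only proposals that already pass tests reach the reviewer, so human time goes to judgment calls rather than to authoring or debugging patches.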

Control 4: Policy-as-Code (Compliance)

Maintain immutable audit trails of AI-generated code to satisfy regulatory reporting and forensic requirements. Use policy-as-code approaches to enforce governance automatically rather than through manual review. As AI tools gain new capabilities, your governance controls must evolve.
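The two ideas in this control, automatic policy evaluation and a tamper-evident record, can be combined in a few lines. The sketch below is one possible approach, not a prescribed implementation: the `POLICY` keys, the change record fields, and the hash-chained log are assumptions chosen for illustration. A hash chain makes tampering detectable; it does not by itself prevent deletion, so real deployments would also need write-once storage.

```python
import hashlib
import json

# Hypothetical policy expressed as data rather than as a manual checklist.
POLICY = {
    "require_security_scan": True,
    "require_human_approval": True,
}

GENESIS = "0" * 64  # placeholder "previous hash" for the first log entry


def violations(change: dict) -> list[str]:
    """Evaluate a change record against POLICY and list what is missing."""
    found = []
    if POLICY["require_security_scan"] and not change.get("scanned", False):
        found.append("missing security scan")
    if POLICY["require_human_approval"] and not change.get("approved_by"):
        found.append("missing human approval")
    return found


def _digest(event: dict, prev_hash: str) -> str:
    payload = json.dumps({"event": event, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(payload.encode()).hexdigest()


def append_entry(log: list, event: dict) -> dict:
    """Append an event whose hash covers the previous entry's hash."""
    prev_hash = log[-1]["hash"] if log else GENESIS
    entry = {"event": event, "prev": prev_hash, "hash": _digest(event, prev_hash)}
    log.append(entry)
    return entry


def verify(log: list) -> bool:
    """Recompute the chain; any edited or reordered entry breaks it."""
    prev_hash = GENESIS
    for entry in log:
        if entry["prev"] != prev_hash or entry["hash"] != _digest(entry["event"], prev_hash):
            return False
        prev_hash = entry["hash"]
    return True


# Hypothetical AI-generated change that was scanned but never approved.
change = {"id": "change-001", "ai_generated": True, "scanned": True, "approved_by": None}

audit_log = []
append_entry(audit_log, {"change": change["id"], "violations": violations(change)})
append_entry(audit_log, {"change": change["id"], "action": "merge blocked"})

print(violations(change))
print(verify(audit_log))
```

Because each entry's hash depends on the one before it, altering an earlier record after the fact causes `verify` to fail, which is the property auditors and forensic teams rely on.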

Executive 90-Day Priority Checklist

Research confirms that governance maturity defines enterprise AI confidence. Organizations with comprehensive AI security governance report feeling prepared for AI-driven threats; those without it express uncertainty and concern.

The ROI calculation is straightforward:

• Cost of inaction: $670K breach premium + ~7,000 annual vulnerability accumulation + regulatory exposure

• Cost of governance: Fraction of a single breach, with compound risk reduction over time

Sources and Further Reading

Governance Research

• CSA Study: Mature AI Governance Translates Into Responsible AI Adoption - Security Boulevard, Dec 19, 2025

• Governance Maturity Defines Enterprise AI Confidence - Help Net Security, Dec 24, 2025

• EMA Study: "Agentic AI Identities: The Unsecured Frontier of Autonomous Operations" - Dec 2, 2025

AI Adoption and Deployment

• Agentic AI Breaks Out of the Lab and Forces Enterprises to Grow Up - SD Times, Dec 30, 2025

• Agentic AI Already Hinting at Cybersecurity's Pending Identity Crisis - CSO Online, Dec 23, 2025

• The Real-World Attacks Behind OWASP Agentic AI Top 10 - BleepingComputer, Dec 29, 2025

AI-Generated Code Security

• Analysis Surfaces Rising Wave of Software Defects Traced to AI Coding Tools - DevOps.com

• Surprise! Everybody Uses AI Tools for Software Development, Few Do So Securely - DevOps.com

• LLM Vulnerability Patching Skills Remain Limited - Help Net Security, Dec 11, 2025

• LLMs Can Assist with Vulnerability Scoring, But Context Still Matters - Help Net Security, Dec 26, 2025

Related Content

• Edge Security Is Not Enough: Why Agentic AI Moves the Risk Inside Your APIs - Security Boulevard, Dec 30, 2025

• The ROI of Agentic AI AppSec - Checkmarx, Dec 29, 2025