Why AI Can't Audit Its Own Code: Replit's Research on Deterministic Security

Replit just published research proving something security engineers already suspected: AI can't reliably audit its own code.

Their team tested "security scans" across vibe coding platforms—the AI-powered development environments promising full-stack applications in minutes. Here's what they found:

Dependency vulnerabilities: 0% detection by AI-only scanners. Not low detection. Zero.

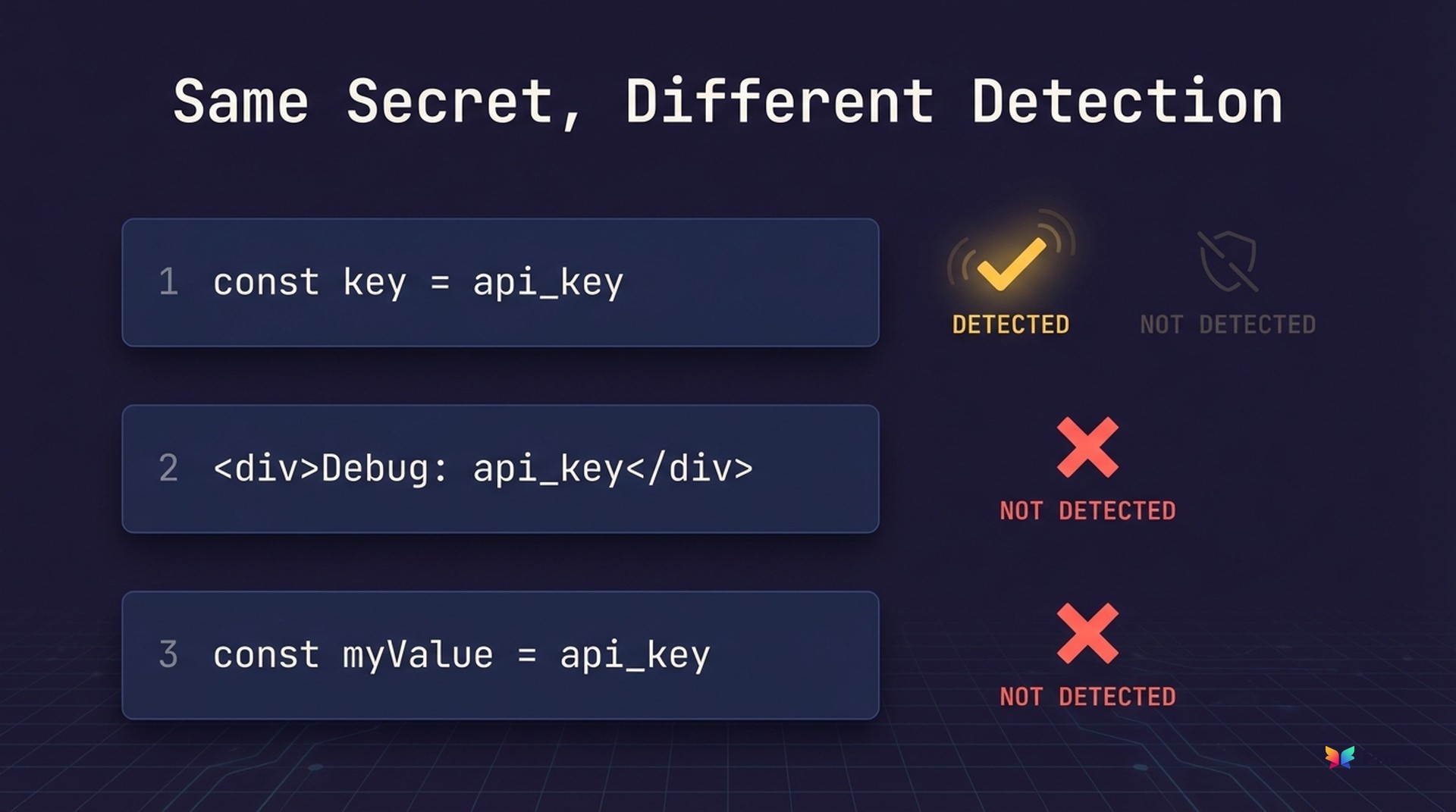

Secrets embedded in JSX literals: Missed entirely. Move that same secret to a variable assignment? Sometimes detected. Change the variable name from key to myValue? Gone again.

Same code, same scanner, 10 runs: Different results each time.

That last finding is the architectural problem. Security scanning needs to be deterministic. When the same input produces different outputs, you don't have a security tool—you have a slot machine.

The Nondeterminism Problem

Large language models analyze code through interpretation, not structural pattern matching. They judge via statistical associations, perceived intent, and natural language cues rather than deterministic rules.

In most contexts, non-determinism works fine. For security scanning, it's a fundamental limitation.

Replit's experiments tested code variants that were functionally identical but syntactically different:

Identical vulnerability. Same security risk. Different detection outcomes based on syntax that has nothing to do with exploitability.

Traditional static analysis tools—SAST scanners like CodeQL, Semgrep, or SonarQube—operate differently. They parse source code into an abstract syntax tree and match patterns against structural rules. Same code, same rule set, same findings. Every time. Regardless of variable names, formatting, or how "obvious" the vulnerability appears.

This determinism isn't just convenient—it's what makes security scanning auditable. When a deterministic scanner makes a mistake, you can trace the exact rule logic that failed and fix it. When an AI scanner makes a mistake, you're debugging statistical inference.

Third-party validation confirms what practitioners have observed: production AI systems making security decisions require validation that most "AI-powered" tools skip entirely.

What AI-Only Scanners Can't See

Replit's research identified three specific failure modes where AI-only scanning breaks down.

Dependency Vulnerabilities: A Complete Blind Spot

AI-only scanners detected 0% of dependency CVEs in Replit's tests.

This isn't surprising. Identifying a vulnerable dependency requires:

- Parsing the lockfile to identify exact package versions

- Querying a vulnerability database (NVD, GitHub Advisory, OSV) in real-time

- Matching version ranges to affected versions

- Determining whether the vulnerable code path is reachable in your application

None of this is interpretive. It's database lookup and version comparison—exactly what deterministic tools excel at and LLMs can't reliably perform.

Replit tested this specifically with CVE-2024-24558, a HIGH-severity cross-site scripting vulnerability in @tanstack/react-query-next-experimental@5.17.0. The AI-only scanners didn't flag it. They couldn't—the vulnerability exists in a version range documented in a database the LLM has no real-time access to.

77% of your application code comes from dependencies you didn't write. If your security scanning can't see dependency vulnerabilities, it's missing the majority of your attack surface.

Syntax Sensitivity: When Detection Depends on Formatting

Embedding secrets directly into JSX literal text bypassed AI-only scanners entirely. The same secret stored in a variable assignment was sometimes detected.

This happens because LLMs detect secrets through pattern recognition of what "looks like" a credential. A variable named key or api_key triggers the association. A raw string inside JSX markup doesn't.

Deterministic secret scanners use different approaches: entropy analysis (high-randomness strings), pattern matching (known credential formats like AWS keys), and structural rules (assignments to variables matching specific names). These methods catch secrets regardless of where they appear in code—variable, literal, configuration file, or comment.

Developers don't always follow the patterns LLMs expect. Debugging code, rapid prototyping, copy-paste from documentation—these real-world scenarios produce code that doesn't match the "tidy" patterns in LLM training data.

Prompt Sensitivity: Coverage That Depends on Asking

Replit's research revealed that AI-only scanner coverage depends heavily on prompt phrasing. When explicitly instructed to "analyze the code for XSS and unsafe HTML rendering," the scanners sometimes found dangerouslySetInnerHTML usage. Under generic prompts like "review this project for security issues," detection became inconsistent.

This creates a structural problem: AI-only scanner coverage is bounded by what the user already knows to ask about. Security scanning should find the vulnerabilities you don't know to look for. That's the point.

Deterministic tools don't have this limitation. Their coverage is defined by their rule set, applied uniformly to all code. You don't need to know about every vulnerability class to detect them—the scanner's rules do.

This Isn't Just Replit's Finding

Replit's research aligns with multiple independent studies reaching the same conclusion.

In January 2026, Tenzai tested five major AI coding platforms and identified 69 vulnerabilities across them. Their finding: AI coding assistants avoid basic security mistakes (the patterns in their training data) but fail at business logic vulnerabilities and context-dependent security issues.

CodeRabbit's December 2025 research analyzed 470 pull requests and found AI-assisted code contains 2.74× more security vulnerabilities than human-only code. The vulnerability patterns cluster around validation and authorization—exactly the areas requiring context outside the immediate code being written.

OX Security captured the dynamic perfectly: AI coding assistants operate like an "army of juniors." They're fast, tireless, and produce syntactically correct code. But they share the junior developer's blind spot—optimizing for the problem they can see, not the security implications they can't access.

The pattern is consistent: AI excels at generating code that solves the immediate problem. AI fails at security analysis requiring exhaustive coverage, version-specific database lookups, or context beyond the code being analyzed.

What Deterministic Tools Get Right

Replit's research makes the counter-case clearly. Across all their code variants—secrets in literals, secrets in variables, renamed variables, XSS via dangerouslySetInnerHTML—deterministic scanners consistently identified the same class of issue.

Why? Because deterministic analysis operates on structural rules, not interpretation:

SAST (Static Application Security Testing): Parses code into an abstract syntax tree. Matches patterns against known vulnerability signatures. A SQL injection is a SQL injection whether the variable is named query, sql, or bananaPhone. The scanner sees the structure, not the name.

SCA (Software Composition Analysis): Reads lockfiles. Queries vulnerability databases. Compares version ranges. No interpretation required—either your version is in the affected range or it isn't.

Secret Detection: Combines entropy analysis (statistically random strings), pattern matching (known formats like AWS access keys), and structural rules (assignments to variables matching known patterns). Catches secrets regardless of syntax.

These tools are deterministic by design. Same input, same output, every time. That's what makes security scanning auditable, testable, and trustworthy.

The three intelligence types needed for effective triage—false positive elimination, business context application, and risk re-scoring—all require deterministic foundations. You can't build consistent triage on stochastic detection.

Where AI Actually Belongs in Security

Replit's conclusion isn't "don't use AI for security." It's more nuanced: LLMs are best used as remediation assistants, not security oracles.

This maps to a hybrid architecture:

Deterministic tools establish the baseline. SAST, SCA, DAST, and secret detection provide consistent, auditable, exhaustive coverage. They find the vulnerabilities—all of them, every time, regardless of syntax.

AI augments with remediation and reasoning. Once you know what's actually vulnerable, AI excels at generating fixes, understanding intent, and reasoning about business logic. These are interpretive tasks where stochastic systems add value.

The order matters. Detection must be deterministic to be trustworthy. Remediation can be probabilistic because humans review the output before it ships.

This is the architecture Replit advocates. It's also what we've built at Pixee—aggregating findings from 10+ deterministic scanners for consistent triage, then applying AI to research exploitability and generate fixes developers actually merge (76% merge rate vs. industry 40%). Not replacing detection with AI. Augmenting triage and remediation with AI.

The Vibe Coding Security Gap

Vibe coding isn't going away. AI-powered development environments are faster, more accessible, and genuinely productive for many use cases. Adoption won't slow down.

But the security model for AI-generated code can't be "ask the AI if its code is secure." That's asking the same system to both generate and validate—a conflict of interest at minimum, and architecturally unsound regardless.

Developers using vibe coding platforms deserve security tools that don't hallucinate. That means:

- Deterministic detection for baseline security guarantees

- Real-time vulnerability database access for dependency CVEs

- Structural analysis that doesn't depend on variable names or syntax choices

- AI-assisted remediation for generating fixes—not finding vulnerabilities

The platforms that get this right will separate from the pack. The ones promising "AI security scans" without deterministic foundations will leave their users exposed to the exact vulnerabilities Replit documented.

Replit's full research is available at securing-ai-generated-code.replit.app. The methodology section is worth reading if you're evaluating AI-powered security tools.

Sources:

- Feng, D. (2026). "Securing AI-Generated Code: Why Deterministic Tools Must Establish the Baseline." Replit Agent Infrastructure.

- CodeRabbit State of AI vs Human Code Generation Report (2025)

- OX Security "Army of Juniors" Research (2025)

- Tenzai AI Coding Platform Security Assessment (2026)