Why Purpose-Built Security Remediation Produces Higher Quality Fixes

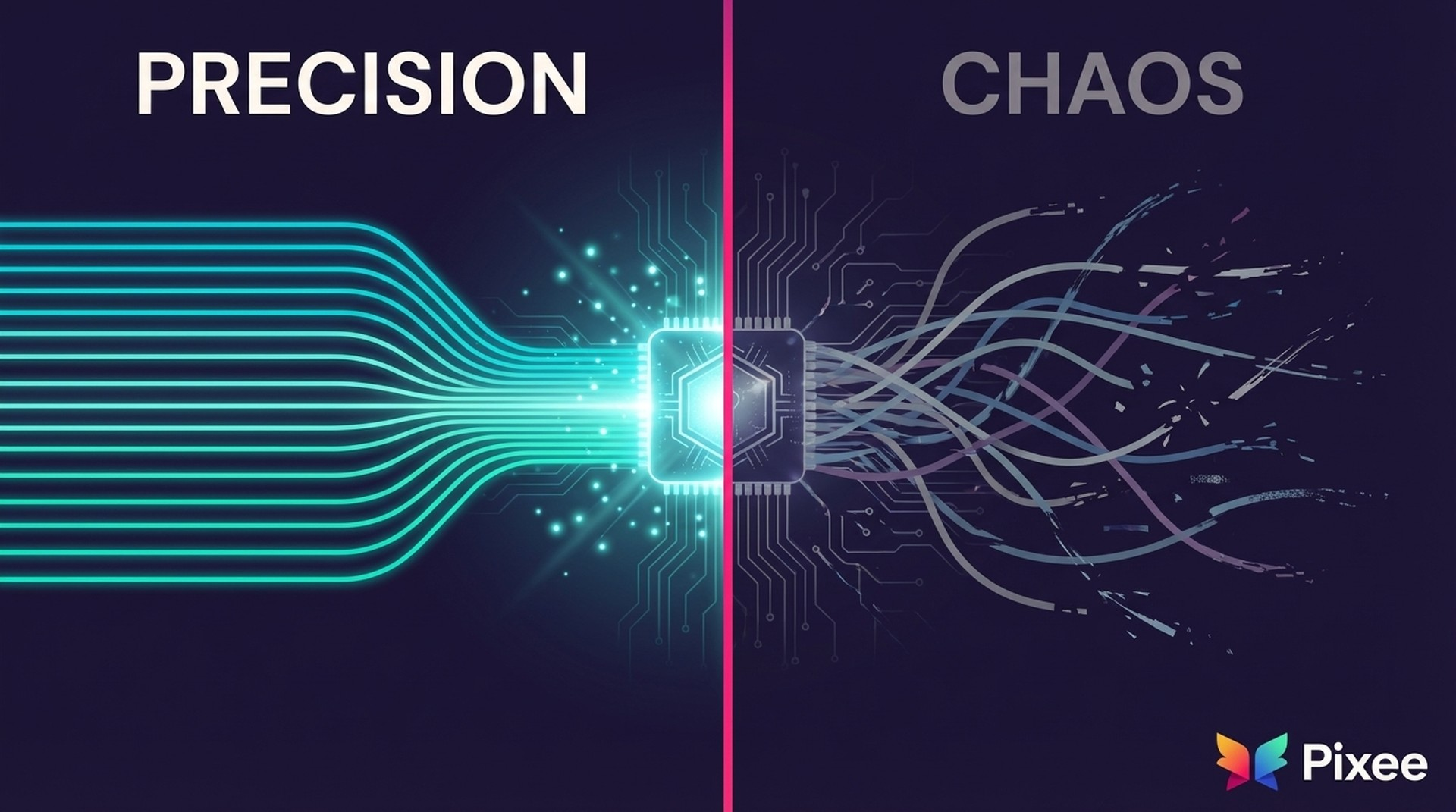

Generic AI can write code that compiles. Purpose-built security remediation produces code that ships.

The difference isn't intelligence. It's architecture. And in the context of DevSecOps, that architectural difference determines whether vulnerability triage and remediation actually reduce your security backlog or just create more noise.

When you ask ChatGPT or GitHub Copilot to fix a security vulnerability, you get a plausible solution. It might look reasonable. It might even work in isolation. But developers consistently reject these fixes because they don't fit the codebase: wrong patterns, missing context, unfamiliar conventions, or changes that simply aren't needed.

Purpose-built remediation takes a fundamentally different approach. It starts from the principle of constrained generation: fixes are built from proven security patterns combined with deep codebase context. The results are solutions that feel native to your codebase because they're built from your existing patterns.

One way to measure this difference is the rate at which developers merge AI-generated fixes. At Pixee, for example, we have historically seen a 76% merge rate across thousands of PRs and across customers, while generic AI tools hover below 20%. But the number isn't the point. The point is the design decisions behind it, which we believe every AppSec team should adopt, regardless of tools or tech stack, to prioritize reliability over creativity in AI-generated fixes.

This requirement isn't just a product of our experience building purpose-built security tools. Our benchmarks show how purpose-built AI systems outperform generic alternatives on vulnerability classification, and the requirement aligns with the broader realities of AI-generated code. For example, recent research from CodeRabbit examining hundreds of open-source repositories found a consistent pattern: AI-generated code often looks fine on the surface, but problems emerge once actual review begins. The issues aren't obvious syntax errors. They're subtle mismatches between what the AI produced and what the codebase actually needs.

For security fixes, this problem compounds. A "creative" solution to a SQL injection vulnerability might introduce new attack surfaces. A clever input validation approach might break legitimate use cases. Generic AI optimizes for plausible code. Security requires proven patterns.
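To make "proven pattern" concrete for the SQL injection case, here is a minimal Python sketch using the standard-library `sqlite3` module. The parameterized query is the canonical remediation OWASP recommends; the `users` table and the two function names are hypothetical, chosen only for illustration.

```python
import sqlite3


def find_user_unsafe(conn: sqlite3.Connection, name: str):
    # Vulnerable: user input is spliced directly into the SQL text,
    # so a value like "x' OR '1'='1" changes the query's logic.
    query = f"SELECT id, name FROM users WHERE name = '{name}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn: sqlite3.Connection, name: str):
    # Proven pattern: a parameterized query. The driver passes the value
    # separately from the SQL text, so it is always treated as data.
    query = "SELECT id, name FROM users WHERE name = ?"
    return conn.execute(query, (name,)).fetchall()


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, name TEXT)")
    conn.executemany("INSERT INTO users (name) VALUES (?)", [("alice",), ("bob",)])

    payload = "x' OR '1'='1"
    print(find_user_unsafe(conn, payload))  # [(1, 'alice'), (2, 'bob')]
    print(find_user_safe(conn, payload))    # []
```

The fix is one narrow change: ordinary lookups return the same rows, while the injected input is treated as a literal name and matches nothing.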

Why Generic AI Struggles with Security Fixes

ChatGPT and GitHub Copilot excel at general programming tasks. They can scaffold boilerplate, suggest completions, and even write tests. But vulnerability remediation isn't a general programming task.

When you ask generic AI to fix a vulnerability, it faces an impossible information gap. It doesn't know:

• Your validation libraries. Every mature codebase has established patterns for input validation, output encoding, and authentication checks. Generic AI doesn't know you already have a sanitizeInput() function that handles your specific encoding requirements.

• Your error handling conventions. Does your codebase throw exceptions, return error codes, or use Result types? Generic AI will guess, and it'll often guess wrong.

• Your security policies. Maybe your organization requires specific cryptographic implementations for compliance. Maybe certain frameworks are approved while others aren't. Generic AI has no access to this context.

The result is "creative" fixes that may or may not hold up at scale. Combined with the tool sprawl and false-positive overload that security teams already face, generic AI fixes often add noise rather than reduce it.

Consider what happened with Microsoft Copilot Studio in late 2025. Security researchers demonstrated how simple prompt injection attacks could leak sensitive data and execute unauthorized actions. The system produced plausible-looking outputs that violated fundamental security boundaries. Generic AI optimizing for helpfulness became a vulnerability vector. (This dynamic is part of a broader pattern with AI-generated code.)

This isn't a criticism of these tools. They're excellent at what they're designed for. But security remediation requires a different architecture entirely.

How Purpose-Built Remediation Works

High-quality fixes don't emerge from a single clever algorithm. They emerge from a multi-layer validation architecture where most fixes are rejected before developers ever see them.

The goal is to transform the developer experience from "author" to "reviewer." Instead of spending six hours implementing a SQL injection fix from scratch, developers spend five minutes reviewing a fix that already matches their codebase patterns.

Three layers make this possible.

Layer 1: Constrained Generation

The first gate happens during fix generation itself. The AI receives only what it needs to produce a targeted fix:

• Security-relevant code context (not the entire codebase)

• Canonical remediation patterns from OWASP, SANS, and CWE

• Your codebase's existing validation functions, import structures, and coding conventions

• Explicit constraints against behavioral changes

So when remediating a path traversal vulnerability, the system doesn't invent new sanitization methods. It applies patterns vetted across millions of applications, adapted to match how your team already writes code.
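For a sense of what a canonical pattern looks like, here is a minimal Python sketch of one widely used path traversal defense: resolve the requested path against a fixed base directory and reject anything that ends up outside it. The `safe_join` name is hypothetical, the sketch assumes Python 3.9+ for `Path.is_relative_to`, and in practice a fix would reuse whatever path-handling helper your codebase already has rather than adding a new one.

```python
from pathlib import Path


def safe_join(base_dir: str, user_path: str) -> Path:
    """Resolve user_path under base_dir, refusing paths that escape it."""
    base = Path(base_dir).resolve()
    # resolve() collapses ".." segments and follows existing symlinks, so the
    # check below sees where the path really points, not how it was spelled.
    # An absolute user_path replaces base entirely and is caught the same way.
    candidate = (base / user_path).resolve()
    if not candidate.is_relative_to(base):  # Python 3.9+
        raise ValueError(f"path escapes base directory: {user_path!r}")
    return candidate
```

With a base directory such as `/srv/app/uploads`, `safe_join(base, "reports/q1.pdf")` returns a path inside the base, while `"../../etc/passwd"` or an absolute path like `"/etc/passwd"` raises `ValueError`.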

Layer 2: The Fix Evaluation Agent

Here's where specialized security tools fundamentally diverge from generic AI.

A separate AI inference call (with entirely different context) validates each generated fix against a multi-dimensional quality rubric:

Safety validation ensures the fix changes no behavior beyond closing the vulnerability:

• No breaking API changes

• Preserved business logic

• Maintained backward compatibility

• No unintended side effects

Effectiveness validation confirms the fix actually addresses the security issue:

• Correctly remediates the vulnerability type

• Complete fix without requiring manual refinement

• Uses appropriate security controls

• Validates against known attack patterns

Cleanliness validation checks that the fix matches your standards:

• Proper formatting and indentation

• No extraneous changes

• Matches your coding conventions

• Clear, maintainable code

The system automatically rejects fixes failing ANY threshold. Developers never see them. This pre-filtering means the fixes that do reach developer review have already passed rigorous automated validation.
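The all-or-nothing rule is easy to state in code. Below is a minimal sketch of such a gate, assuming each fix has already been scored by a separate evaluator on the three dimensions above. The 0-to-1 scale, the threshold values, and the names `Evaluation`, `failed_dimensions`, and `gate` are all hypothetical, not a description of any particular product's internals.

```python
from dataclasses import dataclass

# Hypothetical per-dimension minimums on a 0.0-1.0 scale.
THRESHOLDS = {"safety": 0.9, "effectiveness": 0.9, "cleanliness": 0.8}


@dataclass
class Evaluation:
    """Scores a separate evaluator assigned to one generated fix."""
    fix_id: str
    scores: dict[str, float]


def failed_dimensions(ev: Evaluation) -> list[str]:
    # Each dimension is checked on its own; a missing score counts as a failure.
    return [dim for dim, minimum in THRESHOLDS.items()
            if ev.scores.get(dim, 0.0) < minimum]


def gate(evaluations: list[Evaluation]) -> tuple[list[str], dict[str, list[str]]]:
    """Split fixes into those shown to developers and those silently rejected."""
    accepted: list[str] = []
    rejected: dict[str, list[str]] = {}
    for ev in evaluations:
        failures = failed_dimensions(ev)
        if failures:
            rejected[ev.fix_id] = failures  # never reaches a developer
        else:
            accepted.append(ev.fix_id)      # proceeds to a pull request
    return accepted, rejected


if __name__ == "__main__":
    evals = [
        Evaluation("fix-1", {"safety": 0.95, "effectiveness": 0.97, "cleanliness": 0.9}),
        # High safety and effectiveness don't offset a failing cleanliness score.
        Evaluation("fix-2", {"safety": 0.99, "effectiveness": 0.99, "cleanliness": 0.5}),
    ]
    accepted, rejected = gate(evals)
    print(accepted)  # ['fix-1']
    print(rejected)  # {'fix-2': ['cleanliness']}
```

Checking each dimension against its own minimum, rather than averaging, is the design choice that matters: a fix that is safe and effective but sprawls into unrelated lines still gets rejected.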

Generic AI tools skip this entire layer. They generate code, maybe run a linter, and call it done. Specialized systems treat evaluation as equally important as generation.

Layer 3: Your Existing Controls

The final layer leverages your existing development infrastructure:

• PR-only workflow ensures no direct commits to protected branches

• Your code review processes still apply (automation augments, not replaces)

• Your CI/CD test suites validate the changes

• Your SAST tools re-scan the proposed fixes

• Standard Git rollback remains available

• Full audit trail supports compliance requirements

This layered approach means automated remediation operates within your existing security controls, not around them.

Developer Trust: The Real Quality Metric

Merge rate isn't just a metric. It's a proxy for developer trust accumulated over time.

Consider what happens when developers encounter automated PRs:

Day 1: Skepticism. "Another tool generating fixes. Let's see how bad these are."

Week 2: Evaluation. "Okay, three out of four PRs were actually good. One needed minor tweaks."

Month 2: Trust. "These fixes match how we write code. I can review instead of rewrite."

Month 6: Integration. "This is just how we handle security findings now."

This trust holds across the adoption curve, well past the initial novelty. Developers keep accepting fixes because the quality stays consistent.

Contrast this with the dependency update experience many teams know too well. Automated PRs flood in with changes that "should" be safe but often break builds, introduce subtle incompatibilities, or require significant testing. After enough noise, developers start auto-closing these PRs without review.

This is the "poisoned well" problem in action. One bad automated fix doesn't just get rejected. It contaminates developer perception of all future automated fixes. Teams that experience even a few breaking changes from automation often disable the tooling entirely.

The teams that successfully eliminate their security backlog do so by building developer trust through consistent quality, not by generating more automated noise.

Evaluating Automated Vulnerability Remediation

If you're evaluating automated vulnerability remediation, stop measuring "fixes generated" and start measuring "fixes merged."

One way to do this is to run automated remediation on a single repository for 30 days. Track:

• Total PRs generated (the number everyone likes to report)

• PRs merged without changes (actual trust signal)

• PRs merged with minor edits (acceptable friction)

• PRs rejected or ignored (the real failure rate)
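If it helps, here is a small Python sketch for turning those counts into rates at the end of the trial. It assumes you have already labeled each generated PR with one outcome; how you collect those labels (from your Git host's API, or by hand) is up to you, and the `Outcome` and `trial_report` names are hypothetical.

```python
from collections import Counter
from enum import Enum


class Outcome(Enum):
    MERGED_AS_IS = "merged without changes"
    MERGED_EDITED = "merged with minor edits"
    REJECTED = "rejected or ignored"


def trial_report(outcomes: list[Outcome]) -> dict[str, float]:
    """Summarize a trial; every rate is a fraction of all PRs generated."""
    total = len(outcomes)
    if total == 0:
        return {"generated": 0, "clean_merge_rate": 0.0,
                "merge_rate": 0.0, "failure_rate": 0.0}
    counts = Counter(outcomes)
    merged = counts[Outcome.MERGED_AS_IS] + counts[Outcome.MERGED_EDITED]
    return {
        "generated": total,                                      # the vanity number
        "clean_merge_rate": counts[Outcome.MERGED_AS_IS] / total,  # trust signal
        "merge_rate": merged / total,                            # includes minor edits
        "failure_rate": counts[Outcome.REJECTED] / total,        # the real failure rate
    }
```

For example, a made-up trial with 12 PRs merged as-is, 3 merged after edits, and 5 rejected or ignored gives a 60% clean-merge rate, a 75% overall merge rate, and a 25% failure rate out of 20 PRs generated.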

If you go down this path, let me know what you find.