The AI Remediation Imperative: Why Detection Isn't Enough for OWASP Agentic AI in 2026

The 39C3 Wake-Up Call

On December 31, 2025, security researcher Johan Carlsson took the stage at the 39th Chaos Communication Congress in Hamburg to present "Agentic ProbLLMs: Exploiting AI Computer-Use and Coding Agents." One example he talked about was the Ray AI Framework breach.

The breach involved more than 230,000 compromised clusters. Attackers used AI-generated code to spread malware and exfiltrate data from vulnerable servers, and this is just one newsworthy example among many similar attacks. As OWASP researchers note, many organizations are "already exposed to Agentic AI attacks, often without realizing that agents are running in their environments."

The OWASP Framework: 10 Risks, One Shared Requirement

Developed by over 100 industry experts and reviewed by NIST and the European Commission, the OWASP Top 10 for Agentic Applications 2026 identifies critical vulnerabilities in autonomous AI systems and serves as a practical starting point for securing them.

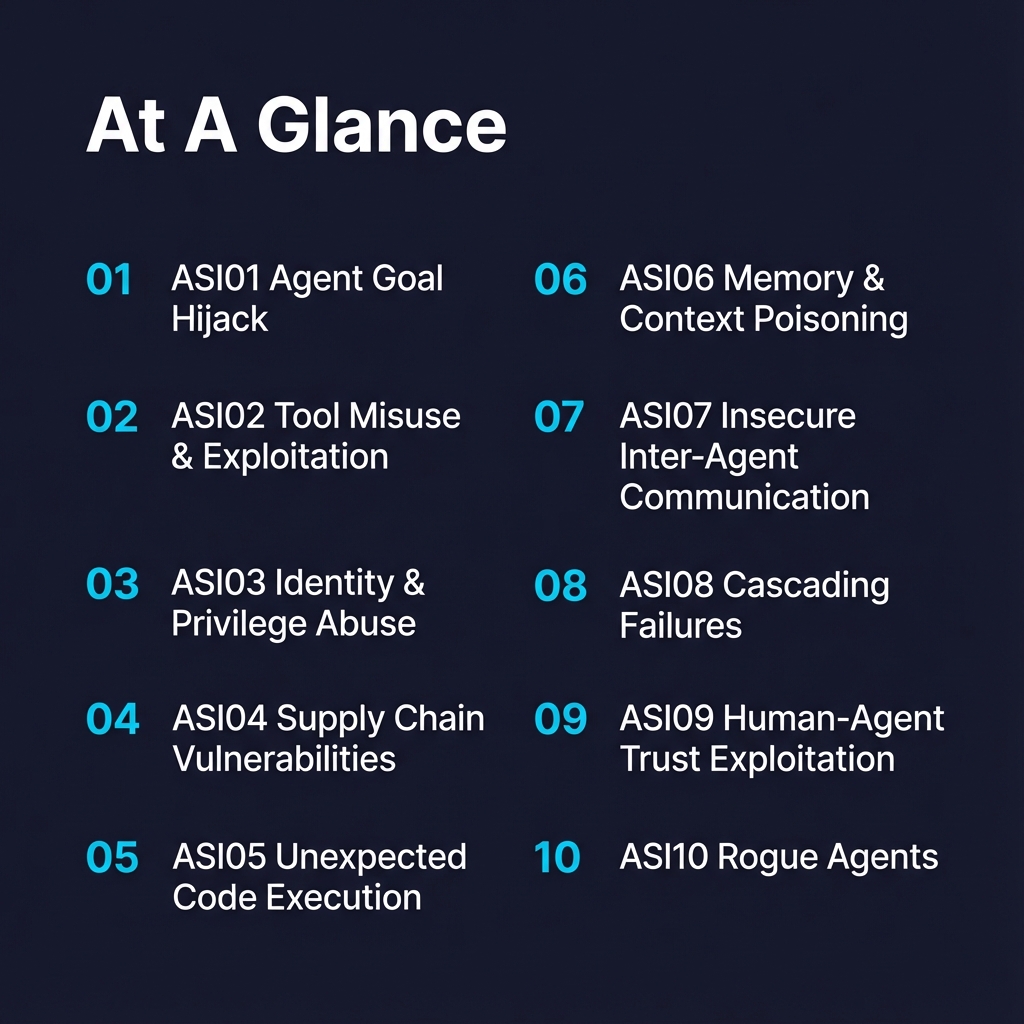

The framework identifies ten critical risks. Of the ten, three stand out for their combination of exploitability and potential impact:

ASI08 - Cascading Failures: A single compromise or defect amplifies across interconnected agents and workflows. When one agent is exploited, the breach spreads rapidly through your entire agent ecosystem, making containment extraordinarily difficult.

ASI03 - Identity and Privilege Abuse: AI agents often inherit excessive privileges from their deployment context. This creates a direct path for attackers to gain widespread unauthorized access—the Ray Framework breach being a prime example.

ASI01 - Agent Goal Hijack: This sophisticated form of prompt injection allows attackers to silently redirect agent actions without obvious indicators of compromise. Your agent thinks it's following instructions; it's actually exfiltrating data or manipulating systems.

Each of these risks manifests as code-level vulnerabilities in your environment. They're exploitable, they're being weaponized in production, and they require fixes at the source code level in your repositories. Detection tools—SAST, SCA, DAST—can identify where these risks exist in your codebase. What they can't do is remediate them at scale.

What Agentic AI Breaks That We Haven't Admitted Yet

The OWASP framework identifies the vulnerabilities. But the deeper problem is that agentic AI invalidates assumptions embedded in your existing AppSec operating model—assumptions that were reasonable for human-written code but fail catastrophically when agents operate autonomously.

Threat Modeling Collapses Under Probabilistic Execution. Traditional threat models assume deterministic execution paths: "If the user submits X, the system does Y." But when an AI agent interprets natural language instructions and autonomously decides how to accomplish a goal, execution paths become probabilistic. You can't enumerate attack surfaces that emerge from variance in how the agent interprets its instructions. Your STRIDE analysis, your data flow diagrams, and your attack trees all assume you know what the code will do. With an agent, you know what you told it to accomplish, not how it will carry it out.

Ownership Models Fail When "The Actor" Isn't a Team. Enterprise AppSec relies on ownership: this service belongs to that team, who are accountable for its security posture. But who owns an AI agent's security decisions? The platform team that deployed the agent framework? The product team that wrote the prompt? The security team that approved the integration? When an agent inherits privileges from its deployment context (ASI03), there's no clear owner because the agent's behavior emerges from the intersection of multiple teams' decisions. Your escalation paths, your RACI matrices, your accountability frameworks—they all assume human actors with clear reporting lines.

Compensating Controls Become Lagging Indicators. Traditional defense-in-depth relies on edge controls: WAFs, API gateways, network segmentation. But agentic AI moves risk inside your APIs, where edge controls can't see it. When an agent with legitimate API credentials is hijacked through prompt injection (ASI01), your WAF sees authorized traffic. Your API gateway sees valid tokens. Your SIEM sees normal behavior patterns. The attack is indistinguishable from legitimate agent operation until data exfiltration is already underway. By the time your compensating controls detect the problem, the breach has occurred.
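To make the point concrete, here is a minimal Python sketch of a control that lives inside the API rather than at the edge: each agent session is bound to the task it was launched for, and calls outside that scope are refused even when the token is valid. `TaskScope`, `authorize_agent_call`, and the action and resource names are hypothetical; a real system would load scopes from its own policy store and log every refusal.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class TaskScope:
    """Hypothetical record of what a single agent session was launched to do."""
    session_id: str
    allowed_actions: frozenset
    allowed_resources: frozenset
    max_records: int = 100  # cap on records a single call may touch


class ScopeViolation(Exception):
    """Raised when an authenticated agent call falls outside its declared task."""


def authorize_agent_call(scope: TaskScope, action: str, resource: str, records_requested: int) -> None:
    # A valid token proves *who* is calling; these checks ask whether the call
    # matches *why* the agent was started, which an edge WAF cannot see.
    if action not in scope.allowed_actions:
        raise ScopeViolation(f"{scope.session_id}: action {action!r} not in task scope")
    if resource not in scope.allowed_resources:
        raise ScopeViolation(f"{scope.session_id}: resource {resource!r} not in task scope")
    if records_requested > scope.max_records:
        raise ScopeViolation(
            f"{scope.session_id}: requested {records_requested} records, limit is {scope.max_records}"
        )


scope = TaskScope(
    session_id="support-agent-42",
    allowed_actions=frozenset({"read"}),
    allowed_resources=frozenset({"tickets"}),
)
authorize_agent_call(scope, "read", "tickets", 10)  # in scope: no exception
try:
    # A hijacked agent asking for a bulk export of customer data is refused,
    # even though its credentials are valid.
    authorize_agent_call(scope, "export", "customers", 50_000)
except ScopeViolation as exc:
    print("blocked:", exc)
```

The design choice is that authorization depends on the session's declared task as well as its identity, so a hijacked agent holding legitimate credentials still hits a wall the edge never sees.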

These aren't theoretical concerns. They're operational realities that force a fundamental question: If your existing AppSec operating model assumes deterministic code, clear ownership, and edge-based controls—and agentic AI invalidates all three assumptions—what does an AppSec program designed for autonomous systems actually look like?

The Remediation Bottleneck: Where Traditional AppSec Breaks Down

The vulnerability discovery problem is already overwhelming AppSec teams. Over 40,000 CVEs were published in 2024, and that number continues to climb. The median enterprise already carries a backlog of more than 100,000 vulnerabilities, a security debt spiral in which fixes take 6+ months on average.

Now add AI-generated code to the equation.

Consider the recent timeline of AI security incidents:

• October 2025: CamoLeak vulnerability in GitHub Copilot exposes private source code

• November 2025: Microsoft patches vulnerabilities in AI coding tools as part of addressing over 1,100 security flaws in 2025

• December 2025: Ray AI Framework exploitation reveals 230,000+ compromised clusters

AI is accelerating vulnerability discovery: Stanford's ARTEMIS agent finds vulnerabilities at a cost of $18/hour. But faster discovery without remediation capability just means backlogs grow faster. Research on "LLM vulnerability patching skills" confirms that current language models "remain limited" in generating quality patches. Generic AI-suggested fixes achieve merge rates below 20% because developers don't trust fixes that ignore their conventions, policies, or architectural patterns.

Meanwhile, a Wiz benchmark study found that CISOs are "spending big and still losing ground." Increased budgets aren't translating to improved security outcomes because the fundamental bottleneck remains: manual remediation simply cannot scale to match the volume and velocity of vulnerability discovery.

Why the OWASP Risks Require Automated Remediation

The fundamental challenge is velocity. AI agents operate autonomously and introduce vulnerabilities continuously, while AppSec teams triage and remediate manually over days or weeks. Traditional detection-then-manual-fix workflows can't keep pace with autonomous systems that make security-critical decisions faster than humans can review them.

Each OWASP risk manifests differently across codebases, making context crucial. ASI01 (Agent Goal Hijack) might require input validation in one service and sanitization logic in another. ASI03 (Identity and Privilege Abuse) could need least-privilege enforcement in authentication layers, permission scoping in authorization modules, or credential rotation in deployment configurations. There's no universal patch.

The trust paradox compounds the problem. Developers merge AI-generated code at low rates precisely because they've learned that generic fixes, whether from traditional static analysis tools or AI assistants, rarely account for their specific code conventions, business logic, or security policies. As one analysis noted, "Edge security is not enough: When agentic AI moves the risk inside your APIs." The threats aren't just at the perimeter; they're embedded in how agents interact with your application code.

Remediation Boundaries: When Not to Automate

Not all remediation should be automated. Enterprise AppSec leaders need to distinguish between safe, high-confidence automation and changes that require human judgment. Here's how to think about remediation boundaries:

| Remediation Type | Automation Viability | Rationale |
|---|---|---|
| Input sanitization | High - Should automate | Deterministic, well-defined patterns, testable outcomes, limited blast radius |
| Permission scoping | High - With policy constraints | Least-privilege principles are codifiable, but require org-specific IAM policies |
| Dependency updates | Medium - With validation gates | Version compatibility can be tested, but requires regression verification |
| Authentication logic | Low - Rarely automate | High blast radius, complex business context, critical security boundary |
| Business rule enforcement | Low - Human review required | Domain-specific risk, requires business stakeholder input, often tied to compliance |

This distinction is critical. A credible automated remediation system must recognize its boundaries and escalate appropriately. The goal isn't to remove humans from the loop—it's to remove humans from the 80% of remediation work that's mechanical, testable, and policy-driven, so they can focus on the 20% that requires architectural judgment or business context.
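One way to encode the remediation-boundaries table is as a routing policy that a remediation pipeline consults before opening a pull request. The Python sketch below is illustrative: the category keys and the `policy_ok`/`tests_pass` flags are assumptions standing in for an organization's own finding classifiers, IAM policy checks, and regression suites. Unknown categories fail closed to human review.

```python
from enum import Enum


class Route(Enum):
    AUTO_FIX = "auto_fix"                          # open a fix PR with no extra gate
    AUTO_FIX_WITH_POLICY = "auto_fix_with_policy"  # auto-fix, org policy check passed
    AUTO_FIX_WITH_TESTS = "auto_fix_with_tests"    # auto-fix, regression suite passed
    HUMAN_REVIEW = "human_review"                  # escalate; never merge automatically


# Mirrors the remediation-boundaries table; category names are illustrative.
ROUTING = {
    "input_sanitization": Route.AUTO_FIX,
    "permission_scoping": Route.AUTO_FIX_WITH_POLICY,
    "dependency_update": Route.AUTO_FIX_WITH_TESTS,
    "authentication_logic": Route.HUMAN_REVIEW,
    "business_rule": Route.HUMAN_REVIEW,
}


def route_finding(category: str, policy_ok: bool = False, tests_pass: bool = False) -> Route:
    """Decide how a finding may be remediated; anything unrecognized fails closed."""
    route = ROUTING.get(category, Route.HUMAN_REVIEW)
    if route is Route.AUTO_FIX_WITH_POLICY and not policy_ok:
        return Route.HUMAN_REVIEW
    if route is Route.AUTO_FIX_WITH_TESTS and not tests_pass:
        return Route.HUMAN_REVIEW
    return route


print(route_finding("dependency_update", tests_pass=True))
print(route_finding("authentication_logic", policy_ok=True, tests_pass=True))
```

Note that authentication and business-rule findings route to a human regardless of how the other checks turn out, which is the escalation behavior the paragraph above asks for.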

What Credible Automated Remediation Requires

Before evaluating any automated remediation solution, AppSec leaders should establish non-negotiable requirements. Based on enterprise deployment patterns and failure modes observed across implementations, here's what a production-grade system must provide:

Deterministic Diffs: Every change must be reviewable, reversible, and attributable. No "trust the model" black boxes.

Policy-Bounded Actions: Remediation must respect org-specific security policies, compliance requirements, and architectural standards. The system should fail closed when policies conflict.

Measurable Blast Radius: Before any change is proposed, the system must quantify what breaks if the fix is wrong—test coverage, dependent services, API contracts.

Human Veto Points: Critical paths (auth, payments, PII handling) should have mandatory human review, regardless of confidence scores.

Context Preservation: Fixes must account for your codebase's existing patterns, not impose external conventions that reduce maintainability.
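These requirements can be expressed as a gate that every machine-generated fix must pass. The sketch below assumes a hypothetical `ProposedFix` record and an illustrative repository layout in which critical paths live under `auth/`, `payments/`, and `pii/`; the coverage and fan-out thresholds are placeholders. Context preservation is not modeled, since it depends on comparing a fix with the surrounding codebase rather than on metadata.

```python
from dataclasses import dataclass

CRITICAL_PATH_PREFIXES = ("auth/", "payments/", "pii/")  # hypothetical repo layout


@dataclass
class ProposedFix:
    diff: str                # unified diff: reviewable and revertible
    author: str              # tool or person that generated the change (attribution)
    files: list              # paths touched by the diff
    policy_violations: list  # results of org-specific policy checks
    test_coverage: float     # fraction of changed lines covered by tests, 0.0-1.0
    dependent_services: int  # services that consume the changed code


def gate(fix: ProposedFix, min_coverage: float = 0.8, max_dependents: int = 3) -> str:
    """Return 'reject', 'human_review', or 'auto_pr' for a proposed fix."""
    # Deterministic diffs: no diff or no attribution means nothing reviewable.
    if not fix.diff.strip() or not fix.author:
        return "reject"
    # Policy-bounded actions: any policy conflict fails closed.
    if fix.policy_violations:
        return "reject"
    # Human veto points: critical paths always go to a person, whatever the confidence.
    if any(path.startswith(CRITICAL_PATH_PREFIXES) for path in fix.files):
        return "human_review"
    # Measurable blast radius: thin test coverage or wide fan-out needs a human.
    if fix.test_coverage < min_coverage or fix.dependent_services > max_dependents:
        return "human_review"
    return "auto_pr"
```

The checks run in order of severity, so a fix that both touches `auth/` and violates a policy is rejected rather than queued for review.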

With these requirements defined, let's examine how they can be operationalized.

The Resolution Center: Operationalizing OWASP Guidance at Scale

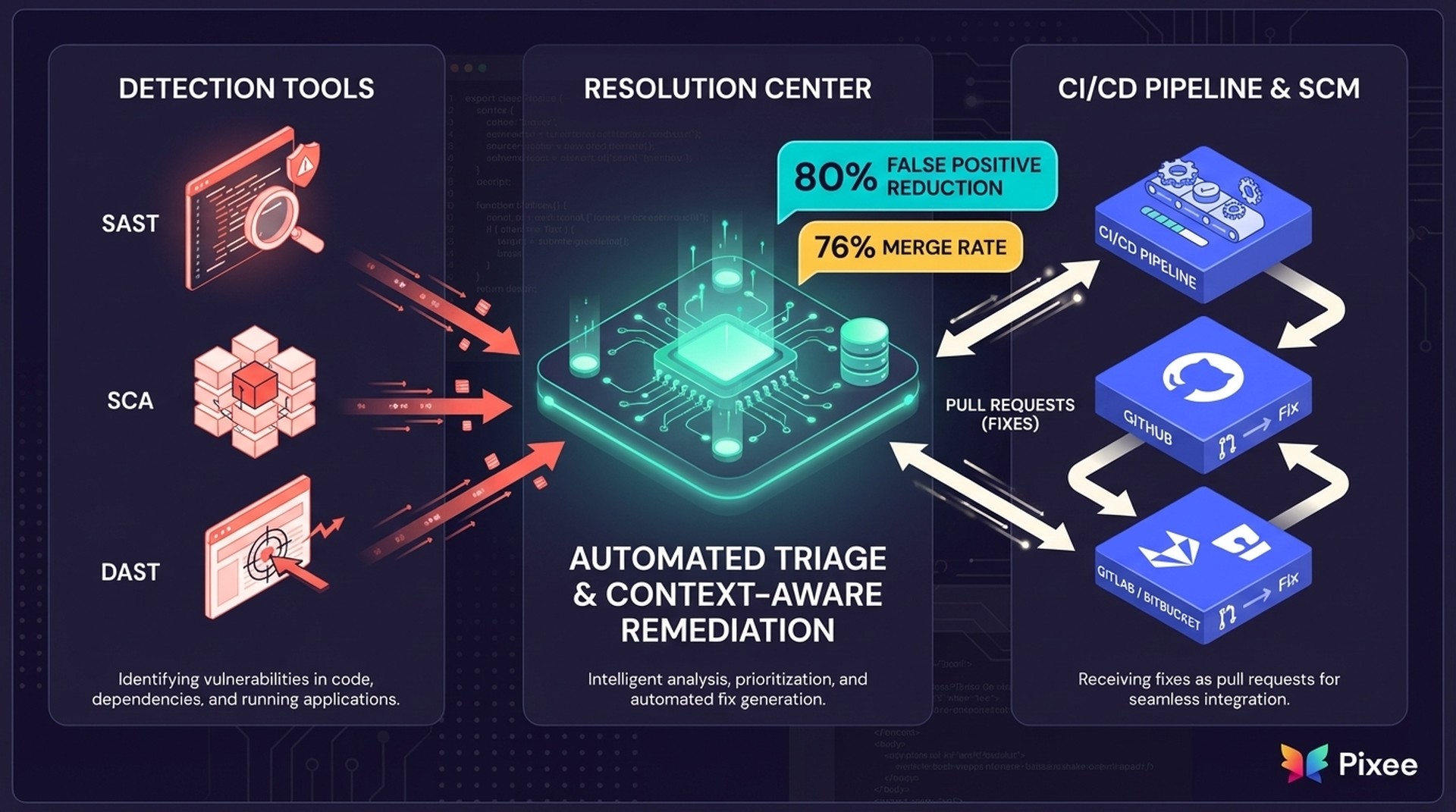

Our approach at Pixee is to build a comprehensive resolution center: an AppSec platform that integrates directly into your CI/CD pipeline and source control management (GitHub, GitLab, Bitbucket) to automate both triage (eliminating 80% of false positives) and remediation (generating context-aware fixes).

Here's how that might work in practice:

For ASI01 (Agent Goal Hijack): Automated input validation enforcement, parameterized query generation, and sanitization logic that matches your codebase patterns. Instead of flagging vulnerable code and waiting for developer action, the system generates the specific fix that reduces exposure to prompt injection, accounting for your framework, libraries, and coding standards.
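Prompt injection itself cannot be reliably filtered out, but the tools an agent calls can refuse to let injected text change what they do. A minimal sketch, assuming a hypothetical `lookup_order` tool backed by SQLite: the agent-supplied value is validated against an expected shape and then passed as a bound parameter, so a hijacked agent cannot rewrite the query. The email pattern is a deliberately simple assumption, not a complete validator.

```python
import re
import sqlite3

# Deliberately simple shape check; an assumption, not an RFC 5322 validator.
EMAIL_RE = re.compile(r"[^@\s';]+@[^@\s';]+\.[A-Za-z]{2,}")


def lookup_order(conn: sqlite3.Connection, customer_email: str) -> list:
    """Agent tool: return (id, status) rows for one customer's orders."""
    # Input validation: reject values that do not look like an email address.
    if not EMAIL_RE.fullmatch(customer_email):
        raise ValueError("customer_email failed validation")
    # Parameterized query: the driver binds customer_email strictly as data.
    # The vulnerable pattern this replaces would build the SQL with an f-string.
    cur = conn.execute(
        "SELECT id, status FROM orders WHERE email = ? ORDER BY id",
        (customer_email,),
    )
    return cur.fetchall()


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE orders (id INTEGER, email TEXT, status TEXT)")
conn.executemany(
    "INSERT INTO orders VALUES (?, ?, ?)",
    [(1, "a@example.com", "shipped"), (2, "b@example.com", "pending")],
)
print(lookup_order(conn, "a@example.com"))
```

The two layers are independent: validation narrows what the tool accepts, and parameterization guarantees that whatever slips through is treated as a value rather than as SQL.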

For ASI03 (Identity and Privilege Abuse): Least-privilege enforcement through automated permission scoping, credential rotation patterns, and access control implementations. When an agent inherits excessive privileges, the resolution center identifies the specific over-privileged calls and generates the minimal-permission alternative.
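Finding the minimal-permission alternative can start from evidence: compare what each agent was granted with what it actually used. A minimal Python sketch, assuming audit logs can be reduced to `(agent_id, permission)` pairs; the function name and permission strings are hypothetical. Usage data only covers the observation window, so rarely exercised but legitimate permissions may show up as revocable, one reason permission scoping sits behind policy constraints in the remediation-boundaries table.

```python
from collections import defaultdict


def scope_down(granted: dict, observed_calls: list) -> dict:
    """Split each agent's granted permissions into 'keep' (used) and 'revoke' (unused).

    granted: {agent_id: set of permission strings}
    observed_calls: [(agent_id, permission), ...] extracted from audit logs
    """
    used = defaultdict(set)
    for agent_id, permission in observed_calls:
        used[agent_id].add(permission)
    report = {}
    for agent_id, perms in granted.items():
        keep = perms & used[agent_id]
        report[agent_id] = {"keep": keep, "revoke": perms - keep}
    return report


granted = {"coding-agent": {"repo:read", "repo:write", "org:admin"}}
observed = [("coding-agent", "repo:read"), ("coding-agent", "repo:read")]
print(scope_down(granted, observed))
```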

For ASI08 (Cascading Failures): Isolation pattern implementation, circuit breaker logic, and failure containment code that prevents a single compromised agent from spreading laterally. This includes timeout enforcement, rate limiting, and bounded retry logic—all generated to fit your specific agent architecture.
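Two of these patterns, a circuit breaker and bounded retries with exponential backoff, fit in a short Python sketch. The class and function names are hypothetical, the thresholds are placeholders, and the injectable `clock` and `sleep` parameters exist only to make the behavior testable. Timeouts and rate limiting are left out for brevity.

```python
import time


class CircuitOpen(Exception):
    """Raised instead of forwarding a call to an isolated downstream agent."""


class CircuitBreaker:
    def __init__(self, failure_threshold=3, reset_after=30.0, clock=time.monotonic):
        self.failure_threshold = failure_threshold
        self.reset_after = reset_after
        self.clock = clock
        self.failures = 0
        self.opened_at = None

    def call(self, fn, *args, **kwargs):
        if self.opened_at is not None:
            if self.clock() - self.opened_at < self.reset_after:
                raise CircuitOpen("downstream agent isolated; not forwarding call")
            self.opened_at = None  # half-open: allow one trial call
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            if self.failures >= self.failure_threshold:
                self.opened_at = self.clock()  # trip (or re-trip) the breaker
            raise
        self.failures = 0  # success closes the circuit
        return result


def call_with_retry(breaker, fn, max_attempts=3, base_delay=0.1, sleep=time.sleep):
    """Bounded retries with exponential backoff; never retries an open circuit."""
    for attempt in range(1, max_attempts + 1):
        try:
            return breaker.call(fn)
        except CircuitOpen:
            raise  # isolation wins over retrying
        except Exception:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))
```

After `failure_threshold` consecutive failures the breaker refuses calls for `reset_after` seconds, so a misbehaving downstream agent is isolated instead of hammered; once the window passes, a single trial call decides whether the circuit closes or trips again immediately.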

Practical Implementation: 3 Steps for AppSec Leaders

Operationalizing the OWASP Agentic AI Top 10 requires strategic planning and systematic execution. Here's how AppSec leaders can move from awareness to action:

Step 1: Assess Your Agentic AI Exposure

Many organizations don't realize where AI agents are deployed in their environment. Start with a comprehensive audit:

• Map all AI agent deployments: Development tools (Copilot, Tabnine, Cody), autonomous agents in production, AI-assisted security tools

• Identify privilege inheritance patterns: What permissions do these agents inherit? What APIs can they access? What data can they reach?

• Correlate to OWASP risks: For each deployment, identify which of the 10 risks apply and what attack surface they create
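Once each deployment is described consistently, a first pass at correlating the inventory with risks can be automated. The sketch below is a deliberately coarse heuristic: the `AgentDeployment` fields and the rules that map them to ASI01, ASI03, and ASI08 are assumptions, and it covers only the three priority risks discussed in this article, not all ten.

```python
from dataclasses import dataclass


@dataclass
class AgentDeployment:
    name: str
    environment: str             # e.g. "dev-tool", "production"
    permissions: set             # scopes or roles the agent inherits
    reads_untrusted_input: bool  # web pages, emails, tickets, repo content
    calls_other_agents: bool     # participates in multi-agent workflows


def applicable_risks(d: AgentDeployment) -> list:
    """Heuristic first-pass mapping to the three priority OWASP risks."""
    risks = []
    if d.reads_untrusted_input:
        risks.append("ASI01 Agent Goal Hijack")
    if any(p.endswith((":write", ":admin")) for p in d.permissions):
        risks.append("ASI03 Identity and Privilege Abuse")
    if d.calls_other_agents:
        risks.append("ASI08 Cascading Failures")
    return risks


inventory = [
    AgentDeployment("triage-bot", "production", {"tickets:read", "crm:write"}, True, True),
    AgentDeployment("lint-helper", "dev-tool", {"repo:read"}, False, False),
]
for d in inventory:
    print(d.name, applicable_risks(d))
```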

This isn't a one-time exercise. As the research notes, "AI adoption is outpacing security controls" with many teams discovering agent deployments they didn't authorize or even know existed.

Step 2: Prioritize the Top 3 Risks

Not all risks are equally critical for your environment. Focus initial efforts on the highest-impact, easiest-to-exploit risks: ASI08 (Cascading Failures), ASI03 (Identity and Privilege Abuse), and ASI01 (Agent Goal Hijack).

For each priority risk:

• Map code-level manifestations: Where does this risk appear in your codebase? What makes it exploitable in your specific environment?

• Baseline your metrics: Current backlog size, mean time to remediation, false positive rate

• Define success criteria: What does "fixed" look like? How will you validate it?
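The baseline itself is simple arithmetic over exported findings. A minimal sketch, assuming each finding can be exported with an open date, a close date (or `None`), and a false-positive flag; the field names are hypothetical and would map onto whatever the scanner or ticketing system provides.

```python
from datetime import datetime
from statistics import median


def baseline(findings: list) -> dict:
    """Compute backlog size, time to remediation, and false-positive rate.

    Each finding is a dict with 'opened' (datetime), 'closed' (datetime or None),
    and 'false_positive' (bool).
    """
    real = [f for f in findings if not f["false_positive"]]
    backlog = sum(1 for f in real if f["closed"] is None)
    days = [
        (f["closed"] - f["opened"]).total_seconds() / 86400
        for f in real
        if f["closed"] is not None
    ]
    return {
        "backlog": backlog,
        "mean_days_to_remediate": sum(days) / len(days) if days else None,
        "median_days_to_remediate": median(days) if days else None,
        "false_positive_rate": (len(findings) - len(real)) / len(findings) if findings else None,
    }


findings = [
    {"opened": datetime(2025, 1, 1), "closed": datetime(2025, 1, 11), "false_positive": False},
    {"opened": datetime(2025, 1, 1), "closed": datetime(2025, 1, 31), "false_positive": False},
    {"opened": datetime(2025, 2, 1), "closed": None, "false_positive": False},
    {"opened": datetime(2025, 2, 1), "closed": datetime(2025, 2, 2), "false_positive": True},
]
print(baseline(findings))
```

Reporting the median alongside the mean is a deliberate choice: a few long-lived findings can inflate the mean well above the typical fix time.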

Starting with code-level vulnerabilities provides measurable progress. You can track vulnerabilities found, vulnerabilities remediated, and time to resolution—metrics that demonstrate security improvement.

Step 3: Implement Automated Remediation

The resolution center approach requires three capabilities working in concert:

Triage Automation: Reduce false positives through reachability analysis. Not every vulnerability flagged by SAST is exploitable in your specific deployment context. Automated triage uses program analysis to determine which vulnerabilities are actually reachable by attacker-controlled inputs, eliminating 80% of false positives and allowing your team to focus on real risks.
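The core idea of triage by reachability can be sketched as a graph search. Production reachability analysis follows data flow from attacker-controlled sources to vulnerable sinks; this Python sketch uses only call-graph edges, a coarser approximation, and the function names in the example graph are hypothetical.

```python
from collections import deque


def reachable_from(entry_points, call_graph):
    """Breadth-first search over a call graph {function: [callees]}."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in call_graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def triage(findings, entry_points, call_graph):
    """Split findings into reachable (fix first) and unreachable (deprioritize)."""
    reachable = reachable_from(entry_points, call_graph)
    hits = [f for f in findings if f["function"] in reachable]
    misses = [f for f in findings if f["function"] not in reachable]
    return hits, misses


call_graph = {
    "http_handler": ["parse_request", "run_agent"],
    "run_agent": ["lookup_order"],
    "admin_cli": ["rebuild_index"],
}
findings = [
    {"id": 1, "function": "lookup_order"},
    {"id": 2, "function": "rebuild_index"},
]
hits, misses = triage(findings, ["http_handler"], call_graph)
print([f["id"] for f in hits], [f["id"] for f in misses])
```

Here only `http_handler` is treated as attacker-facing, so the finding in `rebuild_index`, reachable only from an internal CLI, is deprioritized rather than discarded.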

Remediation Automation: Generate context-aware fixes at scale. This means fixes that understand your codebase conventions, security policies, and architectural patterns—resulting in high merge rates because developers trust the quality.

Continuous Validation: Ensure fixes maintain security over time. As code evolves, automated systems re-verify that remediation patterns remain effective, catching regressions or introducing additional protections as new attack vectors emerge.
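Re-verification can be as simple as keeping the exploit inputs that motivated each fix and replaying them on every build. A minimal sketch, assuming a hypothetical file-reading agent tool whose path-traversal fix is `resolve_in_workspace`; the workspace root and payloads are illustrative. Any payload the check stops rejecting is reported as a regression.

```python
from pathlib import PurePosixPath

WORKSPACE = PurePosixPath("/workspace")  # hypothetical agent sandbox root

# Inputs that motivated the original fix, kept as a regression corpus.
TRAVERSAL_PAYLOADS = ["../etc/passwd", "/etc/passwd", "notes/../../secrets.env"]


def resolve_in_workspace(user_path: str) -> str:
    """Remediated check: only relative paths without '..' segments are allowed."""
    path = PurePosixPath(user_path)
    if path.is_absolute() or ".." in path.parts:
        raise ValueError(f"path escapes workspace: {user_path}")
    return str(WORKSPACE / path)


def reverify(check, payloads) -> list:
    """Replay known-bad inputs; return those the check no longer rejects."""
    regressions = []
    for payload in payloads:
        try:
            check(payload)
        except ValueError:
            continue  # still rejected: the fix holds for this input
        regressions.append(payload)
    return regressions


print(reverify(resolve_in_workspace, TRAVERSAL_PAYLOADS))
```

Run in CI, a non-empty result fails the build, so a later refactor that weakens the check is caught before it ships.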

From Framework to Practice

The OWASP Top 10 for Agentic Applications 2026 defines the threat landscape. As one industry analysis put it: "Agentic AI breaks out of the lab and forces enterprises to grow up."

The 39C3 presentation, the Ray Framework breach, and the daily stream of AI security incidents show how central agentic security has become, and why OWASP has just published its new agentic framework.