Machine-Speed Triage: The Three Intelligence Types Security Needs Now

Detection Isn't Your Problem. Triage Is.

If you run AppSec today, you already know the uncomfortable truth: detection isn't the bottleneck. Triage is.

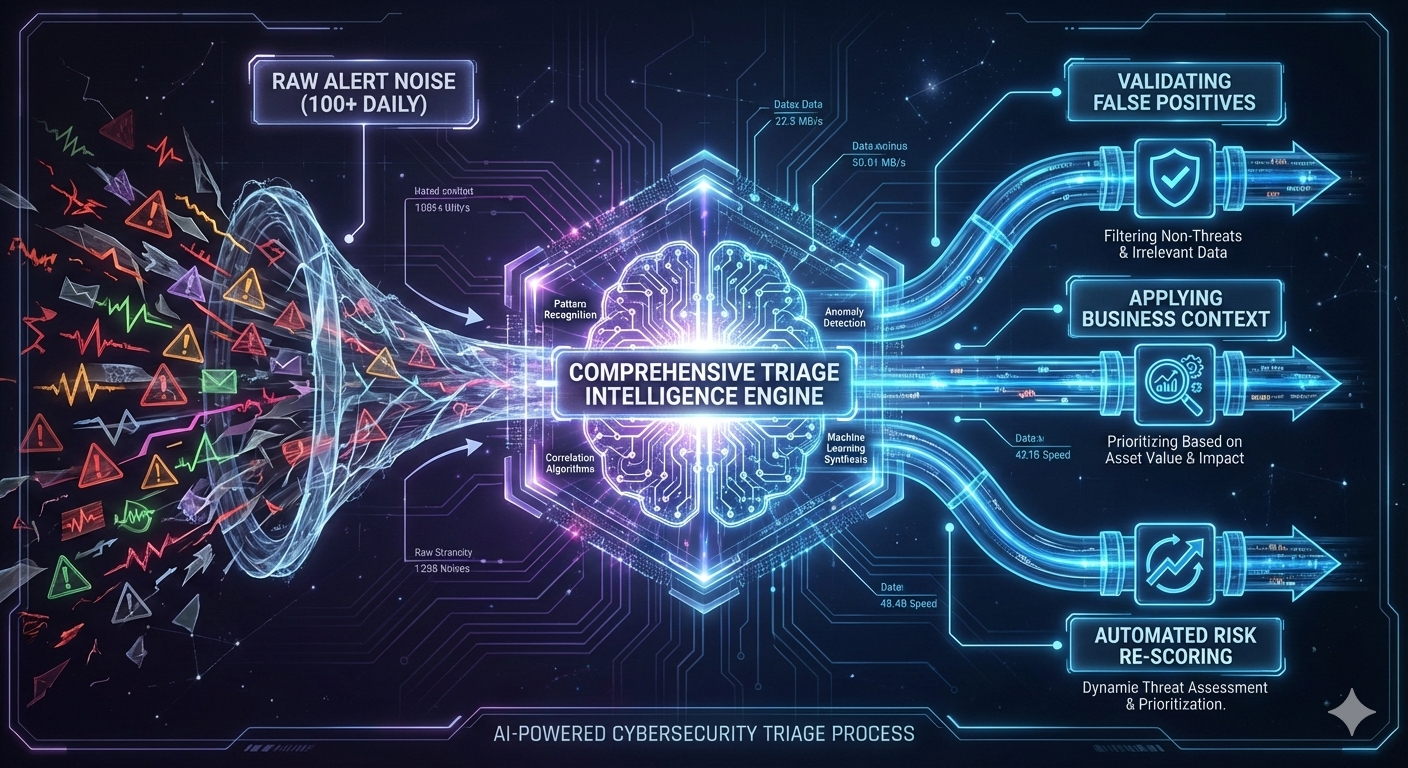

Every day, you make one of three judgments on each security alert before remediation even enters the picture:

1. False Positive: The scanner flagged something that isn't actually a vulnerability (35-40% of alerts)

2. Won't Fix: The vulnerability is real, but business context makes it acceptable risk (30-40% of alerts)

3. Risk Re-Scoring: The vulnerability is real but actual exploitability is much lower than the CVSS score suggests (10-20% of alerts)

Taken together, roughly 70-80% of alerts are closed or deprioritized by a triage decision, not by remediation. Only 20-30% require immediate action.

Yet your team still manually investigates nearly 100% of findings to figure out which 20-30% deserve attention. At 100+ findings daily, this manual investigation becomes the bottleneck that prevents remediation at scale.

The Three Triage Decisions Security Teams Make Every Day

Whether you see 100 findings or 10,000, you're making the same three calls over and over. The problem isn't the decisions themselves—it's the manual investigation required to make them.

"50, 60 to 70 to 80% of findings [we see] are false positives OR not important OR don't need to be fixed. The triage effort is entirely manual and requires expertise."

— Head of Application Security, Fortune 500 Financial Services Institution

That breakdown mirrors what we see across enterprise security teams.

One mid-market financial institution we work with broke down their SCA findings: 40% false positives, 30-40% where automated solutions existed, 10-20% requiring manual security engineer review.

But here's what happens when that volume becomes unsustainable:

"Before SonarQube, we used Fortify...when the well is already poisoned [by so many false positives], it's very hard to test developers' mind anymore."

— Principal Security Architect, Large Asia-Pacific Financial Institution (5,000+ developers)

That "poisoned well" effect—where alert volume overwhelms trust—is the hidden cost of manual triage at scale. Developers stop believing the tools because they've investigated too many false alarms. At that point, you're not just facing a triage bottleneck. You're facing a credibility crisis that no amount of manual investigation can fix.

False Positives: When Scanners See Ghosts (35-40%)

The scanner flagged something that looks like a vulnerability, but it isn't actually exploitable in your environment.

Here's a real example: A scanner identifies Log4j in your codebase and flags it as critical. You investigate. It's a test dependency, never executed in production and completely unreachable from any external input.

This is the category most security teams think they've solved with reachability analysis. They haven't.

Reachability answers one question ("Can an attacker trigger this code path?"), but false positives emerge from dozens of causes like:

• Test dependencies flagged as production risks

• Libraries present but not invoked

• Vulnerabilities in code paths protected by upstream validation

• Scanner misidentification of language constructs

• Duplicate findings across multiple tools (the same issue flagged by Snyk, Veracode, and SonarQube)

Why reachability analysis isn't enough: Reachability is powerful for eliminating unused dependencies, but it only addresses the subset of false positives stemming from code path analysis. It doesn't handle scanner artifacts, duplicate alerts across tools, or vulnerabilities rendered unexploitable by architectural controls. For a team triaging 100+ daily findings, reachability might cut volume by 30-40%. You're still manually investigating the remaining 60-70%.
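
To make two of the non-reachability causes concrete, here is a minimal sketch of duplicate consolidation and test-scope filtering. The `Finding` fields, the fingerprint (rule ID plus package plus path), and the `scope` labels are illustrative assumptions, not any scanner's real export schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Finding:
    # Hypothetical normalized shape; real scanner exports differ per tool.
    tool: str
    rule_id: str   # e.g. a CVE or CWE identifier
    package: str
    path: str
    scope: str     # "runtime" or "test" (assumed to come from the build manifest)


def fingerprint(f: Finding) -> tuple[str, str, str]:
    """Key that treats the same issue reported by different tools as one finding."""
    return (f.rule_id.upper(), f.package.lower(), f.path)


def consolidate(findings: list[Finding]) -> dict[tuple[str, str, str], list[Finding]]:
    """Group findings by fingerprint so each issue is triaged once."""
    groups: dict[tuple[str, str, str], list[Finding]] = {}
    for f in findings:
        groups.setdefault(fingerprint(f), []).append(f)
    return groups


def likely_false_positive(group: list[Finding]) -> bool:
    """Flag a group only when every report places it in test scope."""
    return all(f.scope == "test" for f in group)


findings = [
    Finding("Snyk", "CVE-2021-44228", "log4j-core", "pom.xml", "test"),
    Finding("Veracode", "cve-2021-44228", "log4j-core", "pom.xml", "test"),
    Finding("SonarQube", "CWE-89", "admin-ui", "src/AdminDao.java", "runtime"),
]
groups = consolidate(findings)
for key, group in groups.items():
    tools = sorted(f.tool for f in group)
    verdict = "likely false positive" if likely_false_positive(group) else "needs review"
    print(key[0], tools, verdict)
```

Neither check involves call-graph analysis, which is the point: both sit outside what reachability covers. A production version would need a sturdier fingerprint, since tools rarely agree on paths and identifiers this neatly.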

Won't Fix: Business Context Trumps CVSS (30-40%)

This is a business decision: the vulnerability is technically real, but compensating controls or business context make the risk acceptable.

Here's a real example: A scanner identifies a SQL injection vulnerability in a legacy admin interface—critical severity, exploitable, real. You investigate the system architecture. The vulnerable service runs on an isolated network segment, accessible only via internal VPN with multi-factor authentication, serving five administrators who've been with the company for 10+ years.

Technical risk: High. Business risk: Acceptable. Remediation decision: Won't fix (document the compensating controls already in place and record the risk acceptance).

Why ASPM can't fully solve this: Application Security Posture Management (ASPM) tools correlate vulnerabilities with business context—asset exposure, data sensitivity, blast radius. These are the right questions. But ASPM relies on external inference (cloud configurations, network topology, metadata) without visibility into code-level context. A vulnerability in an internet-facing API sounds critical—unless that API validates all input against a strict schema before the vulnerable code path executes. ASPM can't see that validation layer. You're still manually triaging 60-80% of findings to validate what ASPM prioritized.
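
A won't-fix decision is only defensible if it is recorded with its justification. The sketch below shows one way to capture that, assuming a hypothetical policy in which a real finding can be accepted only when a fixed set of compensating controls is present. The control names, the 180-day review window, and the `RiskAcceptance` fields are all placeholders.

```python
from dataclasses import dataclass
from datetime import date, timedelta

# Hypothetical policy: controls that must all be present before a real,
# exploitable finding may be accepted rather than fixed.
REQUIRED_CONTROLS = {"network_isolation", "vpn_only", "mfa"}


@dataclass
class RiskAcceptance:
    finding_id: str
    rationale: str
    controls: frozenset
    approved_by: str
    expires: date  # acceptance is revisited, not permanent


def accept_risk(finding_id, controls, rationale, approved_by, today, review_days=180):
    """Return a RiskAcceptance, or raise ValueError if required controls are missing."""
    missing = REQUIRED_CONTROLS - set(controls)
    if missing:
        raise ValueError(f"cannot accept {finding_id}: missing {sorted(missing)}")
    return RiskAcceptance(
        finding_id=finding_id,
        rationale=rationale,
        controls=frozenset(controls),
        approved_by=approved_by,
        expires=today + timedelta(days=review_days),
    )


record = accept_risk(
    "SQLI-legacy-admin",
    {"network_isolation", "vpn_only", "mfa"},
    "Isolated segment, VPN plus MFA, five long-tenured administrators",
    approved_by="appsec-lead",
    today=date(2025, 1, 1),
)
print(record.finding_id, "accepted until", record.expires)
```

The expiry date matters as much as the approval. Network segments get re-architected and administrators change, so an acceptance that never comes up for review quietly turns into an unmanaged risk.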

Risk Re-Scoring: When CVSS Lies (10-20%)

This is a technical correction: the vulnerability is real, but the CVSS score doesn't reflect your environment's actual exploitability.

Take this example:

A scanner reports CVSS 9.8 (Critical) for a deserialization vulnerability. You investigate. The vulnerable endpoint lives behind an admin interface requiring MFA, accessible only to internal employees, and the exploit requires knowledge of internal object structures not exposed externally.

Actual risk in your environment: Medium or Low.

CVSS score says: Drop everything and fix immediately.

Why CVSS scoring fails here: CVSS standardizes vulnerability severity across industries and environments, creating a common language. But JFrog's research shows 88% of Critical CVEs aren't actually critical when you account for environmental factors. CVSS measures theoretical exploitability in a generic environment—it can't account for your authentication controls, network segmentation, architectural patterns, or monitoring capabilities. Your team must manually re-assess every high-severity finding against actual risk posture. For 100+ daily findings, that's another full-time equivalent just adjusting scores.
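
The deserialization example can be sketched as a re-scoring function. CVSS defines its own Environmental metric group for this purpose; the code below does not implement those formulas. It uses a simpler additive heuristic, and the deduction values are placeholders a security team would have to calibrate. Only the severity bands follow the CVSS v3.x qualitative rating scale.

```python
# Hypothetical deductions per environmental factor; the values are placeholders,
# not part of the CVSS specification.
DEDUCTIONS = {
    "requires_mfa": 1.5,
    "internal_only": 2.0,
    "needs_internal_knowledge": 1.0,
}


def severity_band(score: float) -> str:
    """CVSS v3.x qualitative severity rating for a numeric score."""
    if score == 0.0:
        return "None"
    if score < 4.0:
        return "Low"
    if score < 7.0:
        return "Medium"
    if score < 9.0:
        return "High"
    return "Critical"


def rescore(base: float, factors: set) -> tuple:
    """Lower the base score for each recognized factor and record the reasons."""
    score = base
    reasons = []
    for name in sorted(factors):
        if name in DEDUCTIONS:
            score -= DEDUCTIONS[name]
            reasons.append(f"-{DEDUCTIONS[name]} {name}")
    # Floor at 0.1: a real vulnerability is never re-scored to "None".
    return round(max(score, 0.1), 1), reasons


adjusted, reasons = rescore(
    9.8, {"requires_mfa", "internal_only", "needs_internal_knowledge"}
)
print(severity_band(9.8), "->", severity_band(adjusted), adjusted, reasons)
```

Returning the reasons alongside the score keeps the adjustment auditable. A reviewer can see which environmental facts drove the change and challenge any that no longer hold.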

Why Current Approaches Fail: The One-Third Solution Problem

Notice the pattern?

• Reachability analysis handles some false positives

• ASPM addresses some business context decisions

• CVSS attempts risk standardization

Each tool solves one category—or part of one category—and leaves the other two requiring manual investigation.

Security teams deploy tools hoping to automate triage. The tools handle one-third of the problem. The other two-thirds still require human investigation at scale.

The problem compounds when organizations deploy an average of 5.3 security scanning tools hoping more coverage equals better security. Instead, they get:

• Duplicate findings across tools (the same vulnerability flagged three times)

• Conflicting severity assessments (CVSS 8.1 in one tool, 9.8 in another)

• No unified triage workflow (each tool has its own dashboard and ticketing system)

• Alert fatigue that causes teams to simply ignore the backlog entirely, creating a "security debt" spiral that no new tool can dig them out of

More tools don't solve the triage bottleneck. They multiply it by fragmenting attention and adding noise. 58% of organizations that experienced breaches had prevention tools in place—detection isn't the problem. The problem is capacity to triage findings at the scale modern development produces.

The Path Forward: Comprehensive Triage Intelligence

Manual triage has always been hard. What's changed is that it's now a business risk you can't afford to ignore.

AI is accelerating the problem at both ends. AI developer tools now generate 34% of production code, with 48% of that AI-generated code containing vulnerabilities. At the same time, Anthropic reported an espionage campaign in which attackers used Claude to automate an estimated 80-90% of the operation. Security teams now face an average of seven new zero-day vulnerabilities per week, while simultaneously triaging an ever-growing backlog of alerts from AI-accelerated code production.

Meanwhile, compliance and regulatory requirements are compressing response windows. NIS2 comes into force December 2025, imposing 24-hour disclosure requirements on 30,000 European organizations. The Pentagon moved thousands of defense contractors to mandatory third-party certification.

The gap between alert volume and triage capacity isn't just growing—it's accelerating.

The solution isn't better tools for one category of triage. It's intelligence systems that address all three categories simultaneously.

Comprehensive Triage Intelligence: Addressing All Three Categories

Intelligent triage automation must handle:

False Positives: Not just reachability analysis, but scanner artifact detection, duplicate finding consolidation across tools, and automated validation of whether flagged code actually presents risk given upstream protections.

Won't Fix Context: Integration with architectural controls (network segmentation, authentication layers, input validation), business criticality metadata, and risk acceptance workflows that let security engineers document "this is acceptable risk because..." without manual ticket-by-ticket justification.

Risk Re-Scoring: Automated adjustment of CVSS scores based on environmental context, including authentication requirements, network exposure, monitoring coverage, and exploit complexity given your specific architecture.

The goal isn't eliminating human judgment. It's reserving human judgment for the 20-30% of findings that genuinely require expert security analysis.
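
One way to picture the three categories working together is a single decision function that tries each check in turn and hands whatever is left to a human. This is a minimal sketch: the `Context` fields are hypothetical signals that a real system would have to derive from code, architecture, and risk records, and the ordering of the checks is an assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    FALSE_POSITIVE = "false positive"
    WONT_FIX = "won't fix"
    RESCORED = "re-scored"
    ACTION = "requires action"


@dataclass
class Context:
    # All fields are hypothetical signals a triage system would have to supply.
    test_only: bool = False
    upstream_validation: bool = False
    risk_accepted: bool = False
    internal_only: bool = False
    requires_mfa: bool = False


def triage(ctx: Context) -> tuple:
    """Apply the three triage checks in order; anything left needs a human."""
    if ctx.test_only:
        return Decision.FALSE_POSITIVE, "test dependency, not shipped to production"
    if ctx.upstream_validation:
        return Decision.FALSE_POSITIVE, "input validated before the vulnerable code path"
    if ctx.risk_accepted:
        return Decision.WONT_FIX, "documented risk acceptance with compensating controls"
    if ctx.internal_only and ctx.requires_mfa:
        return Decision.RESCORED, "admin interface with MFA, no external exposure"
    return Decision.ACTION, "no mitigating context found"


for ctx in (Context(test_only=True), Context(internal_only=True, requires_mfa=True), Context()):
    decision, reason = triage(ctx)
    print(f"{decision.value}: {reason}")
```

The default branch is deliberate. When no mitigating context is found, the finding goes to a person, so missing data never silently closes an alert.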

What "Good" Looks Like: Five Characteristics

Fast: Triage at machine speed, processing hundreds of findings in seconds rather than days. When development velocity grows 10x, triage velocity must grow proportionally.

Accurate: High confidence in categorization (false positive vs. won't fix vs. re-scored vs. requires action) so teams trust the results rather than investigating every alert to validate the automation.

Explainable: Clear reasoning for every triage decision. "Flagged as false positive because test dependency" or "Re-scored to Medium because admin interface with MFA" or "Deprioritized because network segmentation prevents external exploitation." No black-box ML that teams can't validate.

Integrated: Works across your existing tool stack (Snyk, Veracode, Checkmarx, SonarQube, GitHub Advanced Security) without requiring tool migration or vendor lock-in. Triage intelligence should unify findings, not fragment them further.

Measurable: Quantifiable reduction in manual triage burden (time saved, percentage of findings auto-categorized, backlog reduction) so leadership can justify the investment and teams can see progress.
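
The "Measurable" characteristic comes down to a few counters. The sketch below assumes each finding ends up with one decision label and that only "requires action" still goes to a human. The 15-minute manual review cost and the sample batch are placeholders, not measured figures.

```python
from collections import Counter


def triage_metrics(decisions: list, minutes_per_manual_review: float = 15.0) -> dict:
    """Summarize how much of a batch was auto-categorized.

    "requires action" is assumed to be the only label that still needs a
    human; the per-review cost is a placeholder to replace with real data.
    """
    counts = Counter(decisions)
    total = len(decisions)
    auto = total - counts["requires action"]
    return {
        "total": total,
        "auto_categorized_pct": round(100 * auto / total, 1) if total else 0.0,
        "hours_saved": round(auto * minutes_per_manual_review / 60, 1),
        "by_decision": dict(counts),
    }


# Illustrative batch of 100 findings, not real data.
batch = (
    ["false positive"] * 38
    + ["won't fix"] * 30
    + ["re-scored"] * 10
    + ["requires action"] * 22
)
summary = triage_metrics(batch)
print(summary)
```

Tracking the per-decision breakdown over time also shows whether one category is drifting, for example a rising false-positive share after a new scanner is added.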

The Natural Bridge: Triage to Remediation

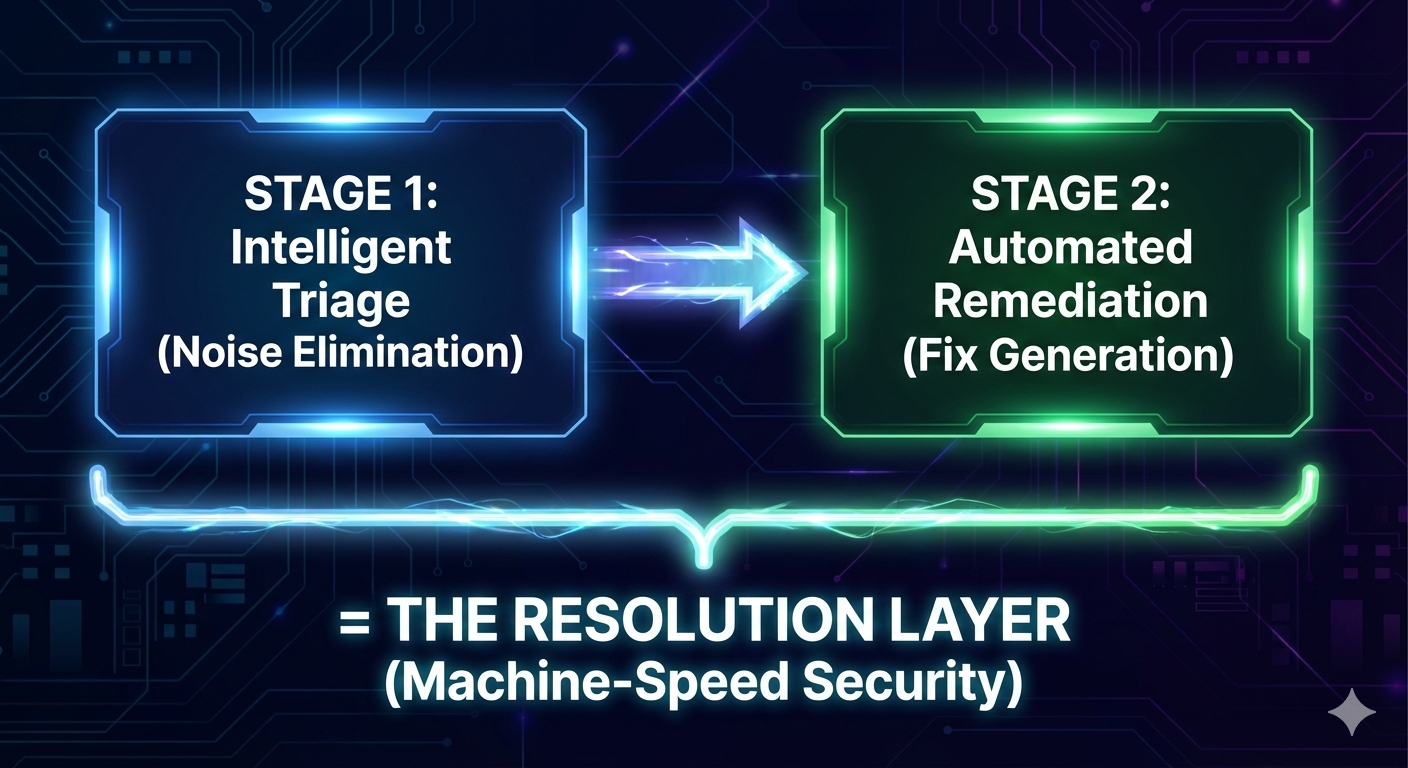

Intelligent triage creates a natural bridge to automated remediation. Once you know which 20-30% of findings require action, automated fix generation can address them.

We call this the Resolution Layer: A unified workflow where triage intelligence eliminates noise, followed by remediation automation that addresses signal. Not detection tools (you already have those). Not prioritization theater (you need ground-truth context). Automated triage plus automated remediation working together.

The customers we work with report significant reductions in manual investigation burden and measurable improvements in time-to-remediation. Not by replacing humans but by focusing human expertise on the problems that genuinely require judgment rather than grinding through 100% of alerts to find the actionable 20-30%.

Ready to see how intelligent triage automation can transform your security program?

Download: The Complete Guide to Automated Security Remediation → Explore the frameworks, evidence, and implementation patterns from organizations reducing manual triage burden by 70-80% through comprehensive automation.