The AppSec Maturity Model: From Detection to Resolution

Security teams have gotten exceptionally good at finding vulnerabilities, with the average enterprise running 5.3 scanning tools to do the job.1

But finding issues isn't the same as fixing them. Two-thirds of organizations report vulnerability backlogs exceeding 100,000 items,2 with high-severity flaws sitting unpatched for a median of 252 days.3 That's not a detection problem. That's security debt accumulating faster than teams can pay it down.

This isn't a failure of effort. What we often see with our customers is a maturity gap in how teams approach remediation.

Working with enterprise security teams, we've observed a consistent pattern in how organizations evolve their remediation capabilities. That pattern is the basis for the maturity model outlined below.

This model isn't prescriptive. Where you need to be depends on your regulatory environment, risk tolerance, and team structure. But understanding where bottlenecks typically emerge can help diagnose your own situation.

The Resolution Gap

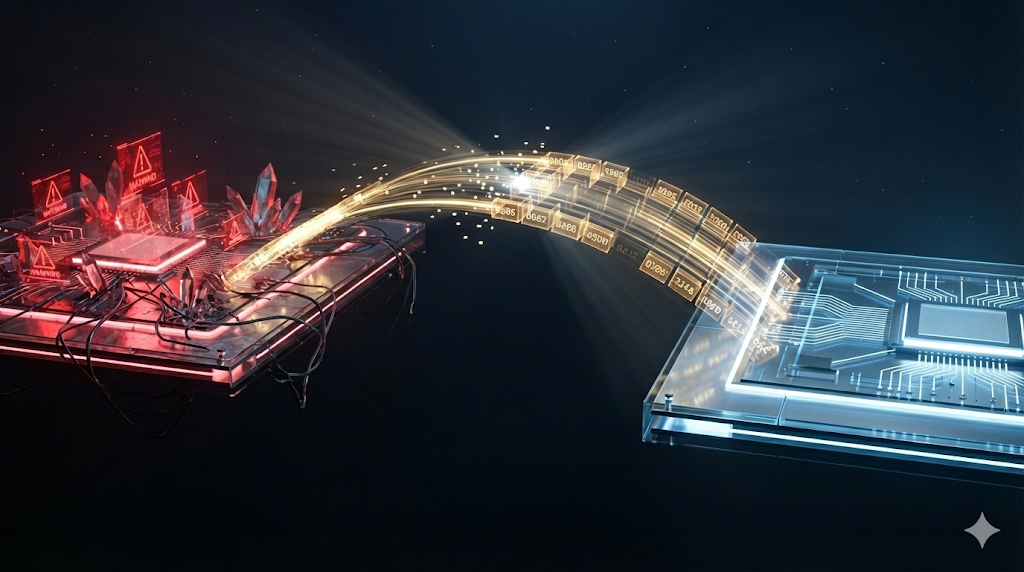

Scanners find vulnerabilities. Prioritization tools rank them. But historically, nothing has fixed them at scale. This gap between detection and remediation is where most organizations stall.

The math tells the story. Contrast Security found that an average application generates about 17 new vulnerabilities per month, while AppSec teams only manage to fix about six.4 That's a net gain of roughly 11 open vulnerabilities per application, every month.
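
To make that arithmetic concrete, here is a minimal sketch that projects open findings for a single application using the two monthly rates cited above (about 17 introduced, about six fixed). It assumes both rates stay constant and that the backlog starts empty; real inflow and fix rates fluctuate from month to month.

```python
def project_backlog(new_per_month: float, fixed_per_month: float,
                    months: int, starting_backlog: float = 0.0) -> list[float]:
    """Return the open-vulnerability count at the end of each month.

    Assumes constant inflow and fix rates (a simplification). Fixes can't
    exceed what is open, so the backlog never goes negative.
    """
    backlog = starting_backlog
    history = []
    for _ in range(months):
        backlog += new_per_month                          # new findings arrive
        backlog = max(0.0, backlog - fixed_per_month)     # fixes close some
        history.append(backlog)
    return history


# Rates from the Contrast Security figures above: ~17 new, ~6 fixed per month.
yearly = project_backlog(new_per_month=17, fixed_per_month=6, months=12)
print(yearly[-1])  # 132.0 open findings after one year, for one application
```

Because the gap is per application, it scales linearly with portfolio size: a few hundred applications turn an 11-per-month gap into thousands of new open findings a month.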

The Four Levels of Enterprise Application Security Maturity

Level 1: Manual Triage and Remediation

Security analysts review findings in scanner consoles, manually investigate each alert, and coordinate fixes through tickets or ad-hoc requests to development teams.

Where you get stuck: Triage becomes the AppSec bottleneck. Industry research suggests false positive rates of 71% to 88% for many scanning tools,5 which means you spend significant time investigating alerts that turn out to be non-issues. Alert fatigue sets in. When you're triaging faster than you're fixing, the backlog naturally grows.
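
A back-of-the-envelope calculation shows what that range means in practice. The sketch below splits a batch of findings into real issues and false positives and estimates the analyst hours spent dismissing noise. The 30-minutes-per-alert investigation cost is a hypothetical placeholder, not a figure from the research cited here; substitute your own.

```python
def triage_breakdown(findings: int, fp_rate: float,
                     minutes_per_alert: float = 30.0) -> dict:
    """Split a batch of findings into real issues vs. false positives.

    fp_rate is a fraction (0.71 for 71%). minutes_per_alert is a
    hypothetical per-finding investigation cost; replace it with your own.
    """
    if not 0.0 <= fp_rate <= 1.0:
        raise ValueError("fp_rate must be between 0 and 1")
    false_positives = round(findings * fp_rate)
    real_issues = findings - false_positives
    hours_on_noise = false_positives * minutes_per_alert / 60
    return {
        "real_issues": real_issues,
        "false_positives": false_positives,
        "hours_on_false_positives": hours_on_noise,
    }


# Compare both ends of the cited range for 1,000 findings.
for rate in (0.71, 0.88):
    print(rate, triage_breakdown(1000, rate))
```

At the low end of the range, fewer than a third of findings are actionable; at the high end, roughly one in eight.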

What actually helps:

• Scanner tuning to reduce noise (most scanners ship with overly aggressive defaults)

• Security champion programs to distribute triage burden across development teams

• Clearer prioritization frameworks so you fix high-impact issues first

• Dedicated "bug bash" sprints to periodically clear backlog

• Better communication channels between security and development (async handoffs kill velocity)

Level 2: Reachability-Informed Prioritization

You add reachability analysis to filter scanner output, focusing remediation effort on vulnerabilities that are actually exploitable in your specific environment.

Reachability analysis determines whether a vulnerability can actually be triggered by an attacker from an external entry point, filtering out theoretical vulnerabilities that can never be exploited. Doing this accurately is very difficult, and few vendors have reached that level of sophistication.
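
At its simplest, reachability can be framed as a graph search: start from externally exposed entry points, walk the call graph, and check whether any path reaches the function containing the vulnerable code. The sketch below does exactly that with a breadth-first search over a hand-written, hypothetical call graph (all function names are invented for illustration). Real tools must also build that graph from actual code and account for data flow, dynamic dispatch, reflection, and configuration, which is where the difficulty lies.

```python
from collections import deque


def is_reachable(call_graph: dict[str, list[str]],
                 entry_points: list[str], vulnerable_fn: str) -> bool:
    """Breadth-first search from entry points to the vulnerable function.

    call_graph maps each function to the functions it calls. This only
    checks control-flow reachability; it does not check whether
    attacker-controlled input actually reaches the vulnerable call.
    """
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        if fn == vulnerable_fn:
            return True
        for callee in call_graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return False


# Hypothetical call graph: one HTTP handler, plus an admin tool nothing calls.
graph = {
    "http_upload_handler": ["parse_upload", "log_request"],
    "parse_upload": ["xml_parse"],                 # flagged dependency call
    "legacy_admin_tool": ["yaml_unsafe_load"],     # not reachable from entries
}
entries = ["http_upload_handler"]

print(is_reachable(graph, entries, "xml_parse"))         # True: prioritize
print(is_reachable(graph, entries, "yaml_unsafe_load"))  # False: deprioritize
```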

Where you get stuck: You now know what's real, but fixing still requires manual effort. Every validated vulnerability needs a developer to write the patch, test it, and get it through code review. The math improves (you're fixing real issues instead of chasing as many false positives), but the fundamental capacity constraint remains. To scale beyond this point, you need to automate remediation itself, not just triage.

A commonly cited benchmark is 100 developers for every AppSec engineer.6 That structural deficit doesn't change just because you have better prioritization.

What actually helps:

• Automated fix suggestions from your existing scanners or IDE plugins

• Better developer tooling integration (if fixes require context-switching to a separate portal, they get ignored)

• Reducing friction in the remediation workflow (fewer approval steps, faster code review)

• Clear ownership models so vulnerabilities don't sit in limbo between security and development

• Metrics that track time-to-fix, not just vulnerability counts
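
Time-to-fix is straightforward to compute once you record when each finding was opened and when its fix merged. The sketch below reports the median days to fix alongside the number of findings still open. The `Finding` record layout is hypothetical; map it to whatever your scanner or ticketing system exports.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import Optional


@dataclass
class Finding:
    # Hypothetical record shape; adapt to your tracker's export format.
    finding_id: str
    opened: datetime
    fixed: Optional[datetime] = None  # None while the finding is still open


def time_to_fix_report(findings: list[Finding]) -> dict:
    """Median days from open to fix for closed findings, plus open count."""
    durations = [
        (f.fixed - f.opened).total_seconds() / 86400  # seconds -> days
        for f in findings
        if f.fixed is not None
    ]
    return {
        "median_days_to_fix": median(durations) if durations else None,
        "still_open": sum(1 for f in findings if f.fixed is None),
    }


findings = [
    Finding("F-1", datetime(2024, 1, 1), datetime(2024, 1, 11)),
    Finding("F-2", datetime(2024, 1, 5), datetime(2024, 2, 4)),
    Finding("F-3", datetime(2024, 2, 1)),
]
print(time_to_fix_report(findings))
```

Reporting the open count next to the median matters: a fast median can hide a long tail of findings that never get fixed at all.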

Level 3: Automated Remediation (Cloud-Based)

AI-powered tools generate fix suggestions or pull requests automatically. High-confidence fixes flow directly to developers, reducing the manual work per vulnerability.

Where you get stuck: For many organizations, this works well, especially if they're only using one scanner (otherwise you get vendor lock-in and cross-platform integration headaches). But there are other potential problems lurking here.

Beyond the multi-scanner issue, regulated industries often run into data governance constraints. If your compliance requirements prohibit sending source code to external services, for example, cloud-based AI remediation may not be an option, however effective it is.

The other common failure mode: low merge rates. If developers reject 80%+ of automated fixes because they don't match codebase conventions or break tests, you haven't solved the remediation problem. You've just shifted the bottleneck from "writing fixes" to "reviewing and rejecting fixes."

What actually helps:

• Evaluate your actual data residency requirements (some organizations find they have more flexibility than initially assumed)

• Track merge rates, not just "fixes generated" (a fix that doesn't get merged didn't fix anything)

• Look for tools that learn your codebase patterns rather than generating generic patches

• Integrate automated fixes into existing PR workflows so developers review them like any other code change
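
Measuring merge rate only requires classifying each automated fix by its final outcome. A minimal sketch, assuming you can export each automated fix PR's status as one of three hypothetical labels (`merged`, `closed`, `open`):

```python
from collections import Counter
from typing import Optional


def merge_rate(pr_statuses: list[str]) -> Optional[float]:
    """Fraction of *resolved* automated fix PRs that were merged.

    Still-open PRs are excluded because they haven't been accepted or
    rejected yet. Returns None when nothing has resolved, rather than a
    misleading 0%. The status labels are hypothetical.
    """
    counts = Counter(pr_statuses)
    resolved = counts["merged"] + counts["closed"]
    if resolved == 0:
        return None
    return counts["merged"] / resolved


statuses = ["merged", "closed", "closed", "merged", "open", "closed"]
print(f"{merge_rate(statuses):.0%}")  # 40%
```

Excluding open PRs is a design choice: counting them as rejections would understate fix quality for a newly adopted tool, while counting them as merges would flatter it.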

Level 4: Automated Remediation (Self-Hosted)

Remediation automation runs on your own infrastructure, with models your organization controls. You get the same automation benefits as Level 3, but code and vulnerability data never leave your environment.

Who actually needs this: Organizations with strict data sovereignty requirements. Financial services under OCC/FINRA oversight. Healthcare under HIPAA. Government contractors under ITAR. Any enterprise where "AI models used on proprietary code must remain in our infrastructure" is board-level policy.

If you can use cloud-based tools without governance issues, Level 3 is typically simpler to operate. Level 4 adds infrastructure overhead that's only justified when sovereignty is a genuine requirement.

What makes Level 4 work:

• BYOM (Bring Your Own Model) architecture so you control the AI analyzing your code

• Pull-based integrations that don't require inbound firewall rules

• Automated compliance evidence generation (if you're at Level 4 for regulatory reasons, you'll need audit trails)

• Air-gapped deployment options for the most sensitive environments
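
The pull-based pattern is what lets a self-hosted deployment avoid inbound firewall rules: the component inside your network initiates every connection, polling outward for new work, so nothing external ever needs to connect in. The sketch below shows the shape of that loop. `fetch_batch` and `handle` are hypothetical stand-ins for a scanner API client and a remediation step; they're passed in as callables so the example runs without network access.

```python
import time
from typing import Callable


def poll_for_work(fetch_batch: Callable[[], list[dict]],
                  handle: Callable[[dict], None],
                  max_polls: int, interval_seconds: float = 60.0) -> int:
    """Outbound-only polling loop; returns how many findings were handled.

    fetch_batch makes an outbound request (for example, to a scanner's API)
    and returns any new findings. Because this process initiates every
    connection, no inbound firewall rule is required.
    """
    handled = 0
    for poll in range(max_polls):
        for finding in fetch_batch():
            handle(finding)
            handled += 1
        if poll < max_polls - 1:
            time.sleep(interval_seconds)  # wait before the next outbound poll
    return handled


# Stand-in fetcher: two findings on the first poll, none afterwards.
batches = iter([[{"id": "F-1"}, {"id": "F-2"}], []])
processed = []
count = poll_for_work(lambda: next(batches, []), processed.append,
                      max_polls=2, interval_seconds=0)
print(count, [f["id"] for f in processed])
```

A real deployment would run indefinitely, persist a cursor so it doesn't reprocess findings, and back off on errors; those details are omitted here.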

Diagnosing Your Bottleneck

The maturity level that matters isn't the one you aspire to. It's the one where you're currently stuck.

How much time do you spend triaging vs. fixing? If the answer is "mostly triaging," you're at Level 1 with a noise problem. Investing in automated remediation before solving triage will just generate more noise. Consider reachability analysis or better scanner tuning first.

Do you know which vulnerabilities are actually exploitable? If you're fixing based on scanner severity ratings rather than real exploitability, you're probably spending effort on theoretical risks while actual attack paths remain open.

What's your merge rate on automated fixes? If you're using AI-generated fixes but developers reject most of them, the automation isn't helping. You need better fix quality, not more fix volume. Industry benchmarks for automated vulnerability remediation tools show merge rates varying from under 20% to over 70% depending on the tool.

Is data governance blocking your options? If you've evaluated cloud tools and legal/compliance said no, you have a sovereignty constraint. The question becomes whether self-hosted options justify the operational overhead, or whether improving your Level 2 practices is a better investment.
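
The four questions above can be read as a rough decision procedure, checked in order. The sketch below encodes one possible reading. The 0.5 thresholds for triage share and merge rate are arbitrary placeholders for illustration, not benchmarks from this article, so calibrate them against your own data.

```python
from typing import Optional


def diagnose(triage_share: float, uses_reachability: bool,
             merge_rate: Optional[float], cloud_blocked: bool) -> str:
    """Map answers to the diagnostic questions onto a likely bottleneck.

    triage_share: fraction of remediation time spent triaging (0-1).
    merge_rate: fraction of automated fixes merged, or None if unused.
    The 0.5 thresholds are hypothetical placeholders.
    """
    if triage_share > 0.5:
        return "Level 1 noise problem: tune scanners or add reachability first"
    if not uses_reachability:
        return "Prioritize by exploitability, not scanner severity"
    if merge_rate is not None and merge_rate < 0.5:
        return "Fix quality problem: improve automated fixes before adding volume"
    if cloud_blocked:
        return "Sovereignty constraint: weigh self-hosted vs. stronger Level 2"
    return "No obvious bottleneck from these questions"


print(diagnose(triage_share=0.7, uses_reachability=False,
               merge_rate=None, cloud_blocked=False))
```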

A Note on Maturity Models

Maturity models are useful for diagnosis but dangerous as scorecards. Level 4 isn't inherently "better" than Level 2. It's just more appropriate for certain contexts.

A startup with no compliance constraints and a small codebase might be perfectly well-served by Level 1 practices with good scanner tuning. A regional bank might find Level 2 with strong reachability analysis addresses their actual risk profile. A Fortune 500 financial institution with board-level AI governance mandates probably needs to get to Level 4.

The goal isn't to climb levels for their own sake. It's to identify where your current approach creates bottlenecks, and whether moving to the next level would actually address them.

References

This maturity model is based on patterns we've observed working with enterprise security teams at Pixee. We provide automated remediation with both cloud-hosted and self-hosted deployment options.

1. Ponemon Institute, "The State of Application Security 2024"

2. Veracode, "State of Software Security 2024"

3. Veracode, "State of Software Security 2024" (median time to remediation for high-severity flaws)

4. Contrast Security, "State of Application Security 2025"

5. False positive rates vary significantly by tool, configuration, and codebase. The 71-88% range is cited in multiple industry reports, including Contrast Security's, and reflects patterns we see across enterprise environments.

6. Industry surveys on AppSec staffing ratios; exact figures vary by sector, but 100:1 is a commonly cited benchmark.