$1.88M/Year on Triage Labor: The Hidden Cost Your AppSec Team Won't Tell You

Senior AppSec engineers earn $150,000-$200,000 per year to secure applications.

Yet 78% of security alerts go completely uninvestigated. They're not triaged, not resolved, just ignored. The alerts that DO get attention? Teams spend the vast majority of their time just figuring out what they mean, not fixing them.

This isn't an efficiency problem. It's a structural resource allocation failure that's costing organizations tens of millions in wasted salary spend.

The Hidden Salary Drain

The "80% of time spent on triage" translates to specific dollar costs.

A senior security engineer earning $175,000 per year (median for enterprise AppSec teams) costs roughly $84 per hour, based on a 2,080-hour work year. If that engineer spends 32 hours per week (80% of their time) on triage instead of remediation across 50 working weeks, you're paying $134,400 annually for vulnerability categorization work.

Not fixing. Categorizing.

Multiply this across a team of 14 AppSec engineers managing 500 developers (a ratio we see frequently in enterprise organizations), and you're spending $1.88 million per year on triage labor alone.

That's before considering the vulnerabilities that remain unfixed, the developer time wasted on false positives, or the business risk from the 78% of alerts that never get investigated at all.
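The arithmetic behind these figures fits in a few lines. This is a minimal sketch in Python. It assumes a 2,080-hour paid year to derive the hourly rate and 50 working weeks of triage per year, because that is what the $134,400 figure implies. The function names are illustrative.

```python
# Minimal cost model reproducing the article's triage-labor arithmetic.
# Assumptions: 2,080 paid hours per year (40 h x 52 weeks) for the hourly
# rate, and 50 working weeks per year actually spent on triage.

def hourly_rate(annual_salary: float, paid_hours: int = 2080) -> float:
    """Convert an annual salary into an hourly rate."""
    return annual_salary / paid_hours


def annual_triage_cost(rate: float, triage_hours_per_week: float,
                       working_weeks: int = 50) -> float:
    """Labor cost of the hours spent on triage over one year."""
    return rate * triage_hours_per_week * working_weeks


rate = round(hourly_rate(175_000))            # $84.13, rounded to $84 as in the text
per_engineer = annual_triage_cost(rate, 32)   # 32 h = 80% of a 40-hour week
team_cost = per_engineer * 14                 # 14-person AppSec team

print(f"Hourly rate:    ${rate}")
print(f"Per engineer:   ${per_engineer:,.0f}")
print(f"14-person team: ${team_cost:,.0f}")
```

Running it prints $84, $134,400, and $1,881,600, which match the figures above. To size the cost for your own team, swap in your salary band, triage hours, and headcount.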

"Hundreds of years of man hours of work in our backlog," one global professional services company told us. They weren't exaggerating—they'd done the math. At current triage rates, clearing their vulnerability backlog would require more security engineering time than they could hire in multiple lifetimes.

When your backlog is measured in centuries, you're not dealing with a staffing problem. You're dealing with an economic model that doesn't work. 66% of organizations are facing 100,000+ vulnerability backlogs—the math simply doesn't support manual triage at scale.
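Here is a rough sketch of how a backlog ends up measured in engineer-years. It rests on hypothetical inputs. The backlog holds 100,000 findings, and every finding goes through all three triage decisions at 2-4 hours of expert time each, which is the per-decision range used in the ASPM example later in this article. Each engineer contributes 1,600 triage hours per year (32 hours a week for 50 weeks). Real per-finding effort varies widely, so treat the output as an order-of-magnitude estimate only.

```python
# Order-of-magnitude backlog estimate. All inputs are illustrative
# assumptions, not measured data.

TRIAGE_HOURS_PER_ENGINEER_YEAR = 32 * 50   # 32 h/week for 50 working weeks


def backlog_engineer_years(findings: int, hours_per_finding: float) -> float:
    """Engineer-years of triage needed to clear a backlog."""
    return findings * hours_per_finding / TRIAGE_HOURS_PER_ENGINEER_YEAR


BACKLOG = 100_000
DECISIONS_PER_FINDING = 3
TEAM_SIZE = 14

for hours_per_decision in (2, 4):
    years = backlog_engineer_years(BACKLOG, DECISIONS_PER_FINDING * hours_per_decision)
    print(f"{hours_per_decision} h/decision: {years:,.0f} engineer-years, "
          f"~{years / TEAM_SIZE:.0f} years for a {TEAM_SIZE}-person team")
```

At these assumed rates the backlog works out to 375-750 engineer-years. For a 14-person team, that is roughly 27-54 calendar years, and the count starts before a single new finding arrives.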

A 14-person AppSec team spending 80% of their time on triage represents $1.88M in annual labor costs—before considering the vulnerabilities that remain unfixed.

The Three Hidden Decisions Behind Every Alert

Triage consumes so much time because it's not one decision. It's three entirely separate intelligence requirements, each demanding different expertise.

A Fortune 500 financial institution captured this perfectly: "50, 60 to 70 to 80% of findings are false positives OR not important OR don't need to be fixed. The triage effort is entirely manual and requires expertise."

Notice the three categories embedded in that statement. Each one is a separate assessment that demands its own expertise and organizational context.

Decision 1: False Positives (40% of findings)

Is this vulnerability even real? Your scanner reported an SQL injection risk, but code analysis shows the user input is validated three layers up the call stack. This isn't a deprioritization decision—the vulnerability simply doesn't exist. But proving that requires understanding the scanner's detection logic, tracing data flow through your codebase, and validating that your compensating controls actually work.
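Part of that proof asks whether validation sits between the untrusted source and the flagged sink. The sketch below illustrates that one check in a deliberately simplified form, and it is not how any particular scanner works. It assumes the call path from source to sink has already been extracted, and the sanitizer names are hypothetical. A real check must also confirm that each sanitizer actually neutralizes the specific taint, for example that it parameterizes SQL rather than merely trimming whitespace.

```python
from dataclasses import dataclass

# Hypothetical functions assumed to validate or parameterize untrusted input.
KNOWN_SANITIZERS = {"validate_account_id", "bind_query_params"}


@dataclass
class TaintFinding:
    rule: str
    call_path: list[str]  # source -> ... -> sink, outermost caller first


def likely_false_positive(finding: TaintFinding,
                          sanitizers: set[str] = KNOWN_SANITIZERS) -> bool:
    """True if a known sanitizer sits on the path before the sink."""
    # Exclude the sink itself: only functions upstream of it can sanitize.
    upstream = finding.call_path[:-1]
    return any(fn in sanitizers for fn in upstream)


validated = TaintFinding("sql-injection",
                         ["handle_request", "validate_account_id", "load_account", "run_sql"])
unvalidated = TaintFinding("sql-injection",
                           ["handle_request", "load_account", "run_sql"])

print(likely_false_positive(validated))    # True
print(likely_false_positive(unvalidated))  # False
```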

A mid-market financial services organization broke down their SCA findings: 40% were false positives requiring technical validation. These were not "low priority" findings. They were factually incorrect alerts that consumed senior engineering time to disprove. Reachability analysis targets this category directly: it determines whether vulnerable code paths are actually executable in your application, which eliminates false positives of this kind.

Decision 2: "Won't Fix" (30-40% of findings)

This vulnerability is real, but business context makes it acceptable risk. An XSS vulnerability in an internal-only admin tool behind three authentication layers, accessed by 12 people, used twice per month. Real vulnerability. Acceptable risk given mitigating factors.

These decisions require understanding deployment architecture, access controls, data sensitivity, compensating controls, and business risk appetite. Technical analysis alone isn't sufficient—you need organizational context that doesn't live in your scanning tools.
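A won't-fix decision can only be applied consistently once that organizational context is captured as data. The sketch below is a hypothetical policy. Its fields and thresholds (internet exposure, authentication layers, user count, data sensitivity) stand in for your own risk appetite and are not drawn from any standard.

```python
from dataclasses import dataclass


@dataclass
class DeploymentContext:
    internet_facing: bool
    auth_layers: int
    user_count: int
    handles_sensitive_data: bool


def acceptable_risk(ctx: DeploymentContext, *,
                    min_auth_layers: int = 2, max_users: int = 50) -> bool:
    """Hypothetical won't-fix policy: accept a real vulnerability only when
    every mitigating factor in the policy holds."""
    return (not ctx.internet_facing
            and ctx.auth_layers >= min_auth_layers
            and ctx.user_count <= max_users
            and not ctx.handles_sensitive_data)


# The internal admin tool from the example above (data sensitivity assumed low).
admin_tool = DeploymentContext(internet_facing=False, auth_layers=3,
                               user_count=12, handles_sensitive_data=False)
public_portal = DeploymentContext(internet_facing=True, auth_layers=1,
                                  user_count=200_000, handles_sensitive_data=True)

print(acceptable_risk(admin_tool))     # True  -> candidate for "won't fix"
print(acceptable_risk(public_portal))  # False -> remediate
```

Store each decision together with the context that justified it. That keeps the decision defensible and makes it easy to revisit if the context changes, for example if the admin tool is later exposed to the internet.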

Decision 3: Risk Re-Scoring (10-20% of findings)

Your scanner called it "Critical," but is it actually critical in YOUR environment? Research from JFrog reveals that only 15% of CVEs are exploitable more than 80% of the time, and 88% of "Critical" CVEs aren't actually critical when evaluated in deployment context.

CVSS scores vulnerabilities in isolation. Risk re-scoring evaluates: Is this code path reachable? Is the vulnerable function exposed to untrusted input? Are there network controls preventing exploitation? Is this even deployed in production?
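Those four questions can be turned into an adjustment on top of the scanner's score. The sketch below is a hypothetical heuristic with point deductions invented for illustration. It is not the CVSS environmental metric formula. It only borrows the CVSS v3.x qualitative severity bands (Low 0.1-3.9, Medium 4.0-6.9, High 7.0-8.9, Critical 9.0-10.0) to label the result.

```python
def severity_band(score: float) -> str:
    """Map a 0-10 score onto CVSS v3.x qualitative severity bands."""
    if score >= 9.0:
        return "Critical"
    if score >= 7.0:
        return "High"
    if score >= 4.0:
        return "Medium"
    if score > 0.0:
        return "Low"
    return "None"


def rescore(base_score: float, *, reachable: bool, untrusted_input: bool,
            network_controls: bool, in_production: bool) -> str:
    """Hypothetical contextual re-scoring of a scanner-reported base score."""
    if not in_production or not reachable:
        return "Informational"          # no deployed, executable path to exploit
    score = base_score
    if not untrusted_input:
        score -= 3.0                    # assumed deduction: attacker can't supply input
    if network_controls:
        score -= 2.0                    # assumed deduction: exploitation path filtered
    return severity_band(max(score, 0.0))


print(rescore(9.8, reachable=True, untrusted_input=True,
              network_controls=False, in_production=True))   # Critical
print(rescore(9.8, reachable=True, untrusted_input=True,
              network_controls=True, in_production=True))    # High
print(rescore(9.8, reachable=False, untrusted_input=True,
              network_controls=False, in_production=True))   # Informational
```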

These three decisions explain why triage requires "expertise," as that financial institution noted. Each category demands different domain knowledge: technical depth for false positives, architectural context for won't-fix decisions, and exploitability assessment for risk re-scoring. That expertise is scarce, expensive, and being consumed entirely by categorization work. Because the triage bottleneck absorbs that capacity, enterprise AppSec teams stay trapped in manual triage cycles and never progress to strategic security engineering.

Why Current Approaches Make It Worse

The industry's response to triage problems and alert fatigue has consistently made them more expensive, not less.

The Tool Sprawl Paradox

Organizations average 5.3 security scanning tools per team. The logic seems sound: more scanners mean better coverage. The reality: 71% of organizations report that 21-60% of security test results are just noise—duplicates, conflicting findings, and false positives requiring human arbitration.

Each scanner has its own false positive profile, risk scoring methodology, and alert semantics. When Veracode flags something "Critical," SonarQube rates it "Medium," and Snyk calls it a false positive, you've created a new triage job: scanner arbitration. As a Fortune 50 financial institution described it: "You have multiple scanning tools...how do you determine which one is the right set to [trust]...that has to involve humans, and then you slow down the whole process."
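The first step of scanner arbitration is finding where tools disagree about the same issue, and that step is mechanical enough to automate. The sketch below assumes findings have already been exported and normalized into plain records keyed by CWE, file, and line. The record shape is hypothetical and does not reflect any tool's actual export format. Real deduplication also usually needs fuzzier matching than exact line numbers.

```python
from collections import defaultdict

# Hypothetical normalized records from three scanners.
findings = [
    {"tool": "Veracode", "cwe": 89, "file": "app/db.py", "line": 42, "verdict": "Critical"},
    {"tool": "SonarQube", "cwe": 89, "file": "app/db.py", "line": 42, "verdict": "Medium"},
    {"tool": "Snyk", "cwe": 89, "file": "app/db.py", "line": 42, "verdict": "False Positive"},
    {"tool": "Veracode", "cwe": 79, "file": "app/views.py", "line": 7, "verdict": "High"},
    {"tool": "Snyk", "cwe": 79, "file": "app/views.py", "line": 7, "verdict": "High"},
]


def find_disagreements(records):
    """Group findings that refer to the same issue and return only the
    groups where the tools' verdicts conflict."""
    groups = defaultdict(dict)
    for r in records:
        key = (r["cwe"], r["file"], r["line"])
        groups[key][r["tool"]] = r["verdict"].lower()
    return {key: verdicts for key, verdicts in groups.items()
            if len(set(verdicts.values())) > 1}


for (cwe, path, line), verdicts in find_disagreements(findings).items():
    print(f"CWE-{cwe} at {path}:{line} needs arbitration: {verdicts}")
```

Detecting the conflict is the easy part. Resolving it still takes the three-decision analysis described earlier.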

More tools without triage intelligence is like adding more fire alarms without a fire department. You've amplified the signal, not improved the response.

ASPM's Prioritization Theater

Application Security Posture Management tools emerged as the industry's answer: better prioritization. Consolidate findings from multiple scanners, apply risk scoring, generate a refined list of "what actually matters."

But prioritization isn't triage. ASPM creates a shorter list—you still need manual evaluation of each finding across all three triage categories. A "Top 100" list feels more manageable than 10,000 alerts, but consider the math: 100 findings × 3 triage decisions × 2-4 hours of expert time per decision = 600-1,200 hours of manual work. At $84/hour, that's $50,400-$100,800 in labor cost. Per prioritization cycle.

ASPM shifts the bottleneck—it doesn't eliminate it.

Shift-Left's Strategic Collapse

Shift-left promised to solve AppSec scaling by bringing security "closer to developers." Bring the tools into IDEs, catch vulnerabilities before they reach production, reduce downstream triage burden.

A large government contractor described the reality bluntly: "Shift-left failing," citing IDE plugin adoption issues and "recommendations not as reliable as expected." Developers were uninstalling or disabling the tools.

Shift-left amplified the false positive problem because developers lack triage expertise. IDE plugins generate more noise, not more signal. Organizations spent billions implementing a strategy that assumed detection was the bottleneck. Triage was the bottleneck. Shift-left moved the noise closer to people with less ability to filter it.

Reachability's Partial Solution

Reachability analysis represents the most sophisticated answer to date: use code analysis to determine whether a vulnerability's code path is actually executable in your application. If the vulnerable function is never called, it's not exploitable—at least not via that path.

This solves the false positive problem (Decision 1) effectively. It doesn't address business context (Decision 2) or exploitability assessment (Decision 3). A reachable vulnerability in an internal admin tool behind multiple authentication layers may still be acceptable risk. Reachability says "fix it," business context says "deprioritize."
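At its core, the reachability check described above is a graph search: starting from the application's entry points, can any chain of calls reach the vulnerable function? The sketch below runs a minimal breadth-first search over a hand-written call graph with hypothetical function names. Production tools must build the graph from real code and handle dynamic dispatch, reflection, and callbacks, which this sketch ignores.

```python
from collections import deque


def is_reachable(call_graph: dict[str, list[str]],
                 entry_points: list[str], target: str) -> bool:
    """Breadth-first search from the entry points to the target function."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        if fn == target:
            return True
        for callee in call_graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return False


# Hypothetical call graph: the app uses the library's safe renderer only.
call_graph = {
    "main": ["parse_args", "serve"],
    "serve": ["render_page"],
    "render_page": ["templatelib.safe_render"],
    "templatelib.safe_render": ["templatelib.escape"],
    "templatelib.unsafe_eval": ["templatelib.compile_expr"],  # present, never called
}

print(is_reachable(call_graph, ["main"], "templatelib.safe_render"))  # True
print(is_reachable(call_graph, ["main"], "templatelib.unsafe_eval"))  # False
```

A `True` result only settles Decision 1. It says nothing about business context or exploitability in your environment.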

Tools that solve one of the three triage categories still leave organizations to perform the other two manually. That explains why even organizations with sophisticated reachability analysis still report massive triage burdens. Comprehensive triage automation requires addressing all three decision categories simultaneously, not just one.

The Structural Problem

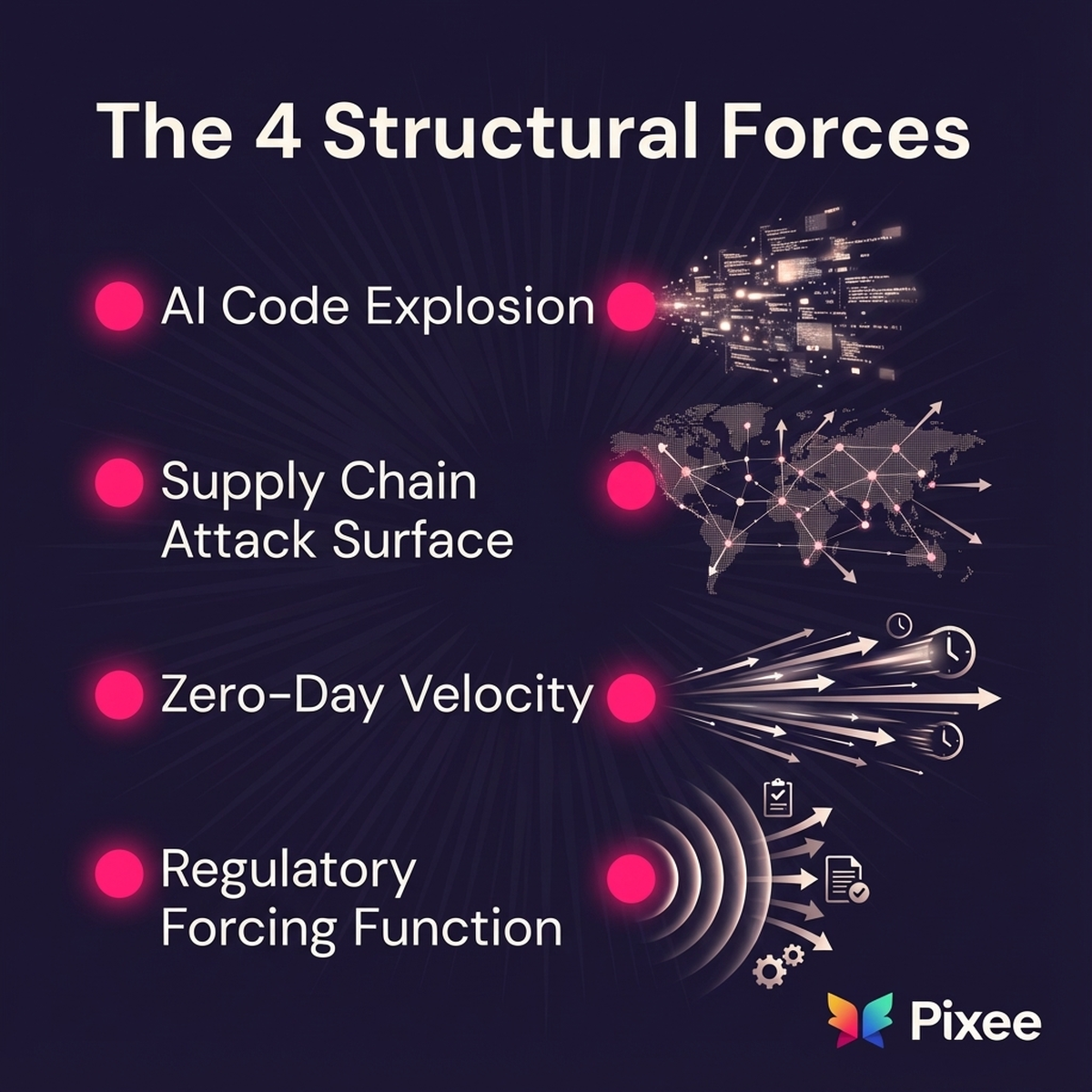

The triage crisis isn't fixable with better prioritization or more sophisticated application security testing tools. Four external forces (AI code generation, supply chain complexity, zero-day velocity, and regulatory expansion) are accelerating triage demands beyond human capacity.

AI Code Explosion

34% of organizations report that more than 60% of their code is now AI-generated, and 48% of that code contains vulnerabilities. But AI-generated code doesn't just create MORE vulnerabilities; it creates DIFFERENT vulnerability patterns that traditional scanners weren't designed to evaluate.

False positive profiles shift. Exploitability assumptions break. Risk scoring based on historical patterns becomes unreliable. This isn't just a volume increase; it's a categorical shift that requires re-architecting triage intelligence. The triage problem you optimized for last year no longer matches the code you're analyzing this year.

Supply Chain Attack Surface

77% of your codebase is dependencies you didn't write, in languages you may not know, with transitive dependency chains you can't see. Each dependency brings its own vulnerability history. 33,000 new CVEs are disclosed annually.
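Many dependency scanners flag a package because its installed version falls inside an advisory's affected range. A version match is not the same as confirming that the vulnerable code is actually present in your build, and the sketch below separates the two. It assumes a hypothetical advisory record that names both an affected version range and the vulnerable symbol. It also assumes a set of symbols your build actually references, which a real tool would derive from import or call-graph analysis. The package name is made up, and the simple parser handles only plain numeric versions of equal length such as `2.3.0`.

```python
from dataclasses import dataclass


def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain numeric version such as '2.3.0' into a comparable tuple."""
    return tuple(int(part) for part in version.split("."))


@dataclass
class Advisory:
    package: str
    introduced: str          # first affected version (inclusive)
    fixed: str               # first fixed version (exclusive)
    vulnerable_symbol: str   # function the advisory says is vulnerable


def triage_dependency(installed: dict[str, str], used_symbols: set[str],
                      adv: Advisory) -> str:
    version = installed.get(adv.package)
    if version is None:
        return "not affected: package not installed"
    v = parse_version(version)
    if not parse_version(adv.introduced) <= v < parse_version(adv.fixed):
        return "not affected: version outside range"
    if adv.vulnerable_symbol not in used_symbols:
        return "version match only: vulnerable code never referenced"
    return "confirmed: vulnerable symbol in use"


adv = Advisory("examplelib", "2.0.0", "2.4.1", "examplelib.xml.parse_unsafe")
installed = {"examplelib": "2.3.0"}

print(triage_dependency(installed, {"examplelib.http.get"}, adv))
print(triage_dependency(installed, {"examplelib.xml.parse_unsafe"}, adv))
```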

"Won't fix" decisions now require understanding package maintainer behavior. Risk re-scoring requires analyzing code you don't control. False positives hide in dependency scanner heuristics that match version numbers without confirming vulnerable code is actually present in your build. Modern software architecture fundamentally changed what triage intelligence must cover.

Zero-Day Velocity

In a single recent week, seven exploited zero-days (three in Chrome, plus Fortinet FortiWeb, XWiki, Unifi Access, and N-able N-central) surfaced alongside RondoDox botnet activity, demonstrating the response-velocity gap. When seven exploited vulnerabilities drop in a week, you must rapidly determine: (1) Is our scanner's finding a false positive or a real instance? (2) Is this exploitable in OUR environment? (3) Can we accept this risk temporarily?

Mandiant reports that the average time from vulnerability disclosure to weaponization is 15 days. Manual triage takes weeks to months. The gap between threat emergence and triage completion is growing.

Regulatory Forcing Function

NIS2 expansion in the EU increases the number of organizations under mandatory cybersecurity requirements from 2,000 to 30,000+ by December 2025. It broadens coverage in sectors such as healthcare and transport, adds manufacturing, and imposes a 24-hour early-warning deadline for significant incidents.

Organizations that could previously ignore 80% of findings now operate under that 24-hour clock. When a significant incident hits, they need to know quickly which findings were real, which were exploitable, and which were consciously accepted. This forces rapid, defensible triage decisions across all three categories. "We'll get to it eventually" is no longer viable. Triage becomes compliance-critical, not just a matter of operational efficiency. Organizations evaluating on-premises AI remediation options must also consider triage automation as part of their compliance architecture.

What Changes When Triage Is Automated

Consider what becomes possible when triage intelligence operates at scanner speed rather than human speed.

Time Reallocation

That $134,400 per engineer currently spent on triage—what if it went to actual security work? Building secure frameworks. Architecting defense in depth. Training developers. Threat modeling new features. Security engineering at scale requires strategic work, not categorization labor.

Scanner Output Becomes Actionable

Multiple tools stop being a problem when you can arbitrate their disagreements automatically. Veracode says "Critical," SonarQube says "Medium," Snyk says "False Positive"—automated triage applies the three-decision framework and produces a defensible answer with supporting evidence. Scanner interoperability becomes an asset rather than a burden.

Trust Restoration

The "poisoned well" effect—where poor false positive handling destroys developer trust across all security initiatives—gets reversed when triage is accurate. Developers learn that flagged issues are actually worth their attention. Precision builds credibility. Security becomes an enabler rather than an obstacle.

Security Gates That Don't Block Velocity

When a critical vulnerability blocks your release pipeline, automated triage can provide rapid, defensible answers: False positive with supporting analysis. Real but mitigated by network controls. Critical and requires immediate remediation. Organizations stop facing the impossible choice between security and velocity because triage happens at pipeline speed.
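Those three outcomes map naturally onto a pipeline gate. The sketch below assumes an upstream triage step has already produced a result with its supporting evidence. The `TriageResult` fields and gate names are hypothetical. The key design choice is that only a real, unmitigated finding fails the build, and every pass carries the evidence that justified it.

```python
from dataclasses import dataclass, field
from enum import Enum


class Gate(Enum):
    PASS = "pass: false positive"
    PASS_WITH_EXCEPTION = "pass: real but mitigated, exception recorded"
    BLOCK = "block: requires immediate remediation"


@dataclass
class TriageResult:
    finding_id: str
    false_positive: bool
    mitigated: bool
    evidence: list[str] = field(default_factory=list)


def gate_decision(result: TriageResult) -> Gate:
    if result.false_positive:
        return Gate.PASS
    if result.mitigated:
        return Gate.PASS_WITH_EXCEPTION
    return Gate.BLOCK


def run_gate(results: list[TriageResult]) -> int:
    """Print each decision with its evidence; return a CI-style exit code."""
    exit_code = 0
    for r in results:
        gate = gate_decision(r)
        print(f"{r.finding_id}: {gate.value} ({'; '.join(r.evidence)})")
        if gate is Gate.BLOCK:
            exit_code = 1
    return exit_code


results = [
    TriageResult("F-1", True, False, ["input validated upstream"]),
    TriageResult("F-2", False, True, ["endpoint not exposed outside the VPC"]),
    TriageResult("F-3", False, False, ["reachable from public API"]),
]
code = run_gate(results)
print(f"exit code: {code}")  # a CI wrapper would pass this to sys.exit()
```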

Backlog Becomes Manageable

"Hundreds of years" of backlog collapses to weeks when you're not manually performing three distinct analyses on every finding. The problem wasn't that you had too many alerts—it was that you had insufficient intelligence per alert. Automated triage doesn't reduce alert volume; it increases triage throughput by orders of magnitude.

The Real Hidden Cost

The hidden cost isn't the $1.88 million in annual triage labor for that 14-person team, though that's substantial. It's not even the vulnerabilities that remain unfixed while engineers categorize alerts, though that's the actual security risk.

The real hidden cost is strategic opportunity loss.

Every hour your AppSec team spends proving a false positive is an hour not spent building security into your SDLC. Every day spent arbitrating scanner disagreements is a day not spent training developers to write secure code. Every week wrestling with backlog prioritization is a week not spent architecting authentication systems that prevent entire vulnerability classes.

Security talent is scarce. Security expertise is expensive. When you deploy that talent on triage instead of security engineering, you're making an economic choice—likely without realizing you made it.

Organizations with "hundreds of years" of backlog don't have a staffing problem. They have a resource allocation problem driven by inadequate triage intelligence.

The alternative is automated triage intelligence that can perform all three decisions (false positive analysis, business context application, exploitability assessment) at scanner speed.

What to Do Next

Assess where YOUR team's time actually goes. Track a week of security engineering work and categorize it: How much time on proving false positives? How much on "won't fix" decisions? How much on risk re-scoring? How much on actual remediation?

If more than 60% is triage across those three categories, you have an architectural problem, not an operational one. The solution isn't hiring more engineers, better prioritization, or more scanning tools—it's triage intelligence that matches alert velocity.
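Once a week of work is logged, the calculation is simple. Here is a minimal sketch that assumes a hypothetical log of (category, hours) entries. It uses the four categories above plus an "other" bucket, and the numbers are invented for illustration.

```python
from collections import defaultdict

TRIAGE_CATEGORIES = {"false_positive", "wont_fix", "rescoring"}


def triage_share(log: list[tuple[str, float]]) -> float:
    """Fraction of logged hours spent on the three triage categories."""
    totals: dict[str, float] = defaultdict(float)
    for category, hours in log:
        totals[category] += hours
    total = sum(totals.values())
    triage = sum(totals[c] for c in TRIAGE_CATEGORIES)
    return triage / total if total else 0.0


# Hypothetical one-week log for a single engineer.
week = [
    ("false_positive", 12.0),
    ("wont_fix", 8.0),
    ("rescoring", 6.0),
    ("remediation", 10.0),
    ("other", 4.0),
]

share = triage_share(week)
print(f"Triage share: {share:.0%}")
if share > 0.60:
    print("Above 60%: an architectural problem, not an operational one.")
```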