The AppSec Maturity Model: Where Does Your Organization Fit?

Here's a pattern we see repeatedly: organizations invest heavily in security scanners, generate massive volumes of findings, and then... nothing happens. The vulnerabilities don't get fixed. The backlog grows. And security teams wonder why all that detection investment isn't translating into actual risk reduction.

The problem isn't detection. It's the gap between detection and remediation. We call this the Resolution Gap.

Scanners find vulnerabilities. Prioritization tools rank them. But nothing actually fixes them at scale. This gap is where most organizations stall.

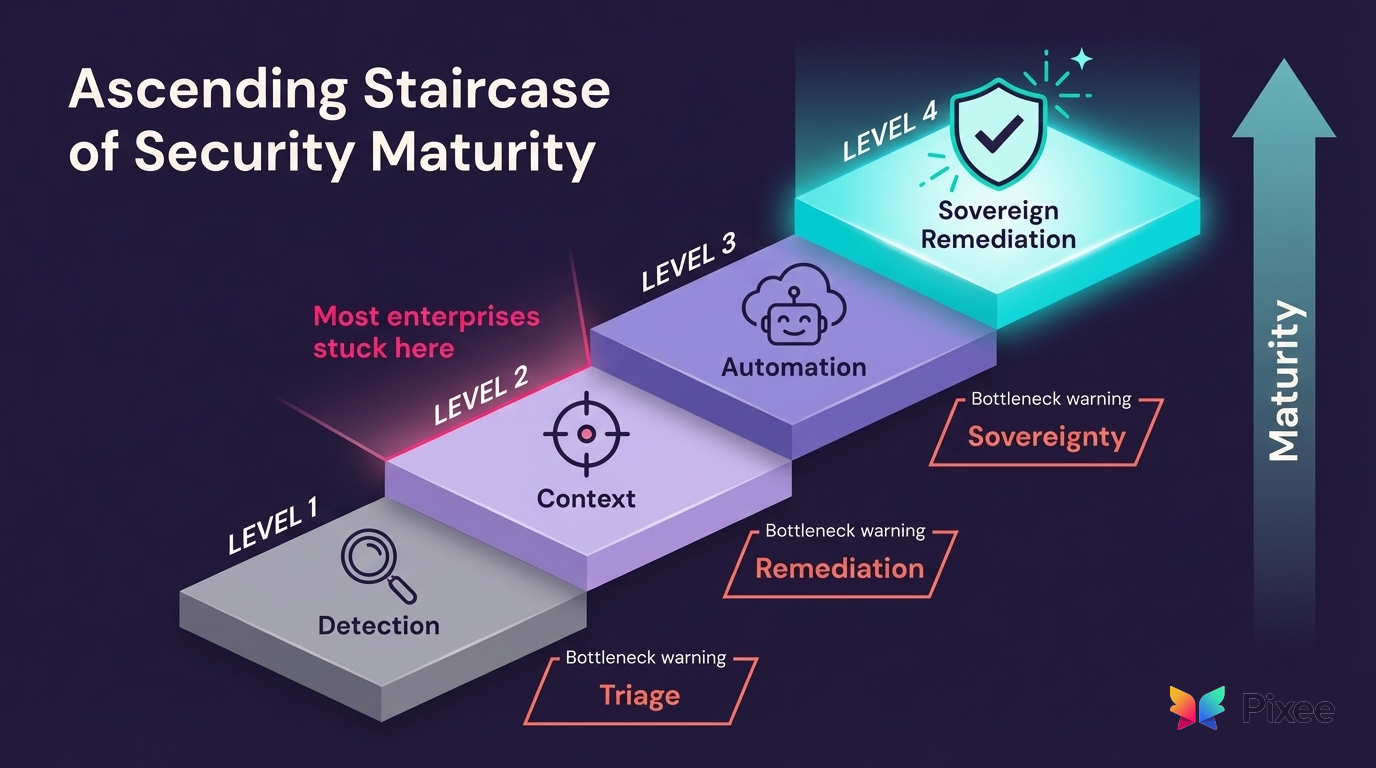

Understanding where your organization sits in this journey determines the path forward. We observe four distinct maturity levels, and most regulated enterprises find themselves stuck at the transition between stages, unable to progress without architectural changes.

Level 1: Detection (The Scanner Era)

State: High volume of findings from SAST/DAST/SCA tools

Primary Metric: Count of vulnerabilities found

The Bottleneck: Triage

At Level 1, you've invested in detection. Scanners are running. Findings are flowing. But your team is overwhelmed by false positives: often 80% or more of alerts are noise.

SCA tools compound this problem, generating 2-4x more findings than SAST. Transitive dependencies, which make up 77% of your dependency tree, multiply the triage burden, because each one can introduce vulnerabilities in code your team never chose directly. Without proper vulnerability management processes, application security programs struggle to separate real threats from scanner noise.

The result? Security teams spend their days investigating alerts that turn out to be nothing. Real vulnerabilities hide in the noise. And developers learn to ignore security alerts entirely, because most of them are wrong.

You're at Level 1 if: Your team measures success by "vulnerabilities found" rather than "vulnerabilities fixed."

Level 2: Context (The Reachability Era)

State: Reachability analysis applied to deprioritize theoretical risks

Primary Metric: Count of exploitable vulnerabilities

The Bottleneck: Remediation

Level 2 represents a significant advancement. You've implemented reachability analysis: verifying whether a vulnerability can actually be triggered by an attacker from an external entry point.

This can filter out the roughly 80% of findings that are theoretical and can never be exploited in practice. Instead of drowning in noise, security teams can focus on what's real.
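Reachability analysis is usually framed as a graph problem: start from the entry points an attacker can reach (HTTP handlers, message consumers, and so on), walk the call graph, and check whether the vulnerable function is ever reached. The sketch below is a minimal illustration under simplifying assumptions: the call graph is a hypothetical hand-built dictionary, each finding is reduced to the single function it flags, and the names `reachable_functions` and `triage` are illustrative. Real tools derive the graph from code and must handle dynamic dispatch, reflection, and data-flow conditions, all of which this ignores.

```python
from collections import deque

# Hypothetical call graph: each function maps to the functions it calls.
CallGraph = dict[str, list[str]]


def reachable_functions(graph: CallGraph, entry_points: list[str]) -> set[str]:
    """Breadth-first search outward from externally reachable entry points."""
    seen = set(entry_points)
    queue = deque(entry_points)
    while queue:
        fn = queue.popleft()
        for callee in graph.get(fn, []):
            if callee not in seen:
                seen.add(callee)
                queue.append(callee)
    return seen


def triage(findings: dict[str, str], graph: CallGraph,
           entry_points: list[str]) -> dict[str, bool]:
    """Map each finding ID to True if its vulnerable function is reachable."""
    live = reachable_functions(graph, entry_points)
    return {finding_id: fn in live for finding_id, fn in findings.items()}


if __name__ == "__main__":
    graph = {
        "http_handler": ["parse_request", "render_page"],
        "parse_request": ["yaml.load"],
        "cli_admin_tool": ["pickle.loads"],  # never called from an external entry point
    }
    findings = {"VULN-1": "yaml.load", "VULN-2": "pickle.loads"}
    print(triage(findings, graph, ["http_handler"]))
    # {'VULN-1': True, 'VULN-2': False}
```

Only `VULN-1` survives triage: `pickle.loads` is flagged by a scanner, but nothing an attacker can reach ever calls it.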

But here's the problem: knowing what's real doesn't fix the code.

You've reduced your 100,000-item backlog to 20,000 genuinely exploitable vulnerabilities. That's progress. But 20,000 vulnerabilities still need human developers to write fixes, test them, and deploy them. At typical fix rates of 6 vulnerabilities per developer per month, you're still falling behind.
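To make the scale concrete, assume (hypothetically) that no new findings arrive and that a dedicated team of 50 developers does nothing but fix vulnerabilities at the rate above:

```latex
\begin{aligned}
\text{effort} &= \frac{20{,}000 \text{ vulnerabilities}}{6 \text{ vulnerabilities per developer-month}} \approx 3{,}333 \text{ developer-months} \\
\text{time for 50 developers} &= \frac{3{,}333 \text{ developer-months}}{50 \text{ developers}} \approx 67 \text{ months} \approx 5.6 \text{ years}
\end{aligned}
```

In practice new findings keep arriving while the team works, so the real backlog shrinks more slowly than this, if at all.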

You're at Level 2 if: Your team can identify which vulnerabilities are real, but remediation velocity hasn't improved.

The Two Bottlenecks Blocking Progress

Before we discuss Levels 3 and 4, it's worth naming the two bottlenecks that prevent meaningful return on security automation investment:

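| Bottleneck | Problem | Impact |
|------------|---------|--------|
| Triage | 80%+ of alerts are false positives | Real vulnerabilities hide in the noise; developers learn to ignore alerts |
| Remediation | <20% merge rates from automated fixes | Developers reject fixes; backlog doesn't actually shrink |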

Most automation attempts fail at one of these two gates. Improving detection doesn't help if triage is broken. Generating fixes doesn't help if developers reject 80% of them.
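The two gates compound. A back-of-the-envelope sketch, using this article's figures as illustrative inputs (100,000 raw findings, roughly 80% noise, a 20% merge rate for automated fixes), shows how few real vulnerabilities actually get fixed. The function name and parameters are hypothetical; integer percentages keep the arithmetic exact.

```python
def remediation_funnel(findings: int, noise_pct: int, merge_pct: int) -> dict[str, int]:
    """Count what survives each gate: triage first, then developer merge."""
    real = findings * (100 - noise_pct) // 100  # findings left after triage removes noise
    merged = real * merge_pct // 100            # automated fixes developers actually merge
    return {
        "findings": findings,
        "real": real,
        "merged": merged,
        "unresolved_real": real - merged,
    }


if __name__ == "__main__":
    print(remediation_funnel(100_000, 80, 20))
    # {'findings': 100000, 'real': 20000, 'merged': 4000, 'unresolved_real': 16000}
```

Even with perfect triage, a 20% merge rate leaves 16,000 real vulnerabilities open; raising the merge rate is what moves that number.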

Level 3: Automation (The SaaS AI Era)

State: Teams experiment with cloud-based AI tools (GitHub Copilot Autofix, etc.)

Primary Metric: Merge rate of automated fixes

The Bottleneck: Sovereignty

At Level 3, you've recognized that manual remediation doesn't scale. You've adopted cloud-based AI tools that generate vulnerability fixes automatically.

These tools work in environments where governance permits. For teams without strict data sovereignty requirements, Level 3 represents meaningful progress.

But for regulated enterprises, Level 3 creates an impossible choice.

These AI capabilities live in vendor clouds. They require sending proprietary source code to external infrastructure for analysis and fix generation. For financial institutions, healthcare organizations, government contractors, and any enterprise with strict data governance policies, this violates fundamental compliance requirements.

You're stuck at Level 3 if: Cloud AI tools could solve your remediation problem, but governance requirements prevent adoption.

Level 4: Sovereign Remediation (The Resolution Stack)

State: AI-powered automation running entirely on-premises with customer-controlled models

Primary Metric: Remediation Velocity (MTTR < 30 days)

The Advantage: Level 3 velocity with Level 1 control

Level 4 resolves the tension between automation and sovereignty. Instead of sending code to cloud AI, you bring AI to your code: deploying models within your own infrastructure, under your own governance.

This architecture delivers what regulated enterprises actually need:

• Intelligent triage that eliminates false positives (not just detection, but accurate classification)

• Fixes developers trust (context-aware, matching your codebase patterns)

• Audit evidence that proves remediation to compliance (generated automatically as a byproduct)

• Complete data sovereignty (no code, findings, or metadata leave your perimeter)
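Data sovereignty is ultimately enforced at the network layer, but a fix-generation pipeline can also refuse to send code anywhere outside the perimeter. A minimal sketch of such a guard, assuming the self-hosted model is reached over HTTP(S) and that internal hosts are identified either by a hypothetical allowlist (`INTERNAL_HOSTS`) or by a private IP address; `is_inside_perimeter` is an illustrative name, not part of any product:

```python
import ipaddress
from urllib.parse import urlparse

# Hypothetical allowlist of internal hostnames for self-hosted model servers.
INTERNAL_HOSTS = {"llm.internal.example", "fixgen.corp.local"}


def is_inside_perimeter(endpoint: str) -> bool:
    """Return True only if the endpoint is an allowlisted host or a private IP."""
    host = urlparse(endpoint).hostname  # lowercased, port and brackets stripped
    if host is None:
        return False
    if host in INTERNAL_HOSTS:
        return True
    try:
        return ipaddress.ip_address(host).is_private
    except ValueError:
        # Neither an IP literal nor allowlisted: treat as external.
        return False


if __name__ == "__main__":
    for url in ("https://10.0.0.5:8443/v1", "https://api.example.com/v1"):
        print(url, is_inside_perimeter(url))
```

A hostname allowlist does not verify where DNS actually resolves, so a check like this complements egress firewall rules rather than replacing them.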

Level 4 isn't about replacing your scanners. It's about adding the "resolution layer" that transforms detection into deployed fixes.

You're at Level 4 if: Vulnerabilities get fixed automatically, developers routinely merge AI-generated fixes, and compliance evidence is generated as a byproduct of remediation.
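The Level 4 primary metric, MTTR under 30 days, is simple to compute once each remediated vulnerability has a detection date and a fix-deployed date. A minimal sketch, assuming those two dates are available per vulnerability; the function names and the 30-day default are illustrative:

```python
from datetime import date


def mttr_days(remediations: list[tuple[date, date]]) -> float:
    """Mean time to remediate: average days from detection to deployed fix."""
    if not remediations:
        raise ValueError("no remediated vulnerabilities to average")
    days = [(fixed - detected).days for detected, fixed in remediations]
    return sum(days) / len(days)


def meets_level4_target(remediations: list[tuple[date, date]],
                        target_days: int = 30) -> bool:
    """True if MTTR is strictly below the target (30 days by default)."""
    return mttr_days(remediations) < target_days


if __name__ == "__main__":
    records = [
        (date(2024, 1, 1), date(2024, 1, 11)),  # fixed in 10 days
        (date(2024, 1, 1), date(2024, 1, 21)),  # fixed in 20 days
    ]
    print(mttr_days(records), meets_level4_target(records))  # 15.0 True
```

This averages only vulnerabilities that have already been fixed, so long-open items do not count until they close; tracking backlog age alongside MTTR keeps the metric honest.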

Where You Might Be Stuck

If you're at a regulated enterprise, you're likely stuck between Level 1 and Level 2.

You have scanners generating massive alert volume, but lack the contextual triage capabilities to separate real risks from noise. If you've achieved Level 2 maturity, you've hit the remediation wall: knowing what's real doesn't fix the code.

And Level 3 cloud tools aren't viable due to data sovereignty requirements.

This creates a frustrating stalemate:

• Boards mandate AI adoption for productivity

• Risk committees prohibit sending code to vendor clouds

• Security teams are stuck with manual processes that can't scale

Breaking Through

The path from Level 2 to Level 4 requires an architectural shift, not just a tool purchase.

Detection is a commodity: compute is cheap and every scanner generates alerts. What's scarce is the combination of accurate triage (separating real risks from noise) and high-quality fixes (ones developers actually merge). This "scarcity layer" is where competitive advantage lives.

Level 4 addresses this scarcity by bringing intelligent automation inside your governance perimeter. Fortune 50 financial institutions have proven this path works: 76% developer merge rates, MTTR reduction from 252 days to under 30 days, and 80% false positive elimination, all while maintaining absolute data sovereignty.

Understanding where your organization sits in the AppSec maturity model is the first step toward systematic improvement. The question isn't whether Level 4 is achievable. It's whether you'll invest in the architectural changes required to get there.

Where does your organization fit? [Take our AppSec Maturity Assessment] to identify your current level and the specific blockers preventing progress.